Overview

We briefly give an overview of the FRI protocol, before specifying how it is used in the StarkNet protocol.

FRI

FRI is a protocol that works by successively reducing the degree of a polynomial, where the last reduction produces a constant polynomial (of degree $0$). The protocol typically obtains its best runtime complexity when each reduction halves the degree of its input polynomial. For this reason, FRI is usually described and instantiated on a polynomial whose degree bound is a power of $2$.

If the reductions are "correct", and it takes $n$ reductions to produce a constant polynomial in the "last layer", then this proves that the original polynomial at "layer 0" was of degree less than $2^n$.

In order to ensure that the reductions are correct, two mechanisms are used:

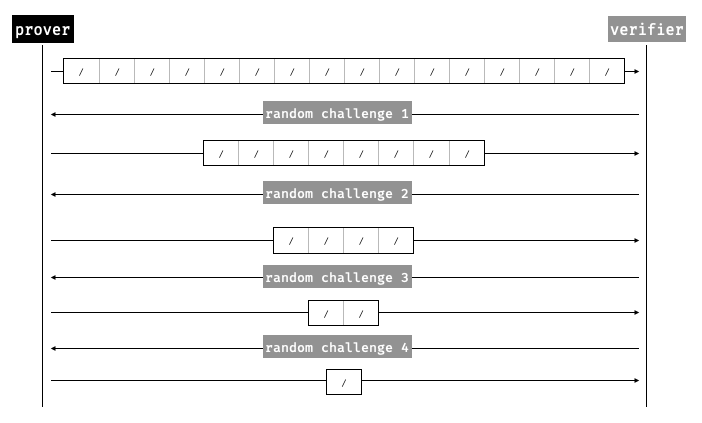

- First, an interactive protocol is performed with a verifier who helps randomize the halving of polynomials. In each round the prover commits to a "layer" polynomial.

- Second, as commitments are not algebraic objects (FRI works with hash-based commitments), the verifier queries them at multiple points to verify that an output polynomial is consistent with its input polynomial and a random challenge. (Intuitively, the more queries, the more secure the protocol.)

Setup

To illustrate how FRI works, one can use SageMath with the following setup:

# We use the starknet field (https://docs.starknet.io/architecture-and-concepts/cryptography/p-value/)

starknet_prime = 2^251 + 17*2^192 + 1

starknet_field = GF(starknet_prime)

polynomial_ring.<x> = PolynomialRing(starknet_field)

# find generator of the main group

gen = starknet_field.multiplicative_generator()

assert gen == 3

assert starknet_field(gen)^(starknet_prime-1) == 1 # 3^(order-1) = 1

# lagrange theorem gives us the orders of all the multiplicative subgroups

# which are the divisors of the main multiplicative group order (which, remember, is p - 1 as 0 is not part of it)

# p - 1 = 2^192 * 5 * 7 * 98714381 * 166848103

multiplicative_subgroup_order = starknet_field.order() - 1

assert list(factor(multiplicative_subgroup_order)) == [(2, 192), (5, 1), (7, 1), (98714381, 1), (166848103, 1)]

# find generator of subgroup of order 2^192

# the starknet field has high 2-adicity, which is useful for FRI and FFTs

# (https://www.cryptologie.net/article/559/whats-two-adicity)

gen2 = gen^( (starknet_prime-1) // (2^192) ) # integer division keeps the exponent an Integer

assert gen2^(2^192) == 1

# find generator of a subgroup of order 2^i for i <= 192

def find_gen2(i):
    assert i >= 0
    assert i <= 192
    return gen2^( 2^(192-i) )

assert find_gen2(0)^1 == 1

assert find_gen2(1)^2 == 1

assert find_gen2(2)^4 == 1

assert find_gen2(3)^8 == 1
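The assertions above only show that each generator's order divides $2^i$. As a minimal sketch, assuming plain Python integers modulo the prime in place of Sage's field elements, the following also checks that the order is exactly $2^i$. The helper `has_exact_order_pow2` is a hypothetical name introduced here for illustration; the generator `3` is taken from the setup above.

```python
# Plain-Python counterpart of the Sage setup (integers mod P, no Sage required).
P = 2**251 + 17 * 2**192 + 1  # the Starknet prime

# p - 1 factors as stated in the setup, so subgroups of order 2^i exist for i <= 192
assert P - 1 == 2**192 * 5 * 7 * 98714381 * 166848103

GEN = 3  # generator of the full multiplicative group (from the setup above)
GEN2 = pow(GEN, (P - 1) // 2**192, P)  # generator of the subgroup of order 2^192


def find_gen2(i: int) -> int:
    """Return a generator of the subgroup of order 2^i, for 0 <= i <= 192."""
    assert 0 <= i <= 192
    return pow(GEN2, 2 ** (192 - i), P)


def has_exact_order_pow2(g: int, i: int) -> bool:
    """Check g^(2^i) == 1 and, for i > 0, g^(2^(i-1)) != 1 (hypothetical helper)."""
    if pow(g, 2**i, P) != 1:
        return False
    return i == 0 or pow(g, 2 ** (i - 1), P) != 1
```

Because the only proper divisors of $2^i$ are smaller powers of two, checking the single exponent $2^{i-1}$ is enough to rule out a smaller order.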

Reduction

A reduction in the FRI protocol is obtained by interpreting an input polynomial $p$ as a polynomial of degree less than $2n$ and splitting it into two polynomials $g$ and $h$ of degree less than $n$ such that $p(x) = g(x^2) + x h(x^2)$.

Then, with the help of a verifier's random challenge $\zeta$, we can produce a random linear combination of these polynomials to obtain a new polynomial $g(x) + \zeta h(x)$ of degree less than $n$:

def split_poly(p, remove_square=True):
    assert (p.degree()+1) % 2 == 0
    g = (p + p(-x))/2 # <---------- nice trick!
    h = (p - p(-x))//(2 * x) # <--- nice trick!
    # at this point g and h are still around the same degree of p
    # we need to replace x^2 by x for FRI to continue (as we want to halve the degrees!)
    if remove_square:
        g = g.parent(g.list()[::2]) # <-- (using python's [start:stop:step] syntax)
        h = h.parent(h.list()[::2])
        assert g.degree() == h.degree() == p.degree() // 2
        assert p(7) == g(7^2) + 7 * h(7^2)
    else:
        assert g.degree() == h.degree() == p.degree() - 1
        assert p(7) == g(7) + 7 * h(7)
    return g, h

We can look at the following example to see how a polynomial of degree $7$ is reduced to a polynomial of degree $0$ in 3 rounds:

# p0(x) = 1 + 2x + 3x^2 + 4x^3 + 5x^4 + 6x^5 + 7x^6 + 8x^7

# degree 7 means that we'll get ceil(log2(7)) = 3 rounds (and 4 layers)

p0 = polynomial_ring([1, 2, 3, 4, 5, 6, 7, 8])

# round 1: moves from degree 7 to degree 3

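# note: split_poly returns (even part, odd part), so here h0 holds the even half and g0 the odd half

h0, g0 = split_poly(p0)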

assert h0.degree() == g0.degree() == 3

zeta0 = 3 # <-------------------- the verifier would pick a random zeta

p1 = h0 + zeta0 * g0 # <--------- the prover would send a commitment of p1

assert p0(zeta0) == p1(zeta0^2) # <- sanity check

# round 2: reduces degree 3 to degree 1

h1, g1 = split_poly(p1)

assert g1.degree() == h1.degree() == 1

zeta1 = 12 # <------------ the verifier would pick a random zeta

p2 = h1 + zeta1 * g1 # <-- the prover would send a commitment of p2

assert p1(zeta1) == p2(zeta1^2)

# round 3: reduces degree 1 to degree 0

h2, g2 = split_poly(p2)

assert h2.degree() == g2.degree() == 0

zeta2 = 3920 # <---------- the verifier would pick a random zeta

p3 = h2 + zeta2 * g2 # <-- the prover could send p3 in the clear

assert p2(zeta2) == p3

assert p3.degree() == 0
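The same three rounds can be replayed on bare coefficient lists. This is a minimal sketch in plain Python, assuming integers modulo the Starknet prime instead of Sage polynomials; `fold` and `evaluate` are hypothetical helper names introduced for illustration.

```python
P = 2**251 + 17 * 2**192 + 1  # the Starknet prime


def evaluate(coeffs, x):
    """Evaluate a polynomial given by low-to-high coefficients at x (Horner's rule)."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def fold(coeffs, zeta):
    """One FRI reduction: split into even/odd coefficients and return g + zeta * h."""
    assert len(coeffs) % 2 == 0
    g = coeffs[0::2]  # even-index coefficients, i.e. the coefficients of g
    h = coeffs[1::2]  # odd-index coefficients, i.e. the coefficients of h
    return [(a + zeta * b) % P for a, b in zip(g, h)]


p0 = [1, 2, 3, 4, 5, 6, 7, 8]
p1 = fold(p0, 3)      # round 1 with zeta0 = 3
p2 = fold(p1, 12)     # round 2 with zeta1 = 12
p3 = fold(p2, 3920)   # round 3 with zeta2 = 3920, a single constant remains
```

Each call halves the number of coefficients, so a list of length $2^n$ reaches a single constant after exactly $n$ folds.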

Queries

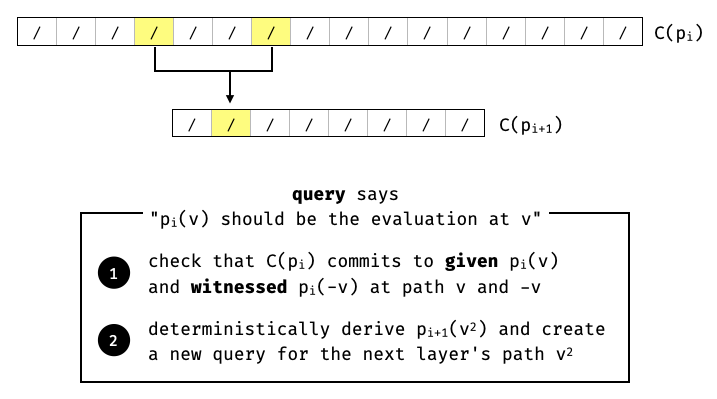

In the real FRI protocol, each layer's polynomial would be sent using a hash-based commitment (e.g. a Merkle tree of its evaluations over a large domain). As such, the verifier must ensure that each commitment consistently represents the proper reduction of the previous layer's polynomial. To do that, they "query" commitments of the different polynomials of the different layers at points/evaluations. Let's see how this works.

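Given a polynomial $p_0(x) = g_0(x^2) + x h_0(x^2)$ and two of its evaluations at some points $v$ and $-v$, we can see that the verifier can recover the two halves by computing:

$$g_0(v^2) = \frac{p_0(v) + p_0(-v)}{2}$$

$$h_0(v^2) = \frac{p_0(v) - p_0(-v)}{2v}$$

Then, the verifier can compute the next layer's evaluation at $v^2$ as:

$$p_1(v^2) = g_0(v^2) + \zeta_0 h_0(v^2)$$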

We can see this in our previous example:

# first round/reduction

v = 392 # <-------------------------------------- fake sample a point

p0_v, p0_v_neg = p0(v), p0(-v) # <--------------- the 2 queries we need

g0_square = (p0_v + p0_v_neg)/2

h0_square = (p0_v - p0_v_neg)/(2 * v)

assert p0_v == g0_square + v * h0_square # <------ sanity check

In practice, to check that the evaluation on the next layer's polynomial is correct, the verifier would "query" the prover's commitment to the polynomial. These queries are different from the FRI queries (which enforce consistency between layers of reductions): they are evaluation queries, or commitment queries, and in practice they result in the prover providing a Merkle membership proof (also called a decommitment in this specification) for the committed polynomial.
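To make the notion of a Merkle membership proof concrete, here is a minimal sketch of committing to a list of evaluations and opening one of them. It assumes SHA-256 and simple one-byte domain-separation prefixes purely for illustration; this is not the hash function or tree layout used by the protocol, and all function names are hypothetical.

```python
import hashlib


def _hash(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()  # assumption: SHA-256 as a stand-in hash


def _leaf(value: int) -> bytes:
    return _hash(b"\x00" + value.to_bytes(32, "big"))


def _node(left: bytes, right: bytes) -> bytes:
    return _hash(b"\x01" + left + right)


def merkle_commit(values):
    """Return all tree levels (leaves first); the root is levels[-1][0]."""
    assert len(values) > 0 and len(values) & (len(values) - 1) == 0  # power of two
    levels = [[_leaf(v) for v in values]]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        levels.append([_node(prev[i], prev[i + 1]) for i in range(0, len(prev), 2)])
    return levels


def merkle_open(levels, index):
    """Return the sibling hashes on the path from leaf `index` up to the root."""
    path = []
    for level in levels[:-1]:
        path.append(level[index ^ 1])  # the sibling differs in the lowest bit
        index >>= 1
    return path


def merkle_verify(root, index, value, path):
    """Recompute the root from a claimed leaf value and its sibling path."""
    node = _leaf(value)
    for sibling in path:
        node = _node(node, sibling) if index % 2 == 0 else _node(sibling, node)
        index >>= 1
    return node == root
```

The verifier only ever holds the root; each evaluation query costs one leaf value plus a number of sibling hashes logarithmic in the size of the evaluation domain.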

As we already have an evaluation at $v^2$ of the next layer's polynomial $p_1$, we can simply query the evaluation of $p_1(-v^2)$ to continue the FRI query process on the next layer, and so on:

p1_v = p1(v^2) # <-------------------------------- query on the next layer

assert g0_square + zeta0 * h0_square == p1_v # <--- the correctness check

# second round/reduction

p1_v_neg = p1(-v^2) # <-- the 1 query we need

g1_square = (p1_v + p1_v_neg)/2 # g1(v^4)

h1_square = (p1_v - p1_v_neg)/(2 * v^2) # h1(v^4)

assert p1_v == g1_square + v^2 * h1_square # p1(v^2) = g1(v^4) + v^2 * h1(v^4)

p2_v = p2(v^4) # <-- query the next layer

assert p2(v^4) == g1_square + zeta1 * h1_square # p2(v^4) = g1(v^4) + zeta1 * h1(v^4)

# third round/reduction

p2_v_neg = p2(-v^4) # <-- the 1 query we need

g2_square = (p2_v + p2_v_neg)/2 # g2(v^8)

h2_square = (p2_v - p2_v_neg)/(2 * v^4) # h2(v^8)

assert p2_v == g2_square + v^4 * h2_square # p2(v^4) = g2(v^8) + v^4 * h2(v^8)

assert p3 == g2_square + zeta2 * h2_square # we already received p3 at the end of the protocol
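The three rounds of checks above follow one pattern, which can be written as a loop. The following is a sketch in plain Python under the assumption that each layer is available as a coefficient list, standing in for a commitment that the verifier would query; `check_query`, `fold`, `evaluate` and `inv` are hypothetical helpers.

```python
P = 2**251 + 17 * 2**192 + 1  # the Starknet prime


def inv(a):
    """Modular inverse via Fermat's little theorem (P is prime)."""
    return pow(a, P - 2, P)


def evaluate(coeffs, x):
    """Evaluate a polynomial given by low-to-high coefficients at x."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def fold(coeffs, zeta):
    """One FRI reduction on coefficients: even part + zeta * odd part."""
    return [(a + zeta * b) % P for a, b in zip(coeffs[0::2], coeffs[1::2])]


def check_query(layers, zetas, v):
    """Walk one FRI query through all layers; layers[i] holds the coefficients of p_i."""
    point = v % P
    for i, zeta in enumerate(zetas):
        a = evaluate(layers[i], point)       # p_i(point), a commitment query in practice
        b = evaluate(layers[i], -point % P)  # p_i(-point)
        g = (a + b) * inv(2) % P             # g_i(point^2)
        h = (a - b) * inv(2 * point) % P     # h_i(point^2)
        point = point * point % P
        if evaluate(layers[i + 1], point) != (g + zeta * h) % P:
            return False                     # the next layer is inconsistent
    return True


zetas = [3, 12, 3920]
layers = [[1, 2, 3, 4, 5, 6, 7, 8]]
for z in zetas:
    layers.append(fold(layers[-1], z))
```

A real verifier would reuse the value $p_i(\text{point})$ obtained in the previous round instead of evaluating it again, which is why each round after the first needs only one new query.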

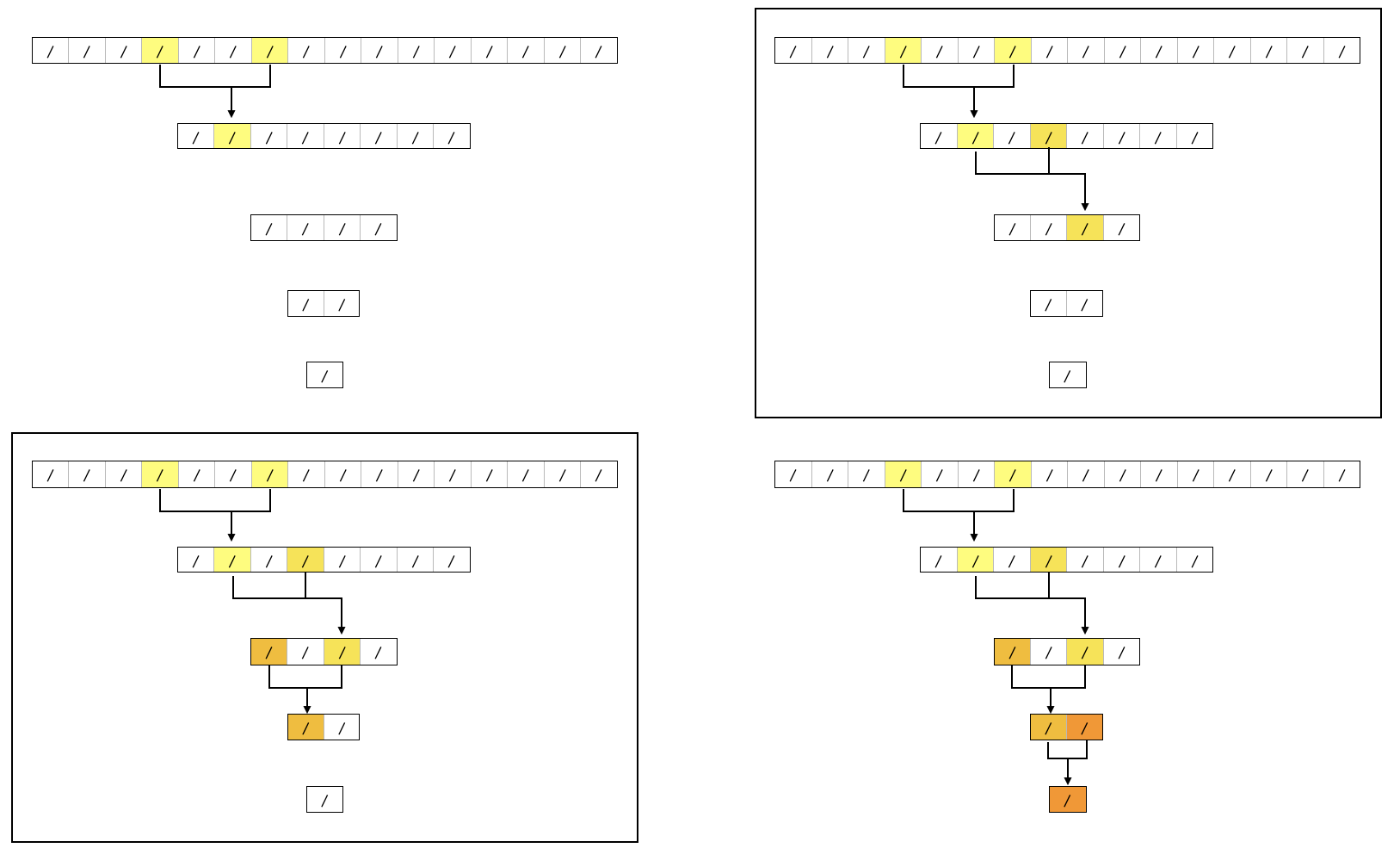

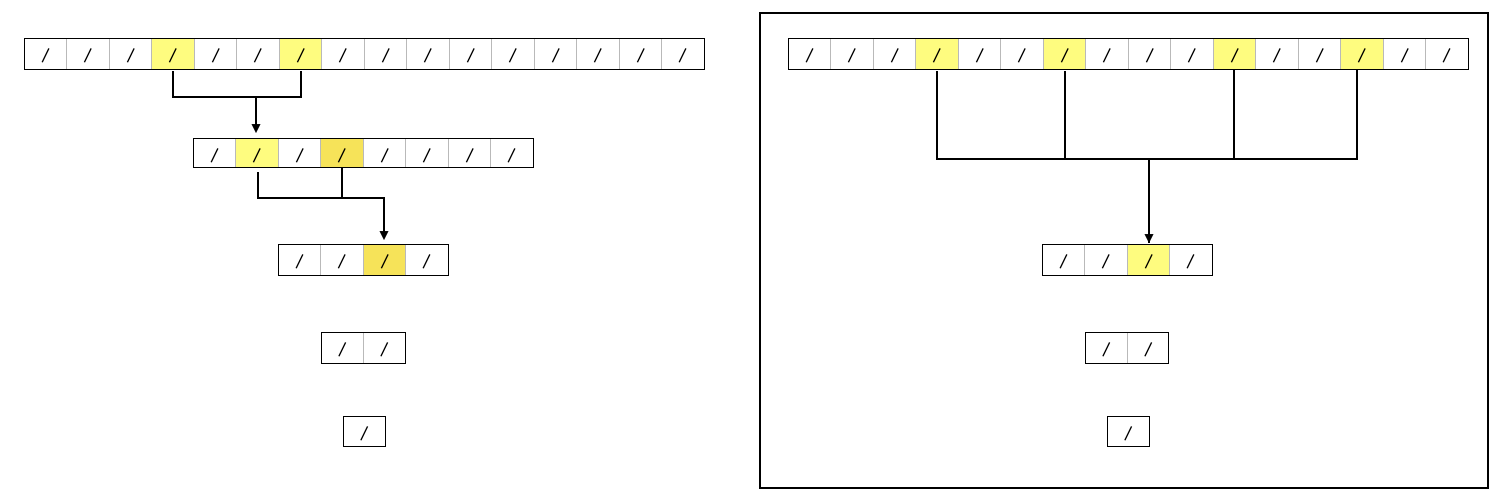

Skipping FRI layers

Section 3.11.1 "Skipping FRI Layers" of the ethSTARK paper describes an optimization which skips some of the layers/rounds. The intuition is the following: if we removed the first round commitment (to the polynomial $p_1$), then the verifier would not be able to:

- query $p_1(v^2)$ to verify that layer

- query $p_1(-v^2)$ to continue the protocol and get $g_1(v^4)$ and $h_1(v^4)$

The first point is fine, as there's nothing to check the correctness of. To address the second point, we can use the same technique we used to compute $p_1(v^2)$. Remember, we needed $p_0(v)$ and $p_0(-v)$ to compute $g_0(v^2)$ and $h_0(v^2)$. But to compute $g_0(-v^2)$ and $h_0(-v^2)$, we need the square roots of $-v^2$, that is, the points $w$ and $-w$ such that $w^2 = -v^2$, so that we can compute $g_0(-v^2)$ and $h_0(-v^2)$ from $p_0(w)$ and $p_0(-w)$.

We can easily compute them by using $\tau$ (tau), the generator of the subgroup of order $4$:

tau = find_gen2(2)

assert tau.multiplicative_order() == 4

# so now we can compute the two roots of -v^2 as

assert (tau * v)^2 == -v^2

assert (tau^3 * v)^2 == -v^2

# and when we query p2(v^4) we can verify that it is correct

# if given the evaluations of p0 at v, -v, tau*v, tau^3*v

p0_tau_v = p0(tau * v)

p0_tau3_v = p0(tau^3 * v)

p1_v_square = (p0_v + p0_v_neg)/2 + zeta0 * (p0_v - p0_v_neg)/(2 * v)

p1_neg_v_square = (p0_tau_v + p0_tau3_v)/2 + zeta0 * (p0_tau_v - p0_tau3_v)/(2 * tau * v)

assert p2(v^4) == (p1_v_square + p1_neg_v_square)/2 + zeta1 * (p1_v_square - p1_neg_v_square)/(2 * v^2)
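The computation above can be packaged as a single function that takes four evaluations of the first layer and returns the evaluation two layers down. This is a sketch in plain Python, assuming integers modulo the Starknet prime; `fold_pair` and `fold_two_layers` are hypothetical names, and the coefficients of `p2` are those obtained in the earlier example with challenges 3 and 12.

```python
P = 2**251 + 17 * 2**192 + 1   # the Starknet prime
TAU = pow(3, (P - 1) // 4, P)  # element of order 4, so TAU^2 = -1


def inv(a):
    """Modular inverse via Fermat's little theorem (P is prime)."""
    return pow(a, P - 2, P)


def evaluate(coeffs, x):
    """Evaluate a polynomial given by low-to-high coefficients at x."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def fold_pair(a, b, x, zeta):
    """Given a = p(x) and b = p(-x), return g(x^2) + zeta * h(x^2)."""
    g = (a + b) * inv(2) % P
    h = (a - b) * inv(2 * x) % P
    return (g + zeta * h) % P


def fold_two_layers(evals, v, zeta0, zeta1):
    """evals = (p0(v), p0(-v), p0(tau*v), p0(-tau*v)); returns p2(v^4)."""
    e_v, e_neg_v, e_tv, e_neg_tv = evals
    p1_pos = fold_pair(e_v, e_neg_v, v, zeta0)              # p1(v^2)
    p1_neg = fold_pair(e_tv, e_neg_tv, TAU * v % P, zeta0)  # p1(-v^2)
    return fold_pair(p1_pos, p1_neg, v * v % P, zeta1)      # p2(v^4)


p0 = [1, 2, 3, 4, 5, 6, 7, 8]
p2 = [187, 395]  # p0 folded with zeta0 = 3, then zeta1 = 12
v = 392
points = [v, -v % P, TAU * v % P, -TAU * v % P]
evals = [evaluate(p0, x) for x in points]
p2_at_v4 = fold_two_layers(evals, v, 3, 12)
```

Skipping a layer thus trades one commitment for two extra evaluations of the previous layer per query.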

Last Layer Optimization

Section 3.11.2 "FRI Last Layer" of the ethSTARK paper describes an optimization which stops at an earlier round. We show this here by removing the last round.

At the end of the second round we imagine that the verifier receives the coefficients of $p_2$ ($h_2$ and $g_2$) directly:

p2_v = h2 + v^4 * g2 # they can then compute p2(v^4) directly

assert g1_square + zeta1 * h1_square == p2_v # and then check correctness
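On the verifier side, this amounts to evaluating the received coefficients at the queried point and comparing with the folded value. Below is a sketch in plain Python, under the assumption that the verifier also bounds the number of coefficients it accepts for the last layer; `check_last_layer` is a hypothetical name, and `p1` and `p2` hold the coefficients from the earlier example.

```python
P = 2**251 + 17 * 2**192 + 1  # the Starknet prime


def inv(a):
    """Modular inverse via Fermat's little theorem (P is prime)."""
    return pow(a, P - 2, P)


def evaluate(coeffs, x):
    """Evaluate a polynomial given by low-to-high coefficients at x."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def check_last_layer(last_coeffs, max_len, point, folded_value):
    """Accept only if the last layer is short enough and matches the folded value."""
    if len(last_coeffs) > max_len:
        return False  # too many coefficients: the degree bound is violated
    return evaluate(last_coeffs, point) == folded_value % P


p1 = [7, 15, 23, 31]  # layer 1 of the running example (zeta0 = 3)
p2 = [187, 395]       # layer 2, sent in the clear as the last layer
zeta1, v = 12, 392
x = v * v % P
a, b = evaluate(p1, x), evaluate(p1, -x % P)
# g1(v^4) + zeta1 * h1(v^4), recovered from the two queries to p1
folded = ((a + b) * inv(2) + zeta1 * (a - b) * inv(2 * x)) % P
ok = check_last_layer(p2, 2, pow(v, 4, P), folded)
```

The length check matters: without it, a prover could send a last layer of higher degree than the protocol allows and still pass the evaluation check.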

FRI-PCS

Given a polynomial $f$ and an evaluation point $a$, a prover who wants to prove that $f(a) = b$ can prove the related statement for some quotient polynomial $q$ of degree $\deg(f) - 1$:

$$\frac{f(x) - b}{x - a} = q(x)$$

(This is because if $f(a) = b$ then $a$ should be a root of $f(x) - b$, and thus the polynomial can be factored in this way.)

Specifically, FRI-PCS proves that they can produce such a (commitment to a) polynomial $q$.
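As an illustration of the quotient, the following sketch computes it with synthetic division in plain Python, assuming integers modulo the Starknet prime and low-to-high coefficient lists. The function name `quotient` is hypothetical, and the example polynomial is the one used earlier in this section.

```python
P = 2**251 + 17 * 2**192 + 1  # the Starknet prime


def evaluate(coeffs, x):
    """Evaluate a polynomial given by low-to-high coefficients at x."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def quotient(coeffs, a):
    """Divide f(x) by (x - a); return (q, b) where b = f(a) is the remainder."""
    acc = 0
    out = []
    for c in reversed(coeffs):   # synthetic division runs from the top coefficient down
        acc = (acc * a + c) % P
        out.append(acc)
    b = out.pop()                # the final accumulator is the remainder f(a)
    out.reverse()                # back to low-to-high order
    return out, b


f = [1, 2, 3, 4, 5, 6, 7, 8]
q, b = quotient(f, 5)
```

The quotient has one coefficient fewer than `f`, and for any `x` the relation `f(x) - b == q(x) * (x - a)` holds modulo the prime. If a prover claimed a wrong value for `b`, the division would leave a nonzero remainder and no such polynomial `q` would exist.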

Aggregating Multiple FRI Proofs

To prove that two polynomials $f_1$ and $f_2$ exist and are of degree at most $d$, a prover simply shows using FRI that a random linear combination of $f_1$ and $f_2$ exists and is of degree at most $d$.

Note that the FRI check might need to take into account the different degree checks that are being aggregated. For example, if the polynomial $f_1$ should be of degree at most $d$ but the polynomial $f_2$ should be of degree at most $d'$ with $d' \neq d$, then a degree correction needs to happen. We refer to the ethSTARK paper for more details as this is out of scope for this specification. (As used in the STARK protocol targeted by this specification, it is enough to show that the polynomials are of low degree.)
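A small sketch of the aggregation step in plain Python follows. It assumes, for illustration only, that the combination uses successive powers of a single challenge `alpha` as weights; the text above only requires some random linear combination. The names `combine` and `degree` are hypothetical.

```python
P = 2**251 + 17 * 2**192 + 1  # the Starknet prime


def combine(polys, alpha):
    """Return sum_i alpha^i * polys[i] on low-to-high coefficient lists (assumed weights)."""
    width = max(len(p) for p in polys)
    out = [0] * width
    power = 1
    for p in polys:
        for j, c in enumerate(p):
            out[j] = (out[j] + power * c) % P
        power = power * alpha % P
    return out


def degree(coeffs):
    """Degree of a coefficient list, or -1 for the zero polynomial."""
    for i in range(len(coeffs) - 1, -1, -1):
        if coeffs[i] % P != 0:
            return i
    return -1


f1 = [1, 2, 3, 4]
f2 = [5, 6, 7, 8]
combined = combine([f1, f2], 11)

f2_too_big = [5, 6, 7, 8, 9, 10]  # degree 5, above the shared bound of 3
combined_bad = combine([f1, f2_too_big], 11)
```

The combination of two polynomials of degree at most 3 stays at degree at most 3, whereas a single polynomial above the bound pushes the combination above it for this choice of `alpha`, which is the effect a single FRI run on the combination is meant to detect.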