Introduction

Stwo is a state-of-the-art framework for creating STARK proofs that provides the following features:

- A frontend designed to be flexible to allow you to express your own constraints

- A backend that leverages Circle STARKs over the Mersenne31 prime field for fast prover performance

- Seamlessly integrated with Cairo

This book will guide you through the process of creating your own constraints and then proving them using Stwo. It will also provide in-depth explanations of the inner workings of how Stwo implements Circle STARKs.

Why Use a Proof System?

At its core, a proof system proves that a statement is valid. For example, it can prove that a function with a certain input results in a certain output, i.e. . To check the statement, we can do it either directly by computing and comparing the result to , or indirectly by verifying the proof. The verifier can benefit from the second option in terms of time and space, if the time to verify the proof is faster than the time to compute the function, or the size of the proof is smaller than the input to the statement.

This property of a proof system is often referred to as succinctness, and it is exactly why proof systems have seen wide adoption in the blockchain space, where computation on-chain is much more expensive compared to off-chain computation. Using a proof system, it becomes possible to replace a large collection of computation to be executed on-chain with a proof of execution of the same collection of computations and verifying it on-chain. This way, proofs can be generated off-chain using large machines and verified on-chain with much less computation.

But there are applications of proof systems beyond just blockchains. Generally speaking, it can be used as auxiliary data to verify that the computation of an untrusted party was done correctly. For example, when we delegate computation to an untrusted server, we can ask it to provide a proof along with the computation result that the result indeed came from running a specific computation. Another example could be to ask a server running an ML model to provide proof that it ran inference on the correct model. The size of the accompanying proof and the time to verify it will be negligible compared to the cost of running the computation, but we gain the guarantee that the computation was done correctly.

Another optional feature of proof systems is zero-knowledge, which means that the proof reveals nothing about the computation other than its validity. In general, the output of the computation will be public (i.e. revealed to the verifier), but the input will be, without loss of generality, private from the verifier. With this feature, the intermediate values computed by the prover while computing will also be hidden from the verifier.

Why Stwo?

Before we dive into why we should choose Stwo, let's define some terminology. When we talked about proof systems in the previous section, we mentioned that we can create a proof of a statement using a proof system. In reality, however, we first need to structure the function involved in the statement in a way that it can be proven. This structuring part is often referred to as the frontend, while the rest of the process of creating a proof is commonly referred to as the backend.

With that out of the way, let's dive into some of the advantages of using Stwo.

First, Stwo is a standalone framework that provides both the frontend and backend and therefore handles the entire proving process. There are other frameworks that only provide the frontend or the backend, which has its advantages as its modular structure makes it possible to pick and choose a backend or frontend of one's liking. However, having a single integrated frontend and backend reduces the complexity of the system and is also easier to maintain.

In addition, Stwo's frontend structures statements as an Algebraic Intermediate Representation (AIR), which is a representation that is especially useful for proving statements that are repetitive (e.g. the CPU in a VM, which essentially repeats the same fetch-decode-execute over and over again).

Stwo's backend is also optimized for prover performance. This is due to largely three factors.

-

It implements STARKs, or hash-based SNARKs, which boasts a faster prover compared to elliptic curve-based SNARKs like Groth16 or PLONK. This improvement comes mainly from running the majority of the computation in a small prime field (32 bits); Elliptic curve-based SNARKs, on the other hand, need to use big prime fields (e.g. 254-bit prime fields), which incur a lot of overhead as most computation does not require that many bits.

-

Even amongst multiple STARK backends, however, Stwo provides state-of-the-art prover performance by running the Mersenne-31 prime field (modulo ), which is faster than another popular 32-bit prime field like BabyBear (modulo ). We suggest going through this post for a breakdown of why this is the case.

-

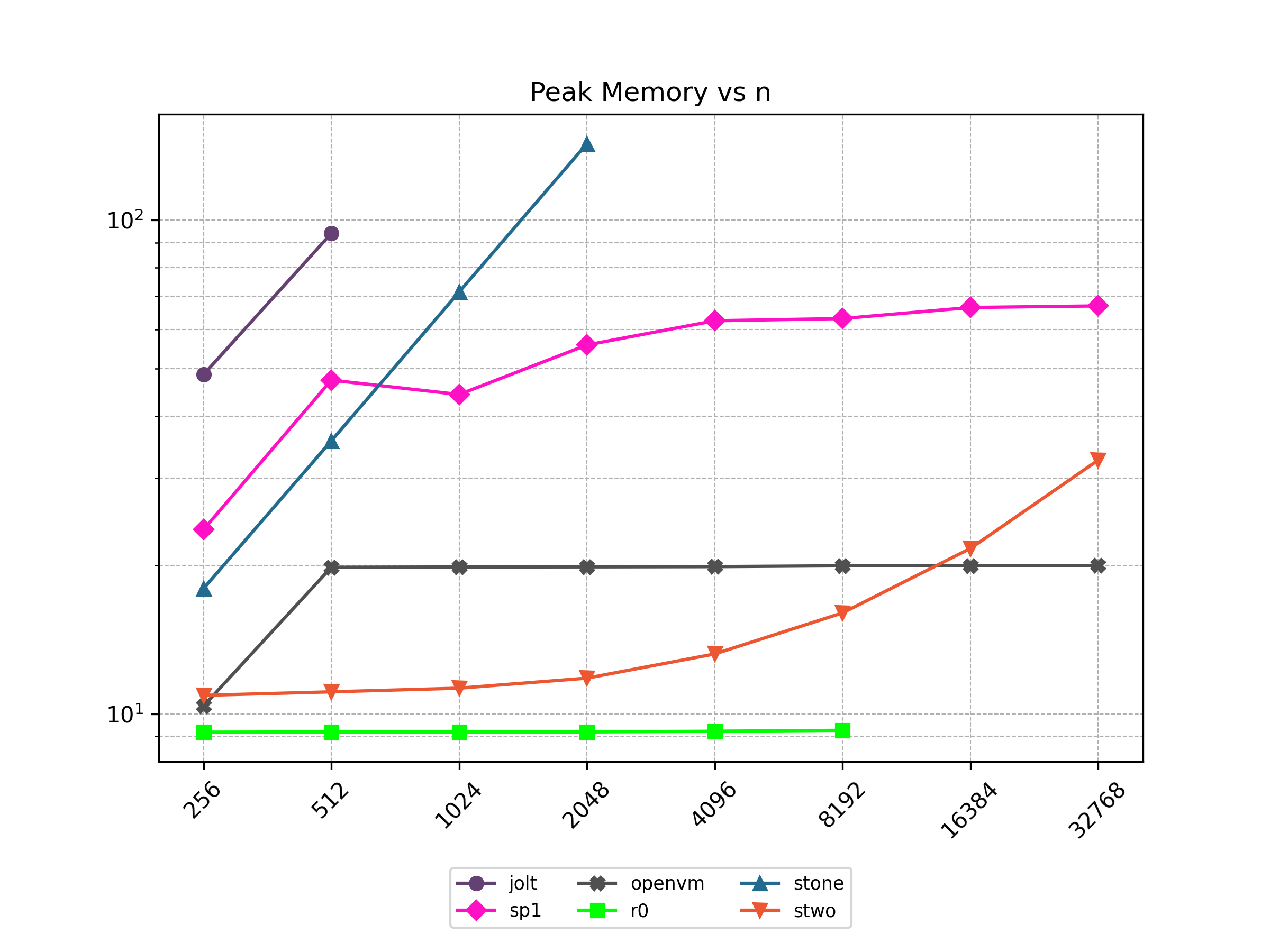

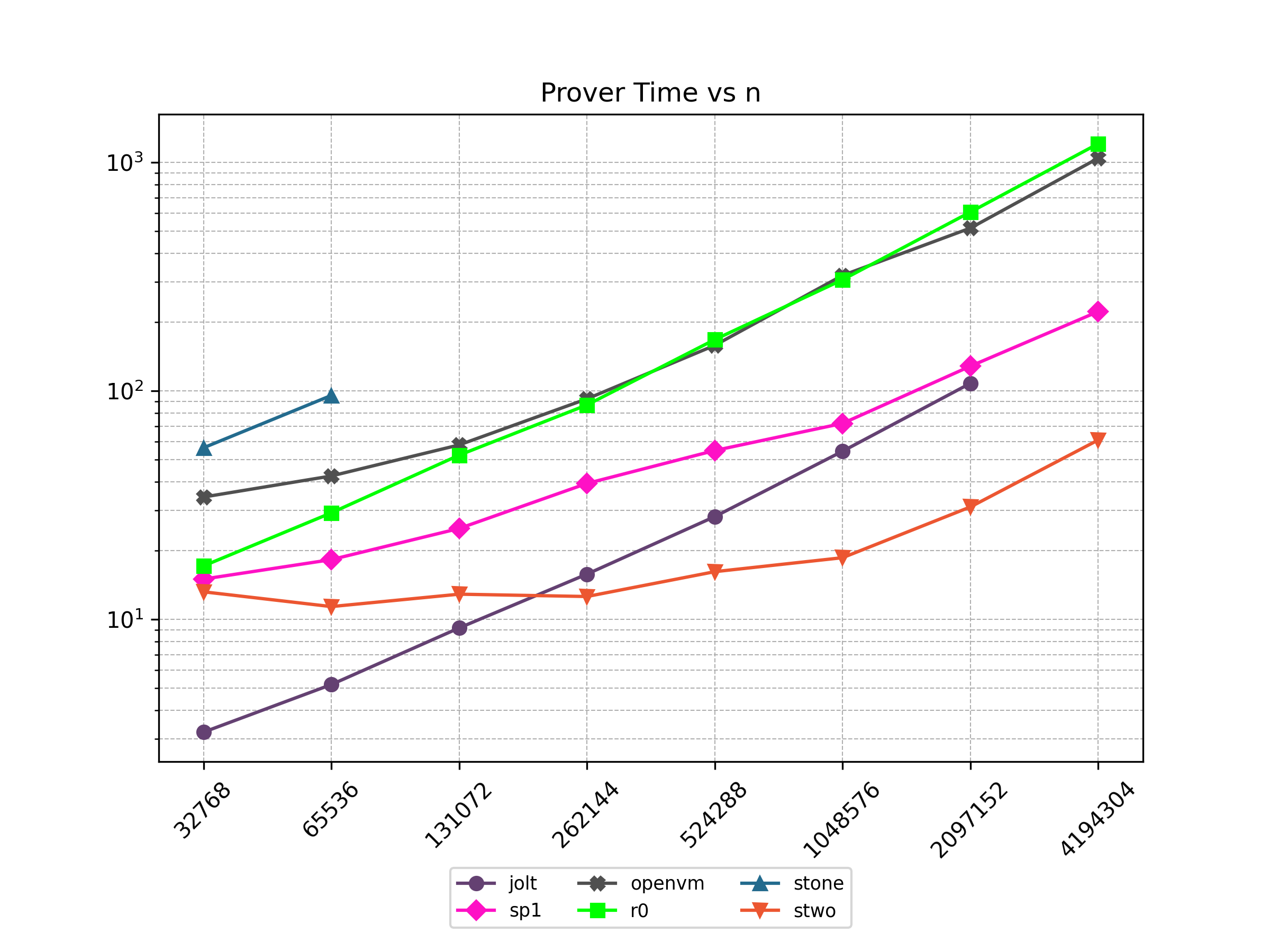

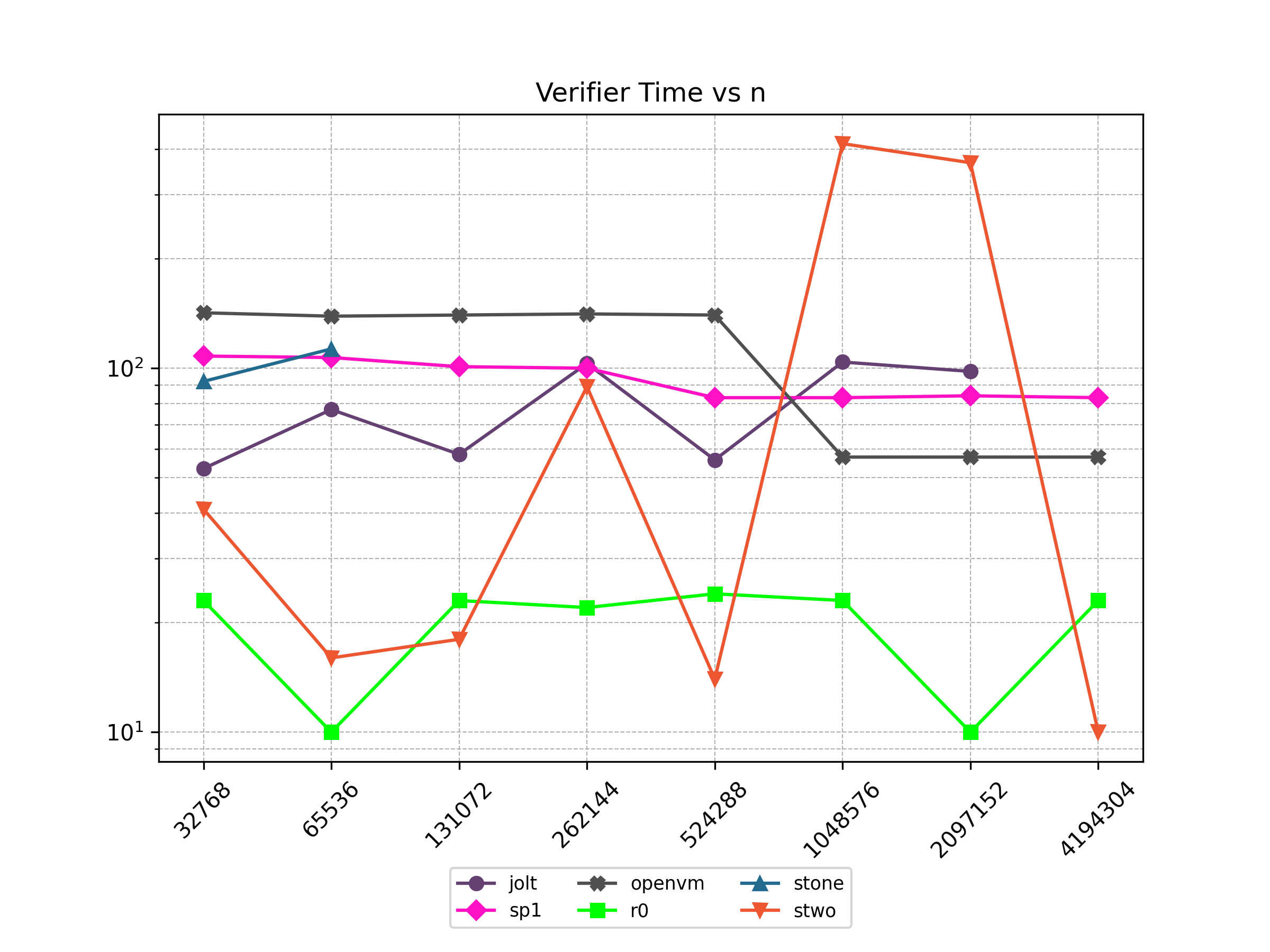

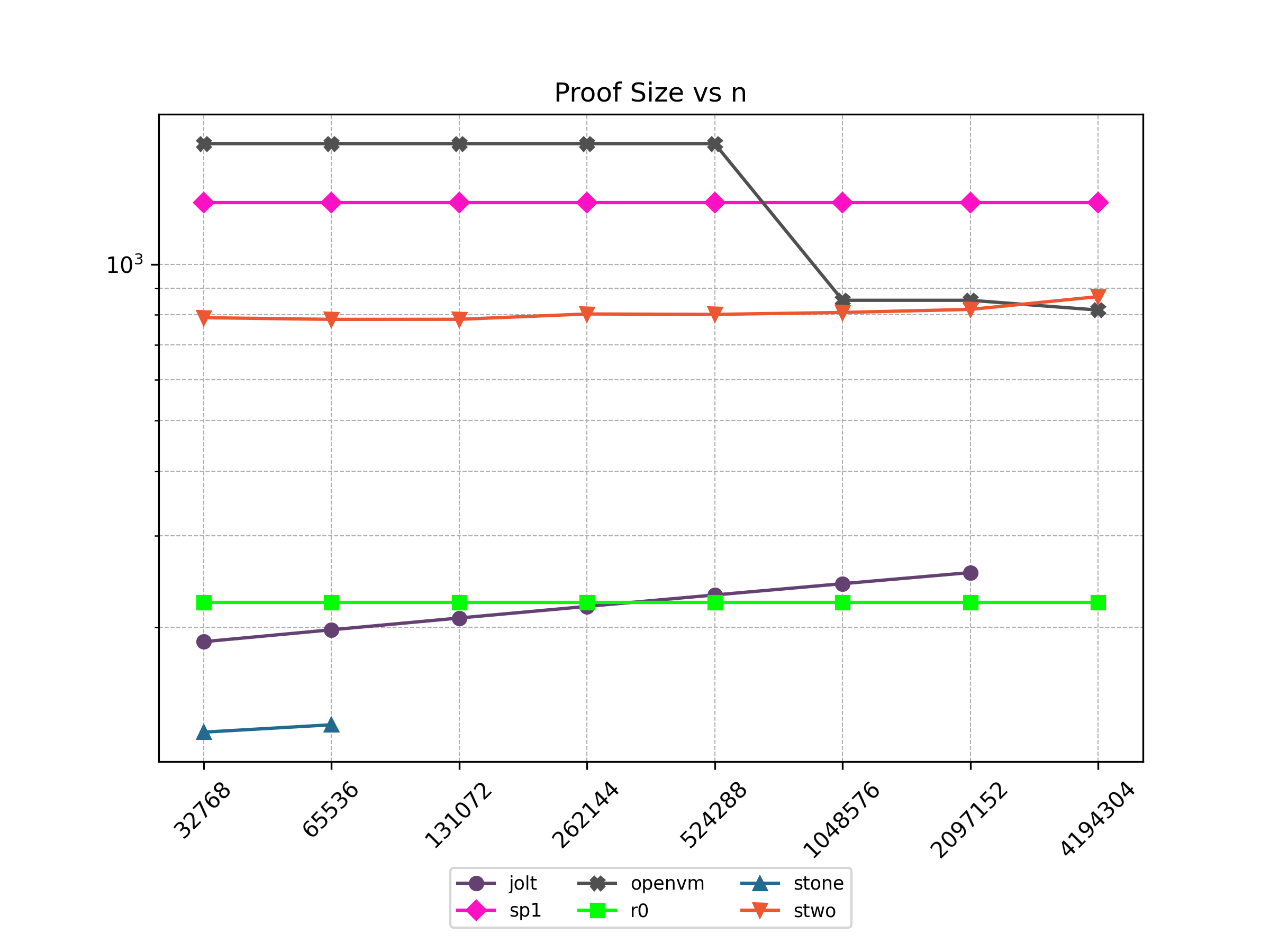

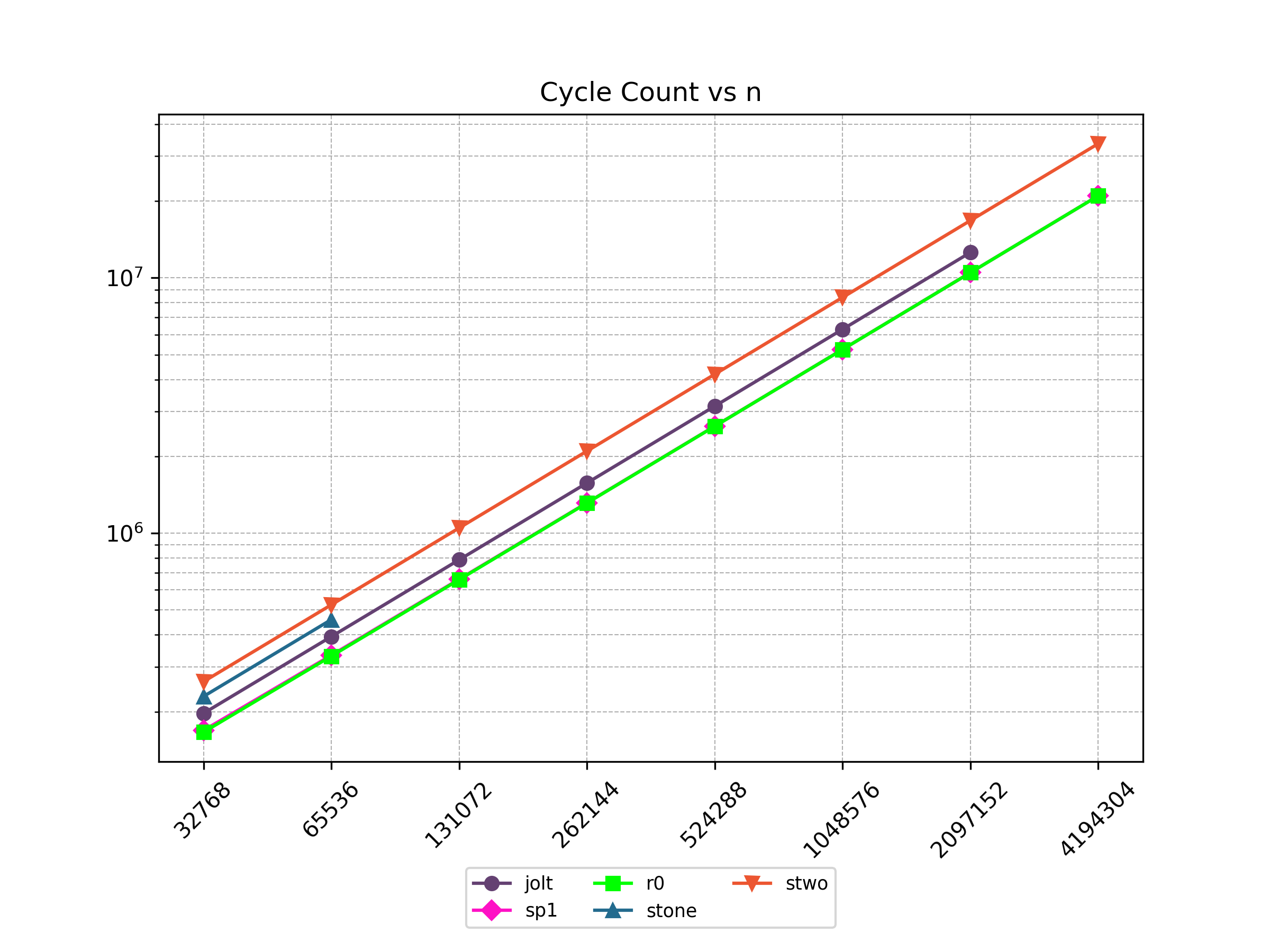

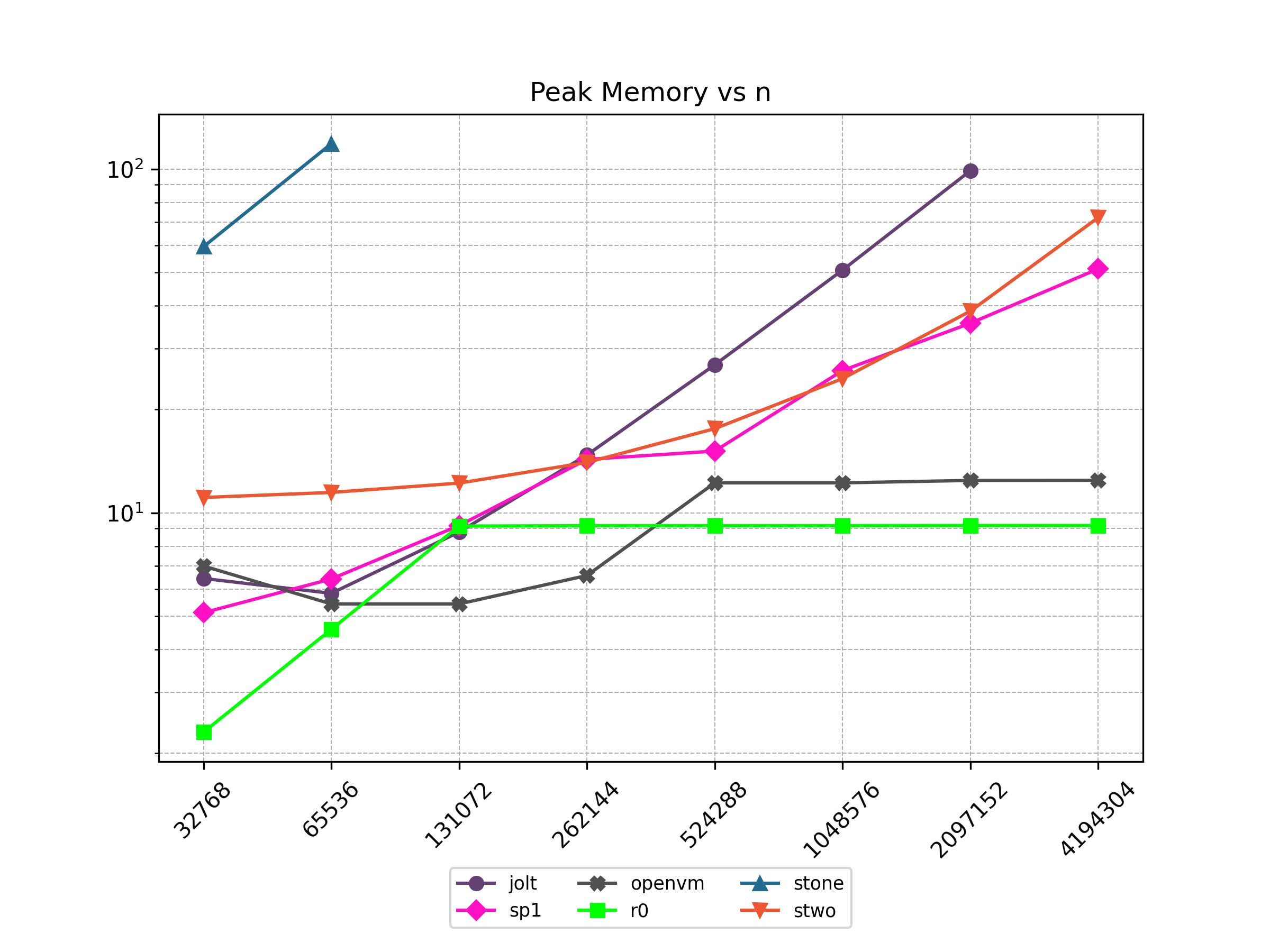

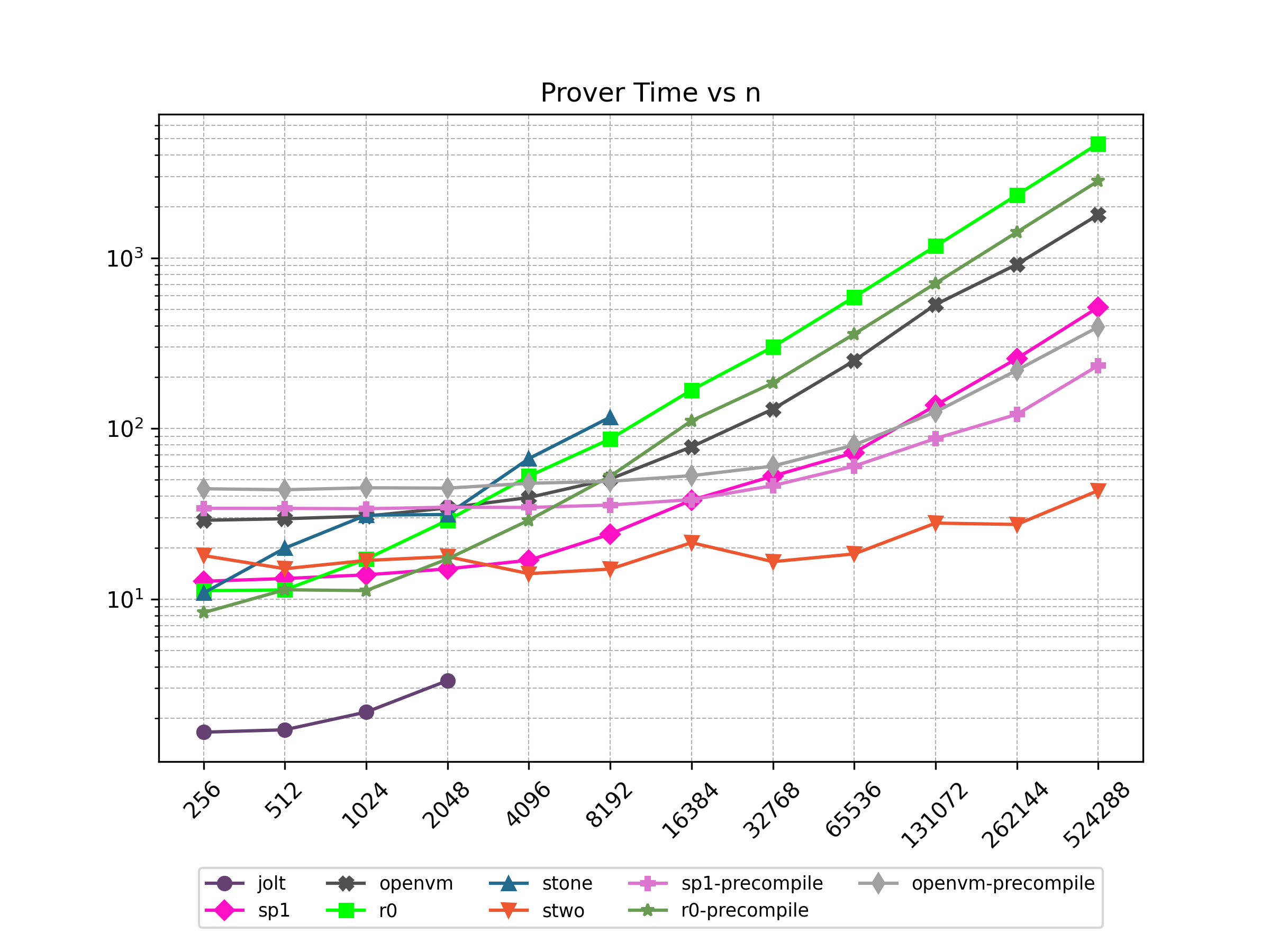

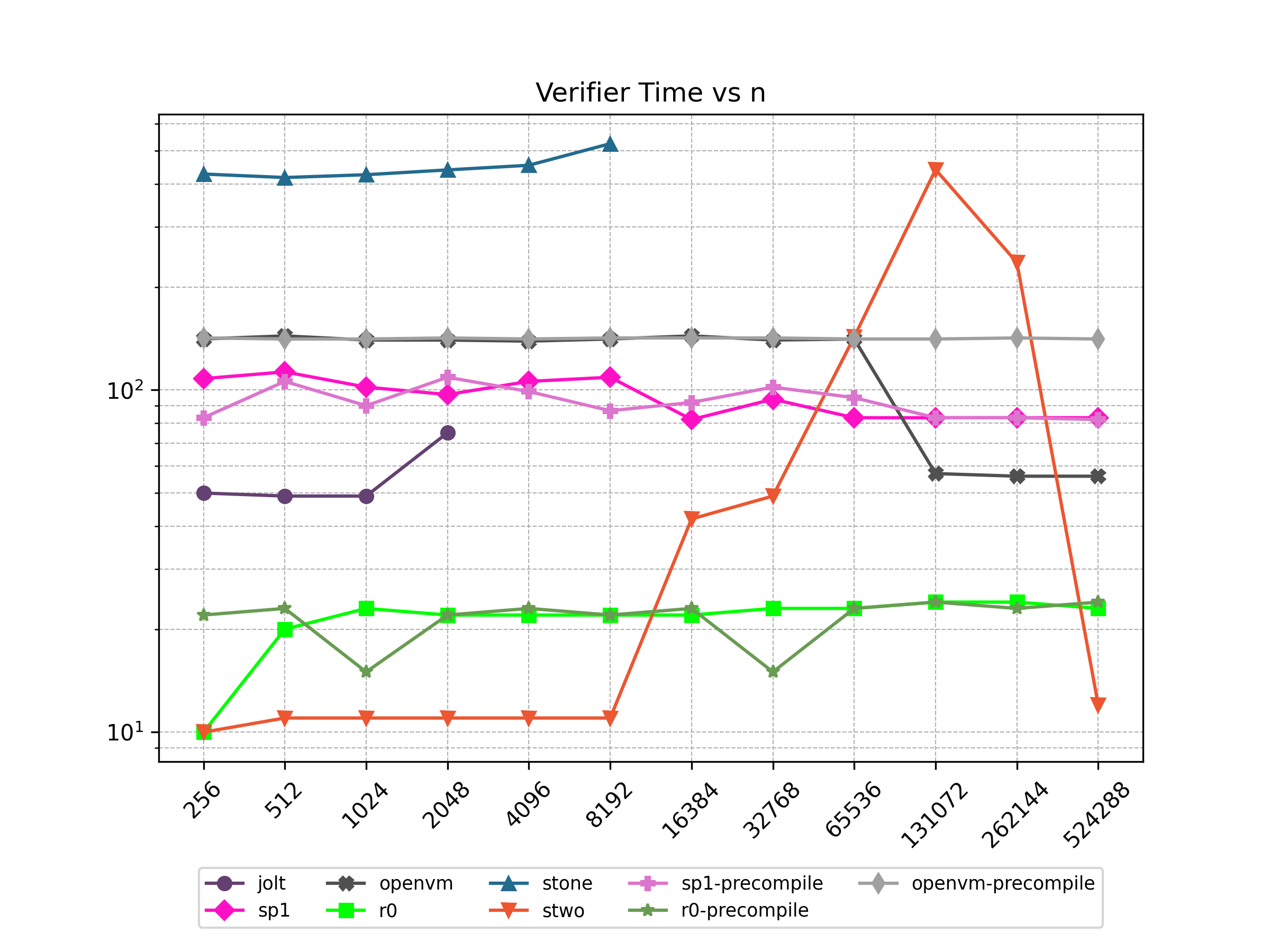

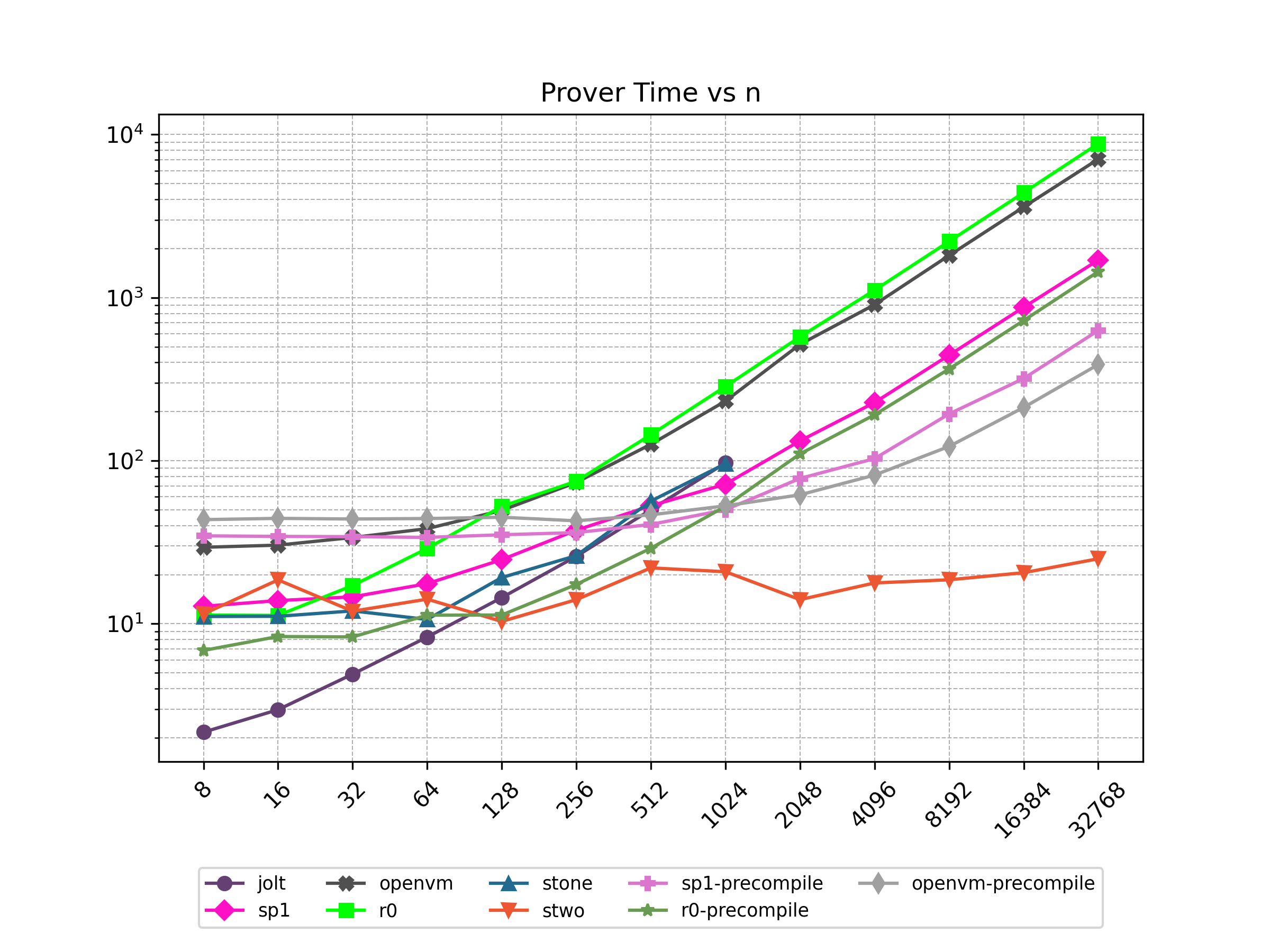

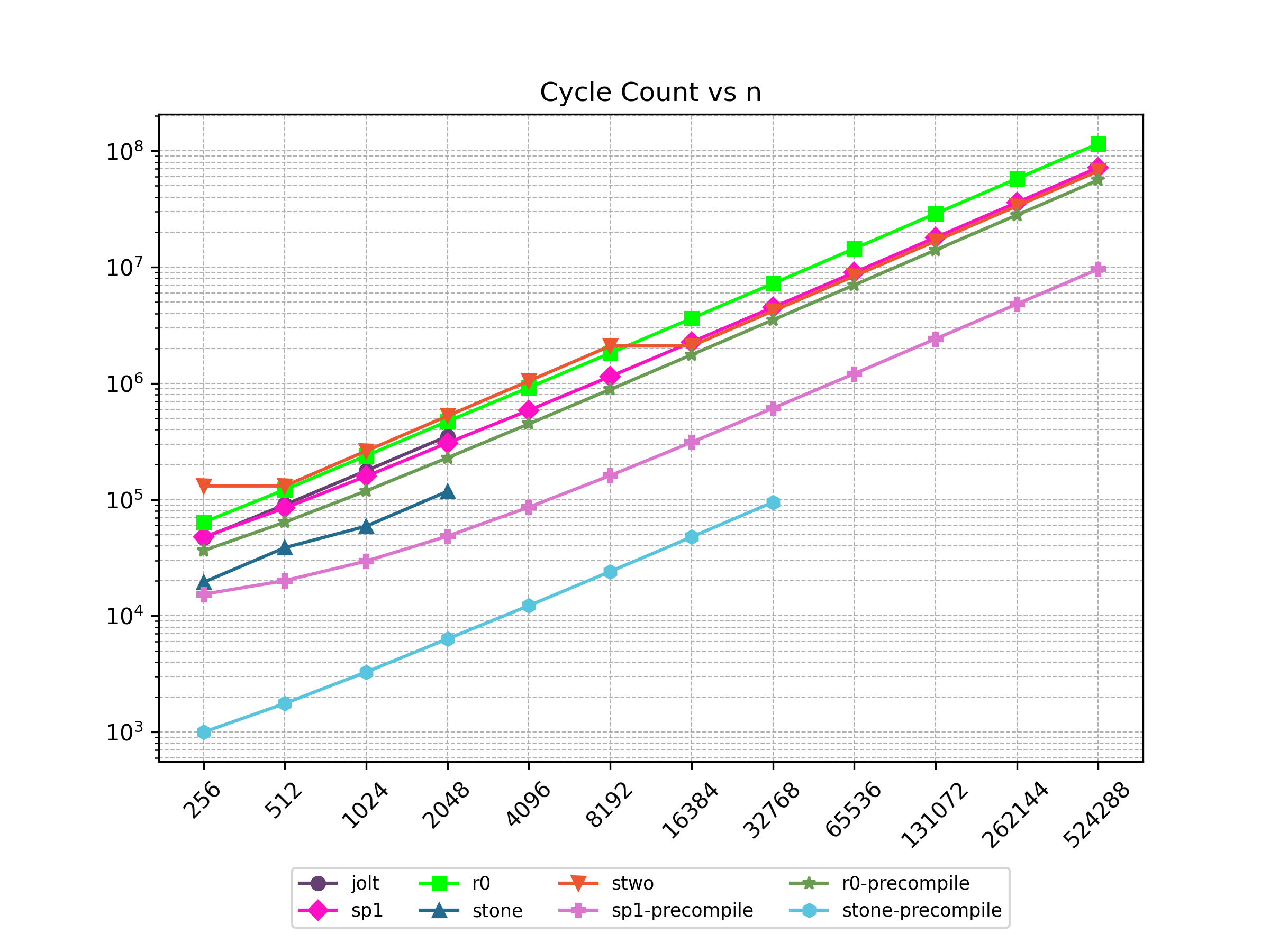

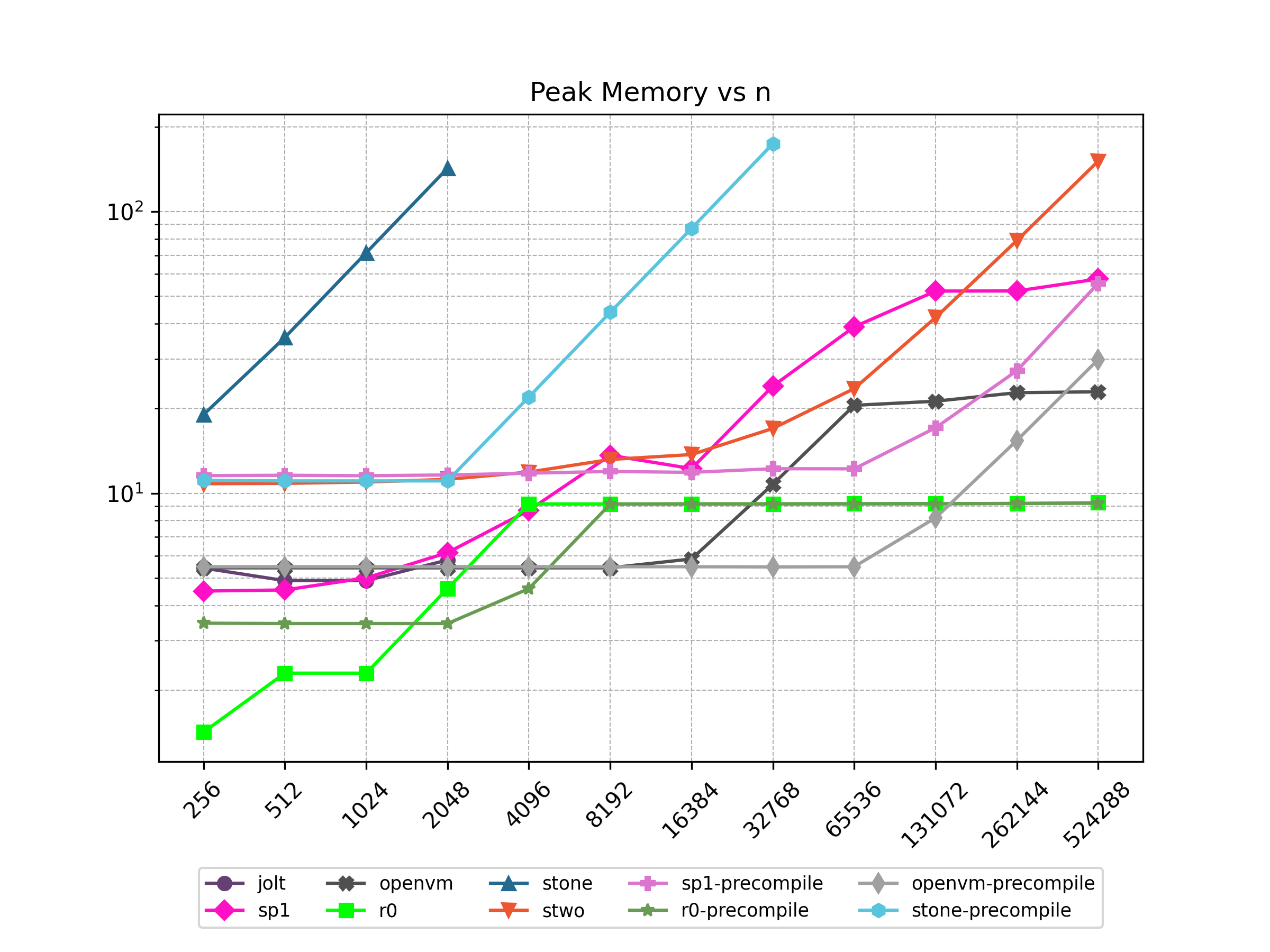

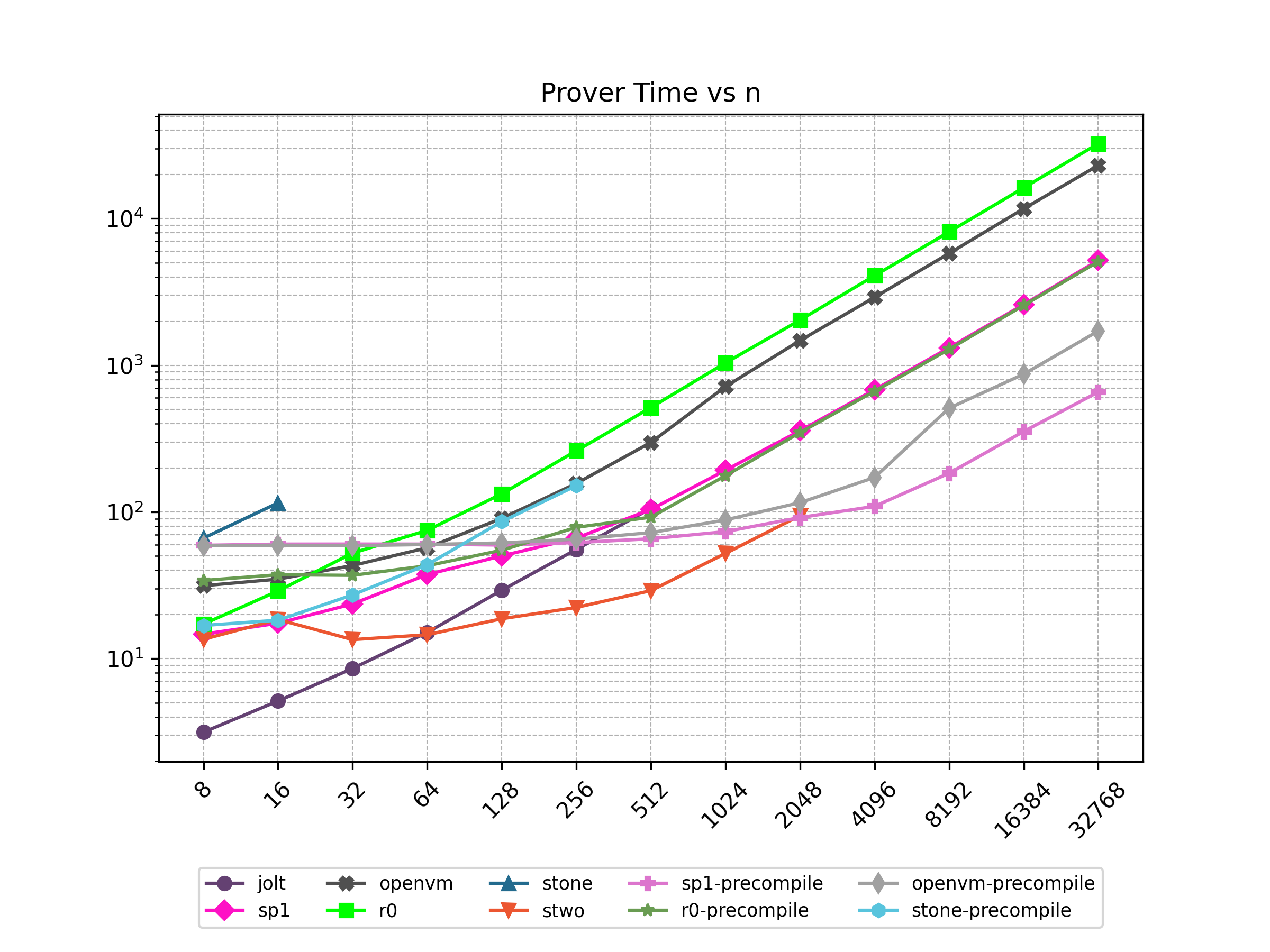

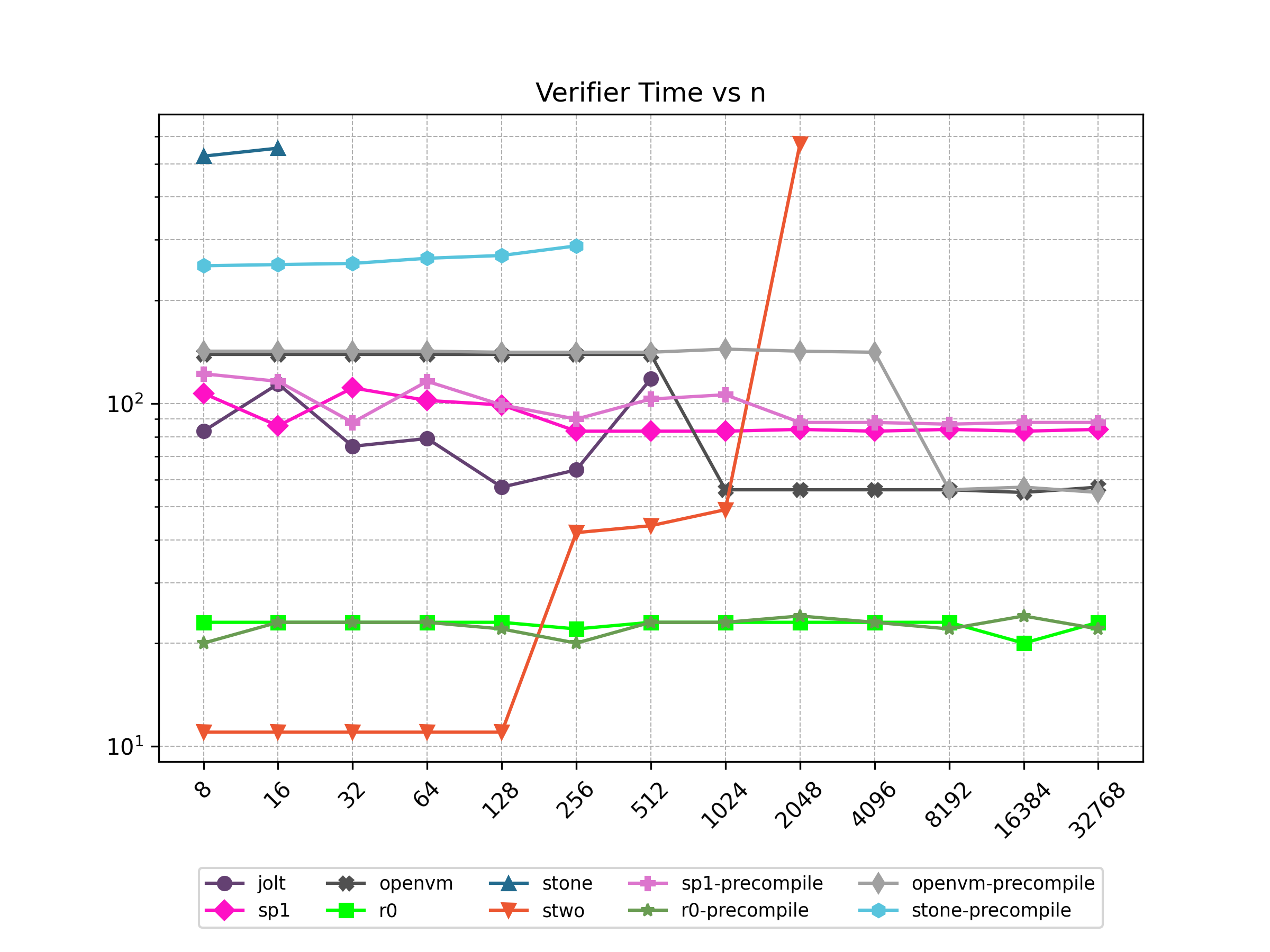

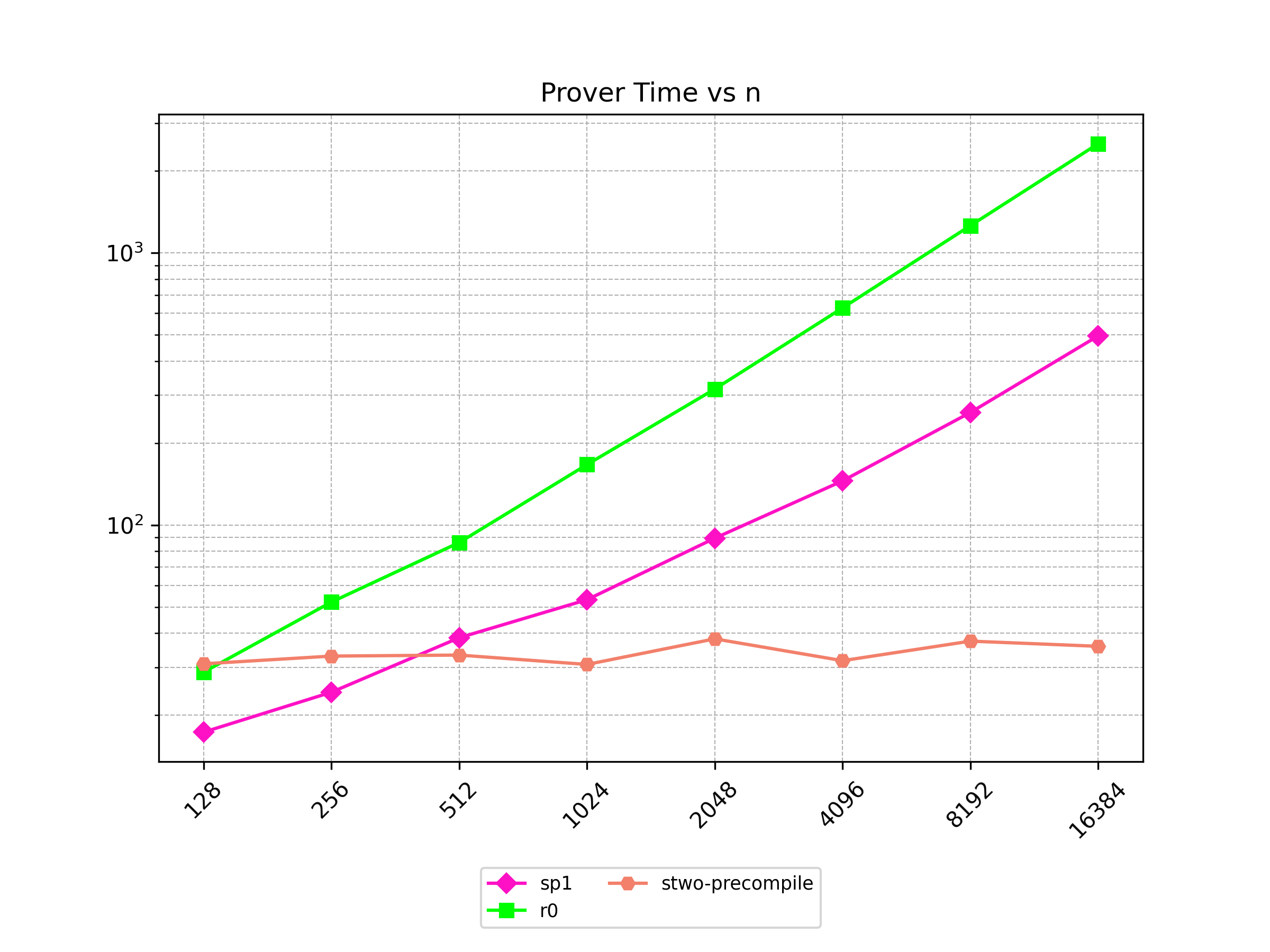

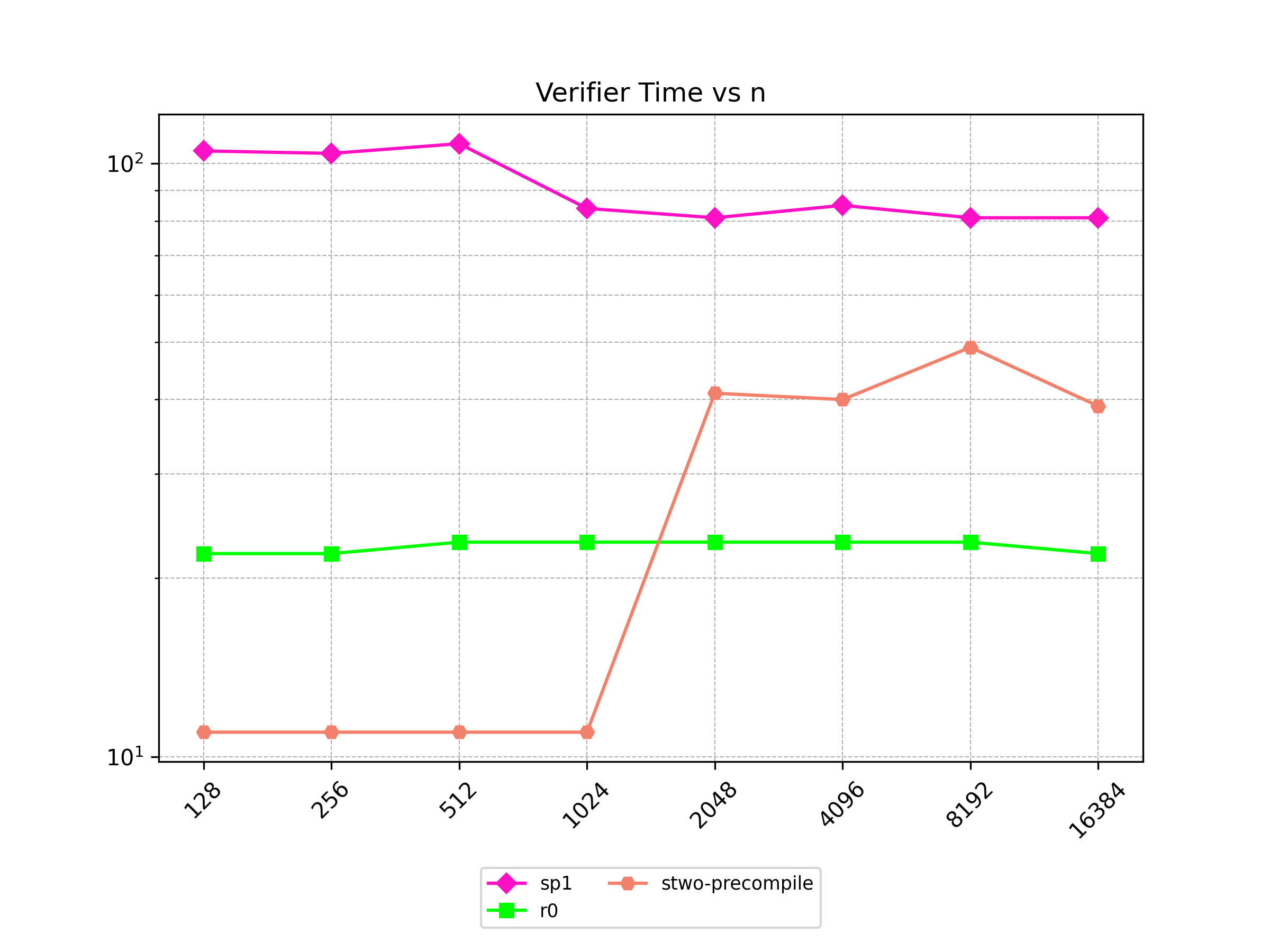

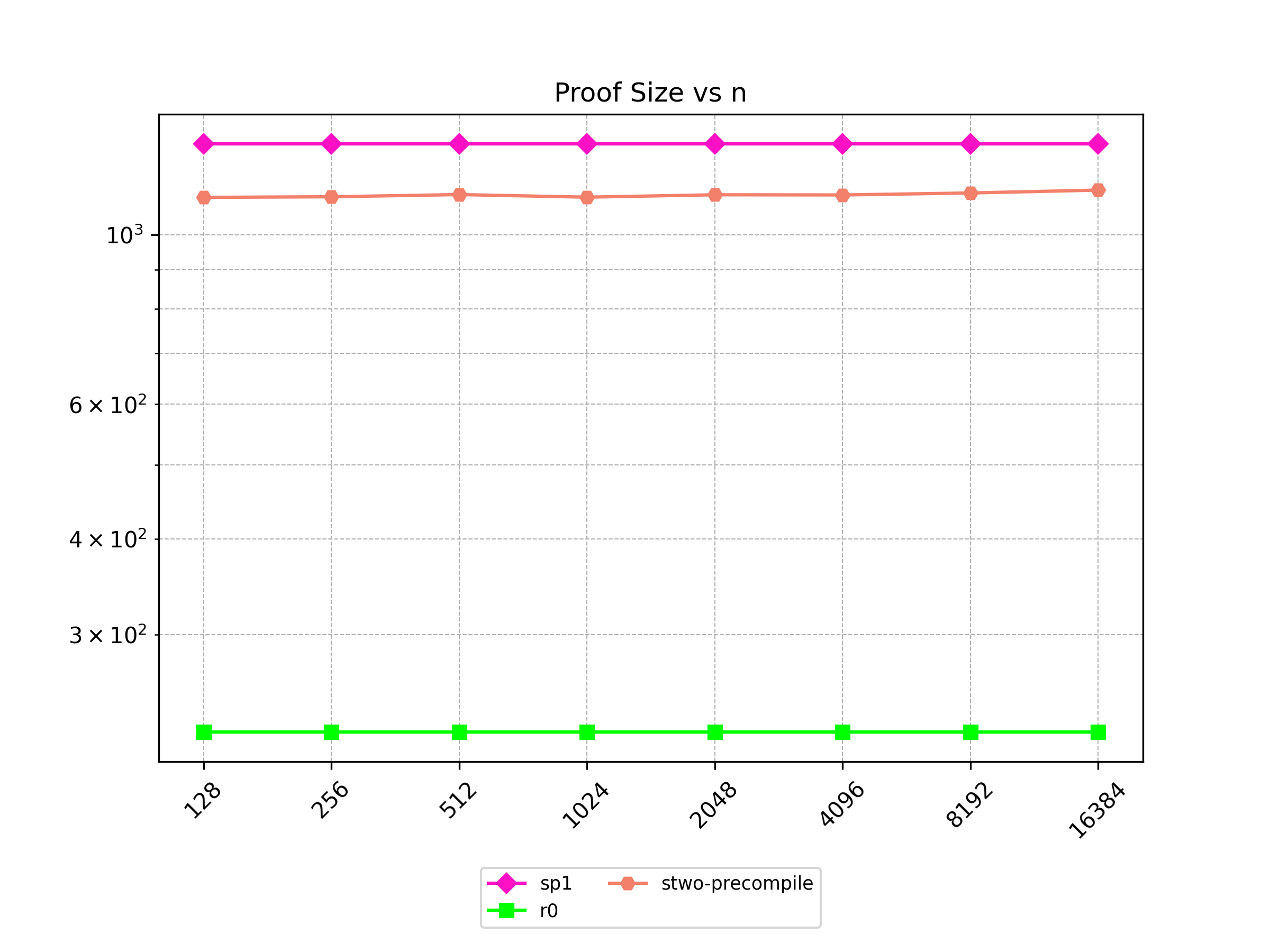

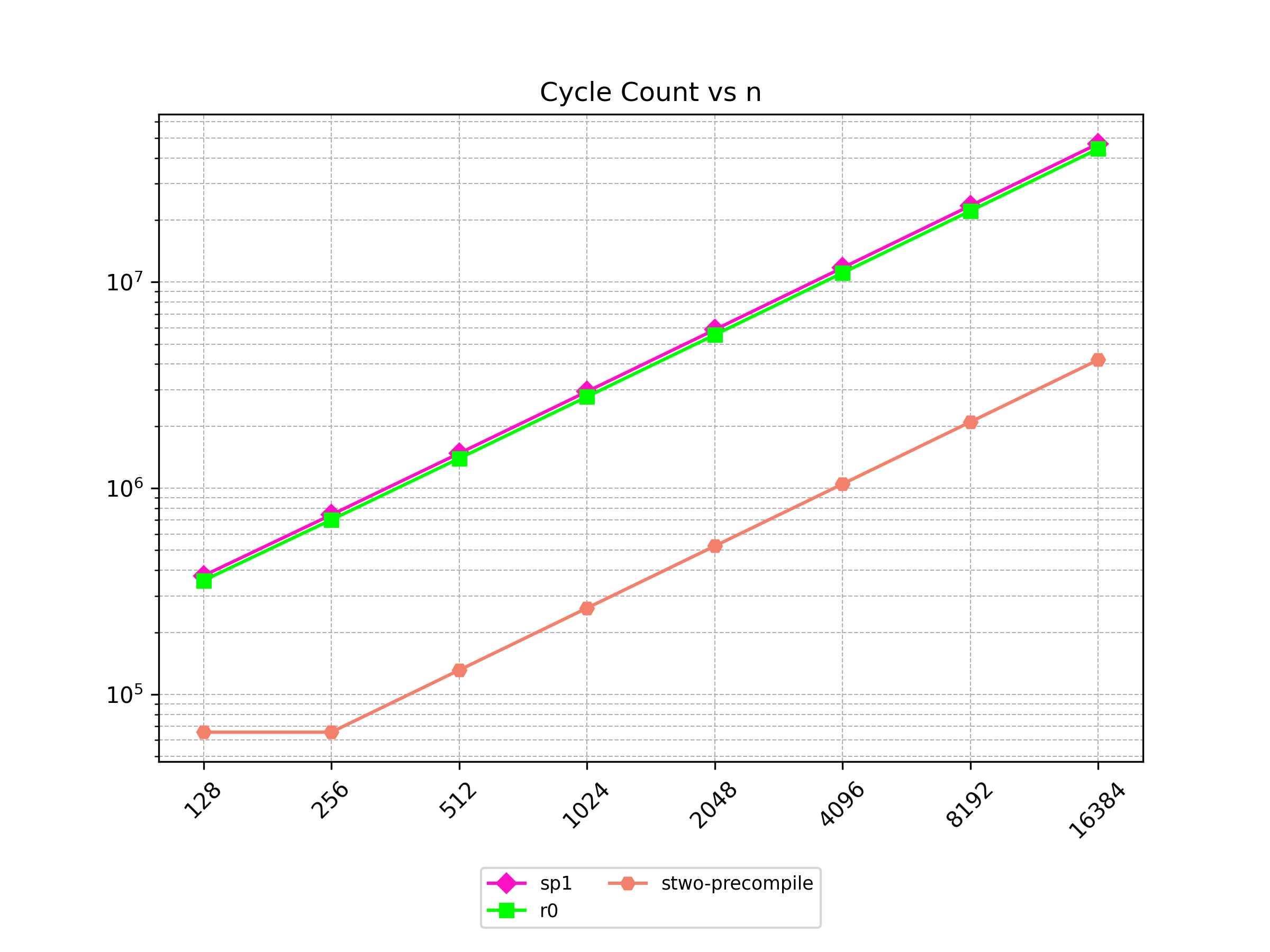

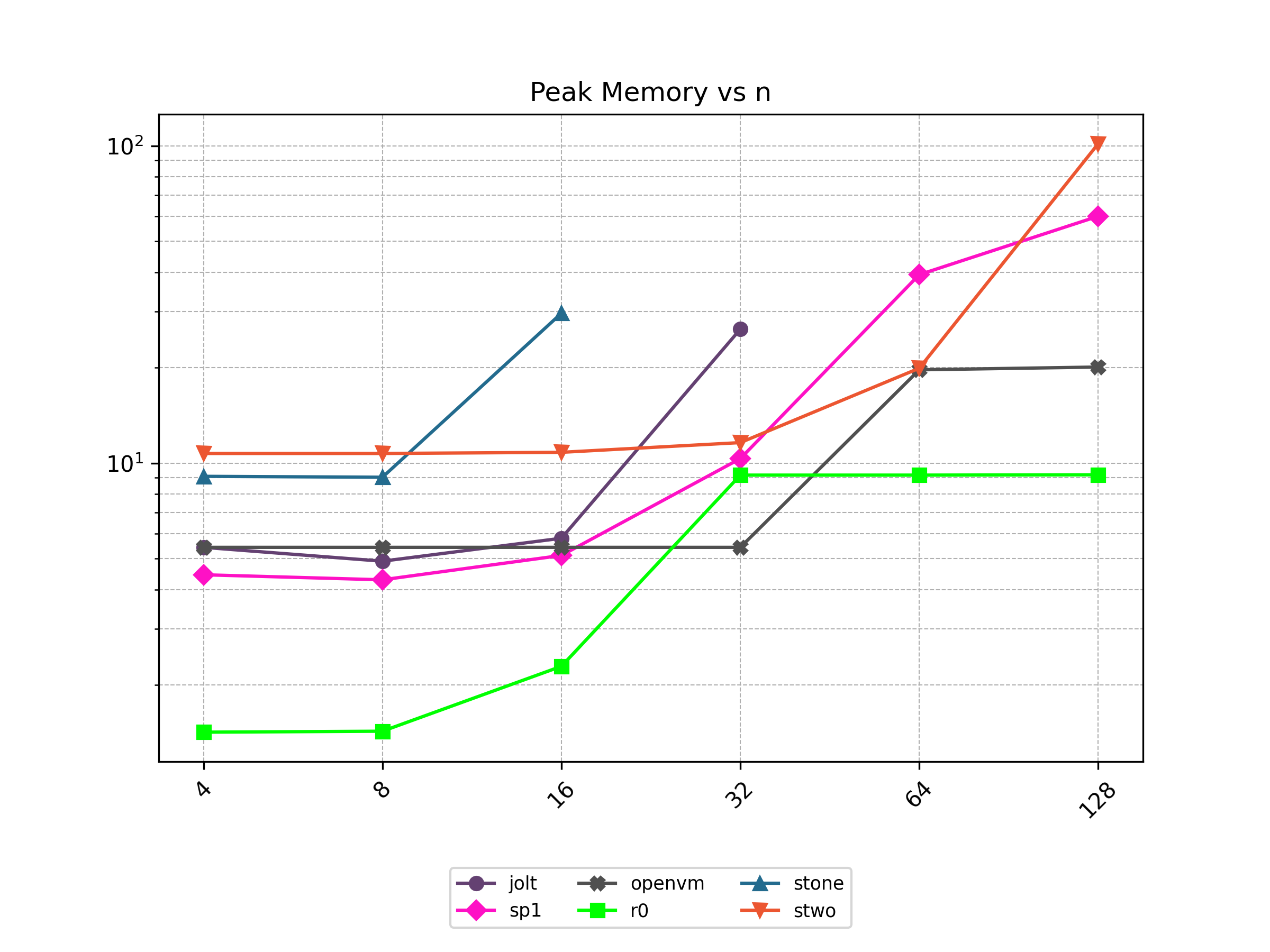

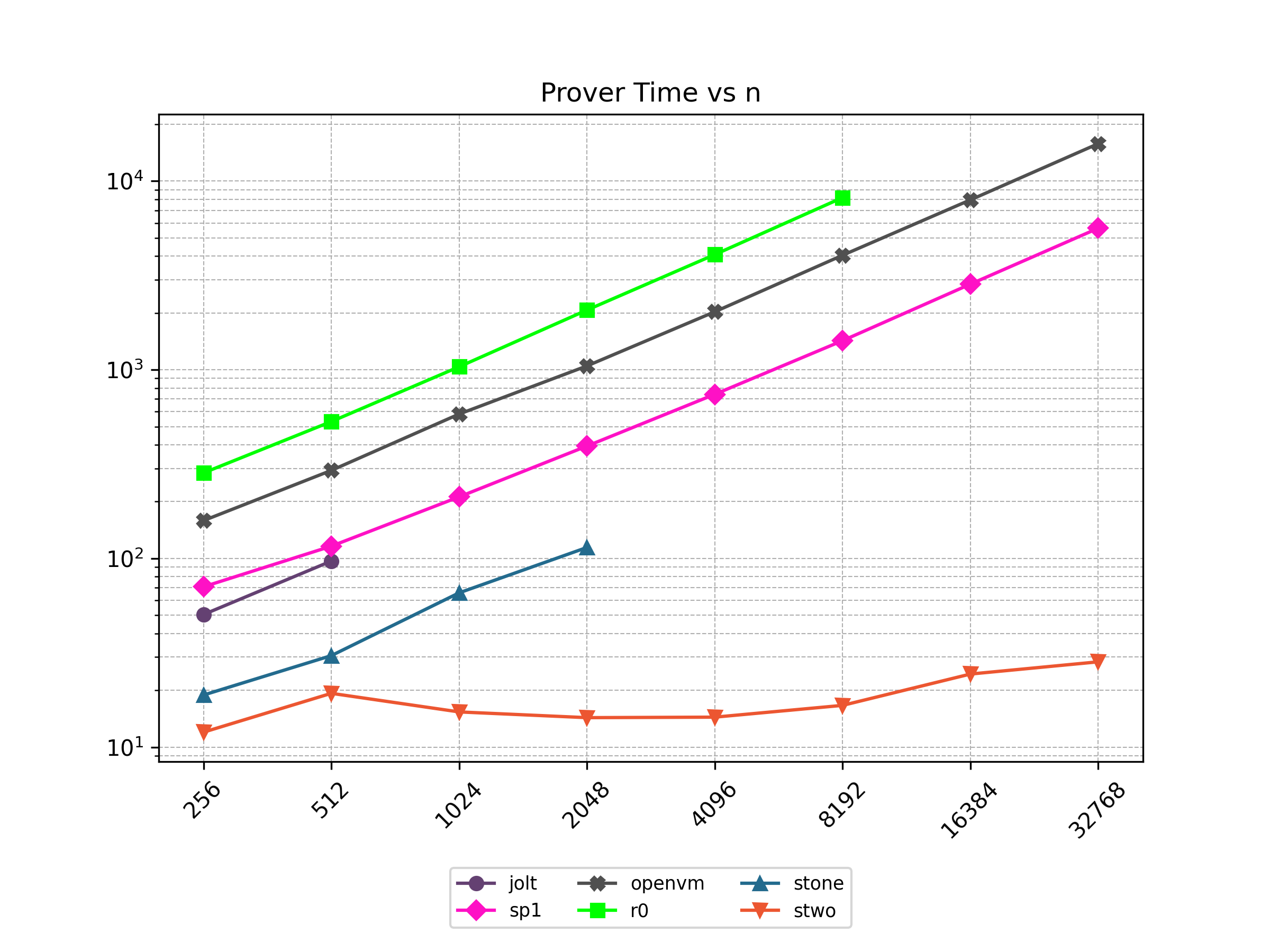

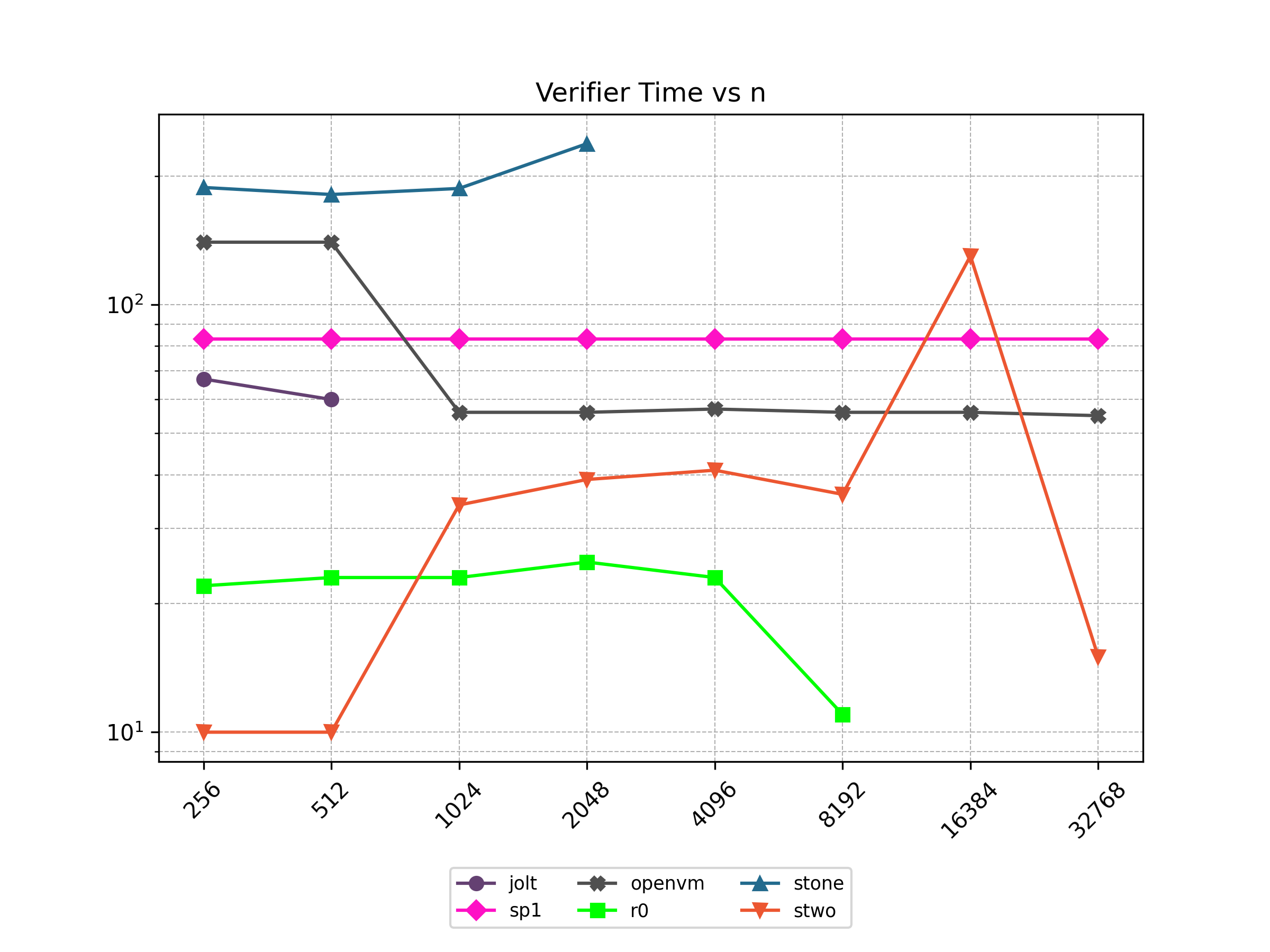

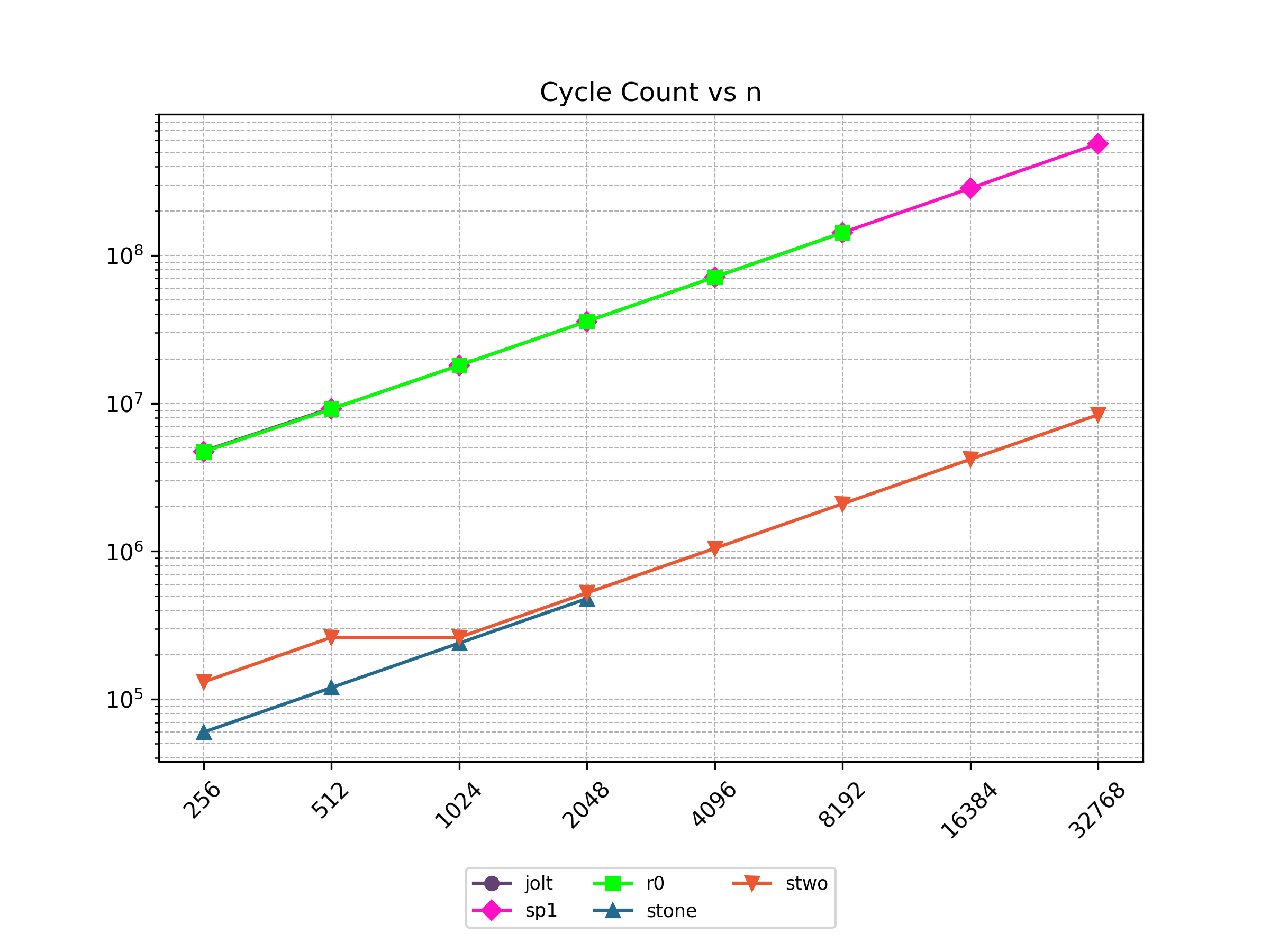

Finally, Stwo offers various CPU and GPU optimizations that improves prover performance as shown in Figure 1 below. It can also be compiled to WASM, allowing for fast proving in web environments.

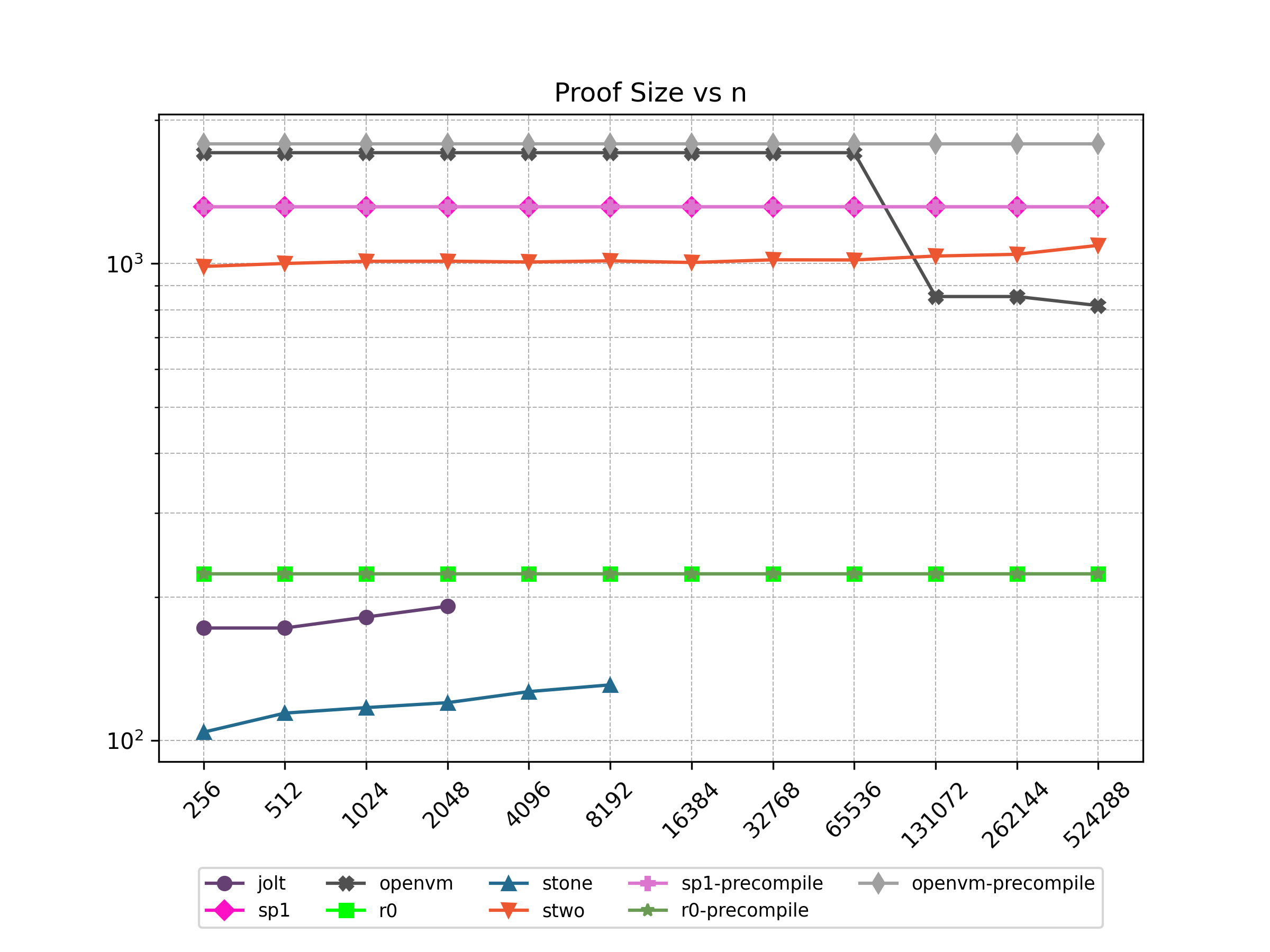

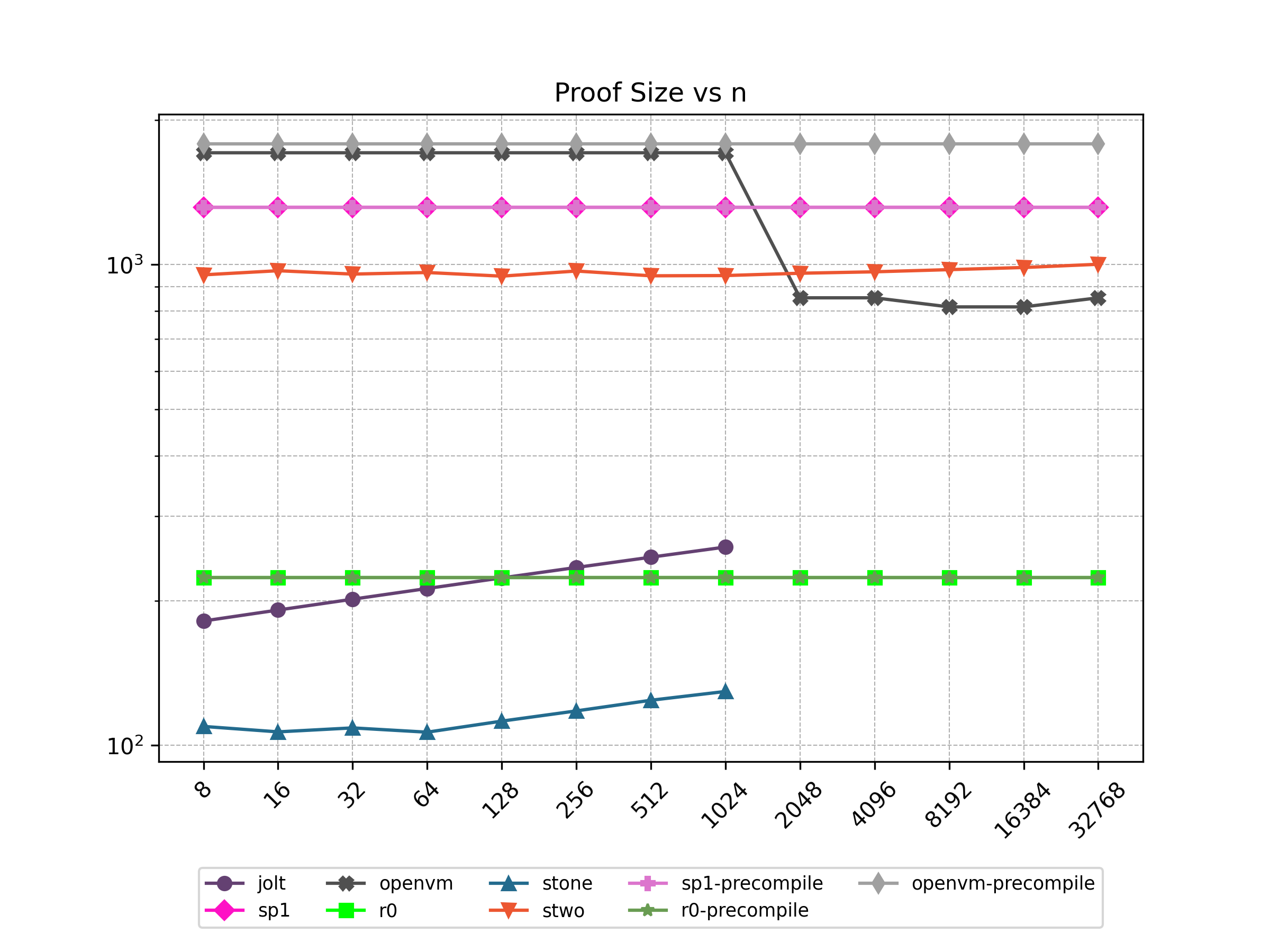

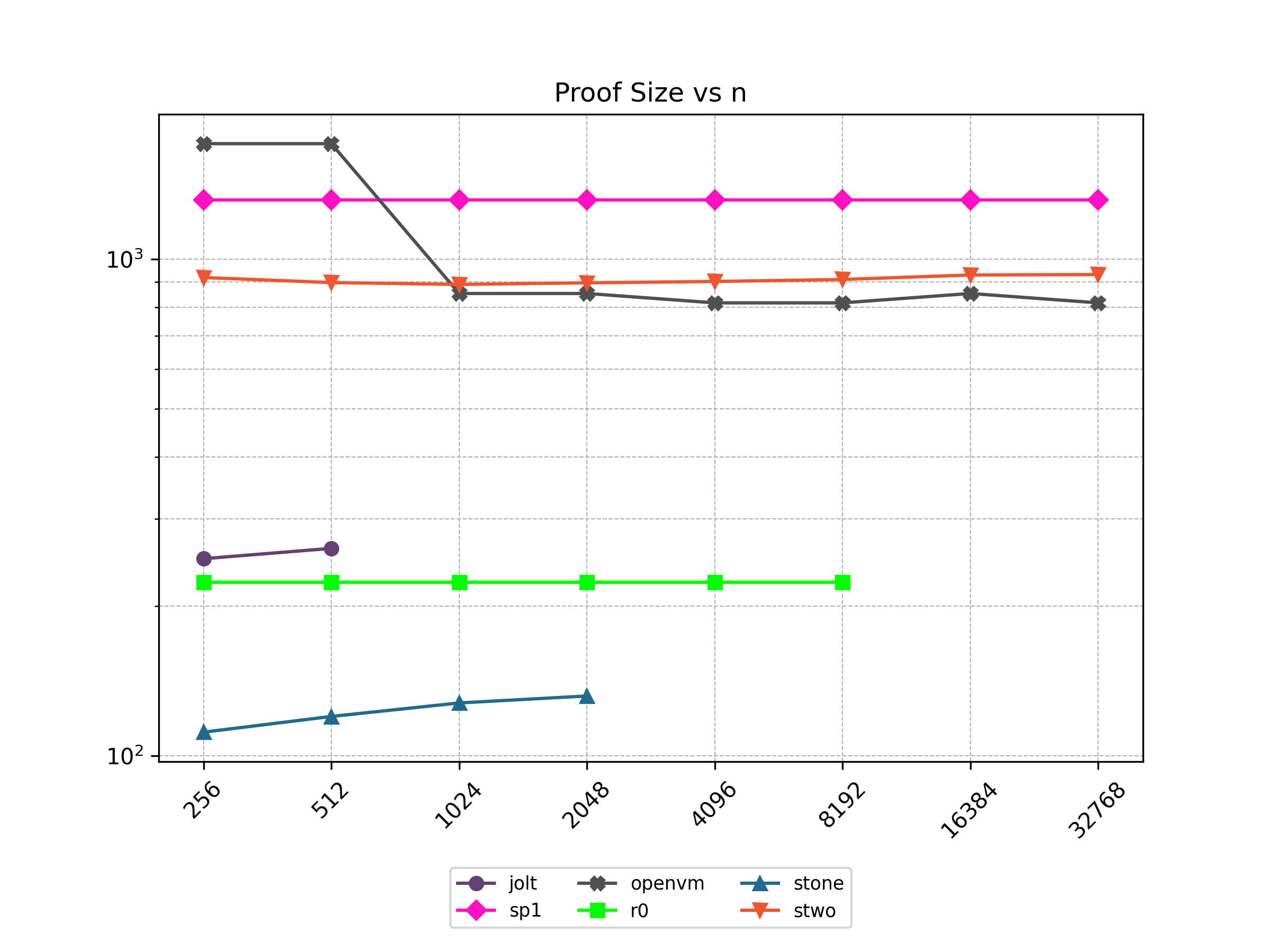

One of the drawbacks of STARKs is that they have a larger proof size compared to elliptic curve-based SNARKs. One way to mitigate this drawback is by batching multiple proofs together to form a single proof.

On zero-knowledge:

As of the time of this writing, Stwo does not provide the "zero-knowledge" feature. "Zero-knowledge" here refers to the fact that the proof should not reveal any additional information other than the validity of the statement, which is not true for Stwo as it reveals to the verifier commitments to its witness values without hiding them by e.g. adding randomness. This reveals some information about the witness values, which may be used in conjunction with other information to infer the witness values.

AIR Development

This section is intended for developers who want to create custom proofs using Stwo (proofs of custom VMs, ML inference, etc.). It assumes that the reader is familiar with Rust and has some background knowledge of cryptography (e.g. finite fields). It also assumes that the reader is familiar with the concept of proof systems and knows what they want to create proofs for, but it does not assume any prior experience with creating them.

All the code that appears throughout this section is available here.

First Breath of AIR

Welcome to the guide for writing AIRs in Stwo!

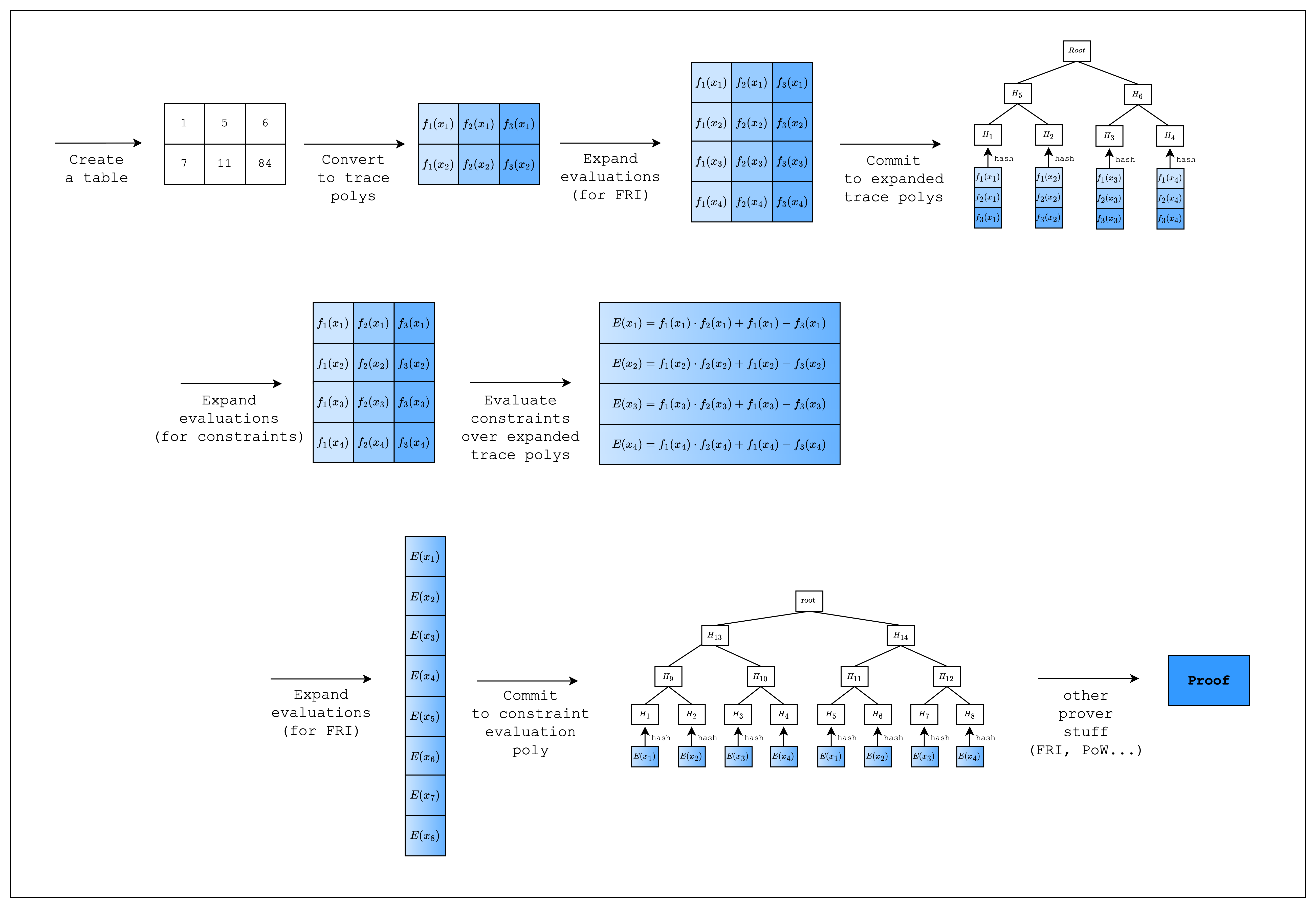

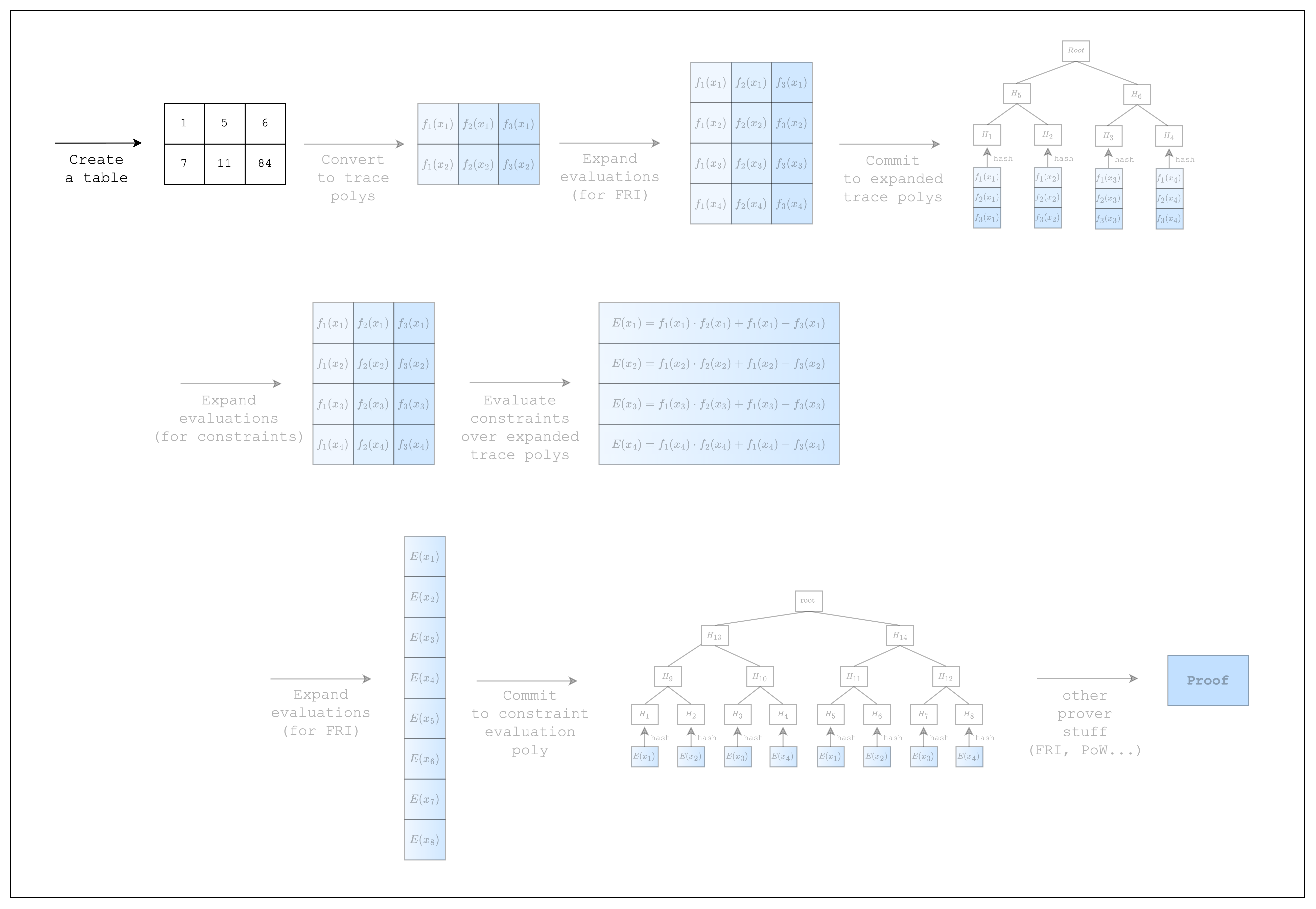

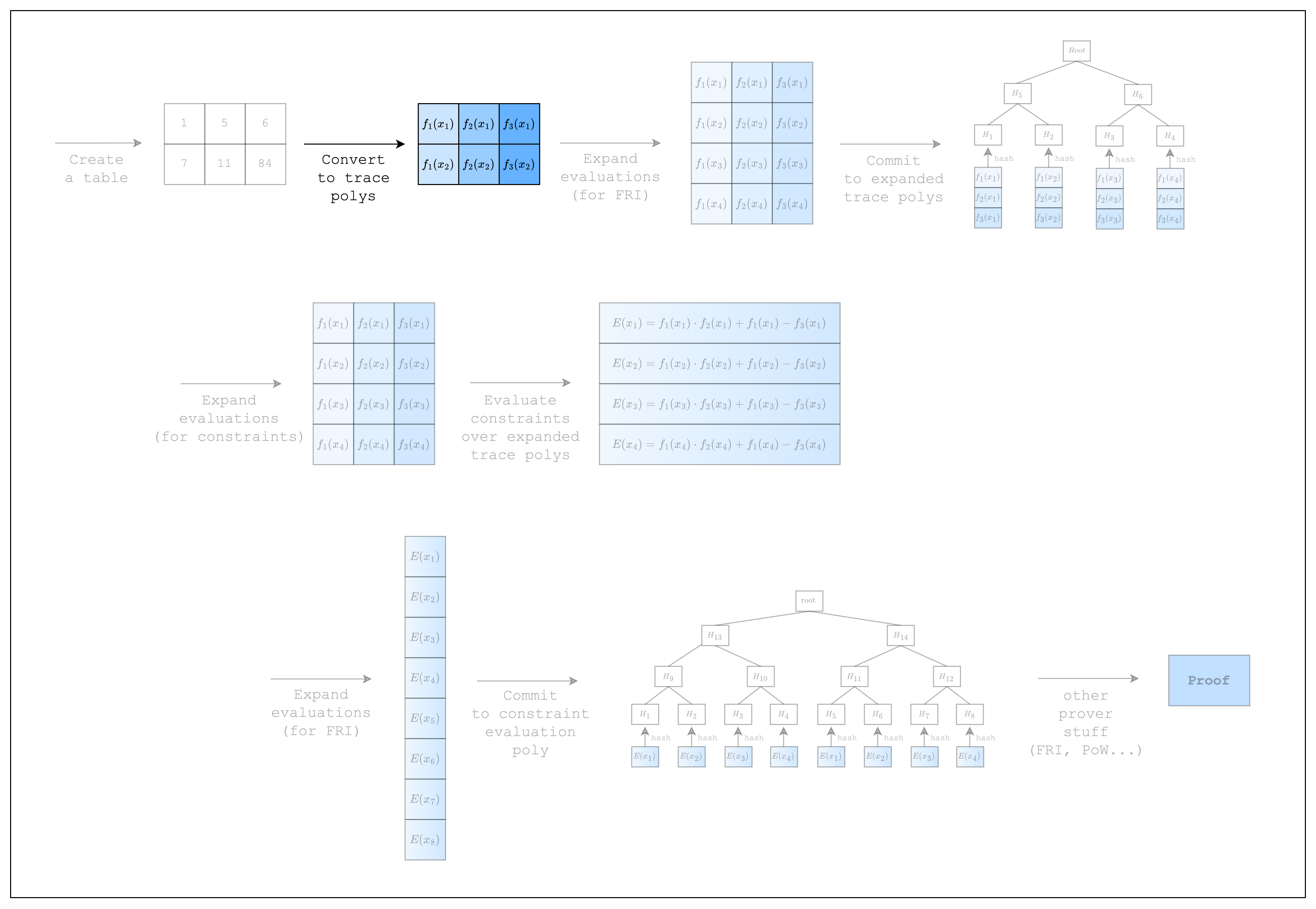

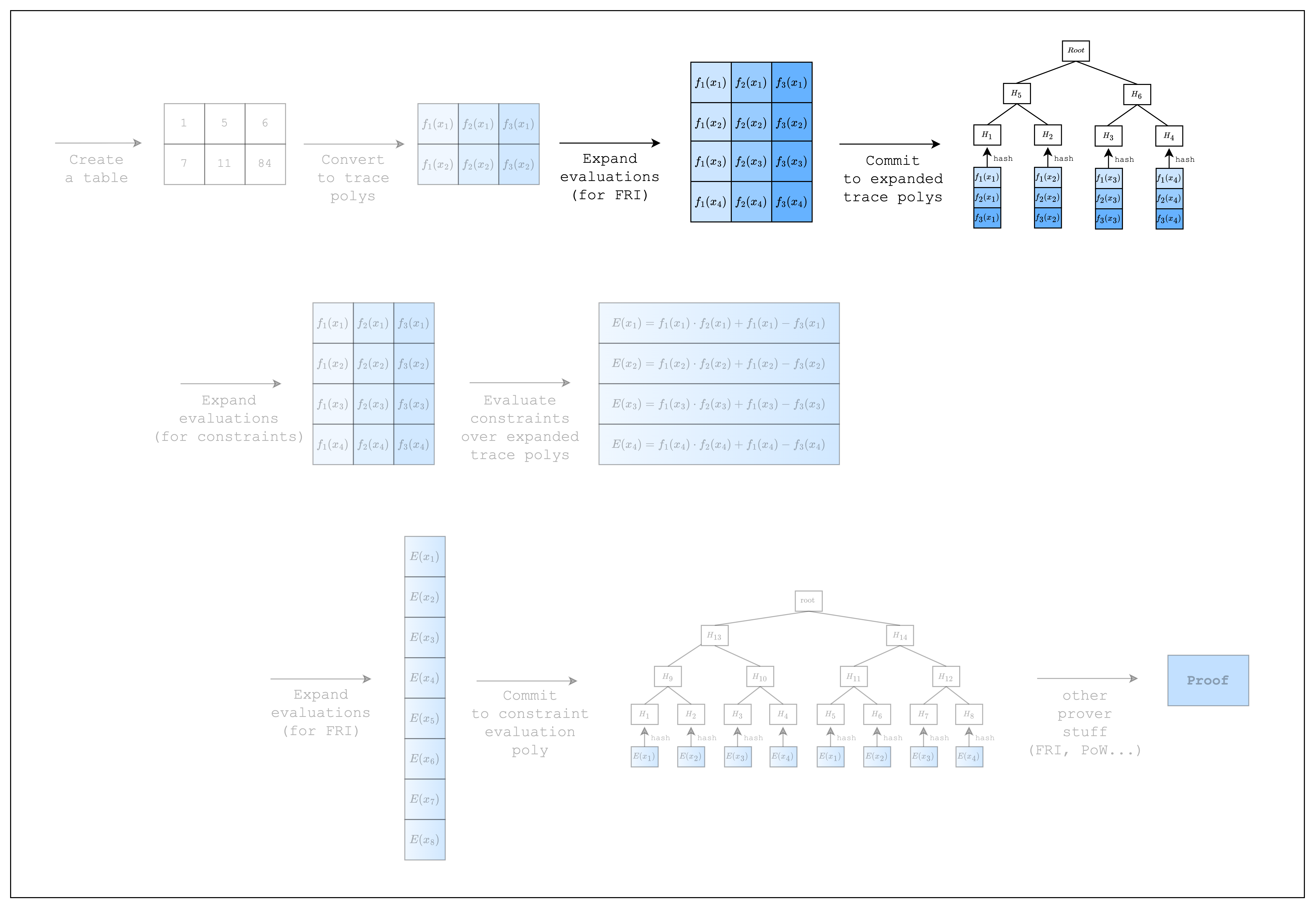

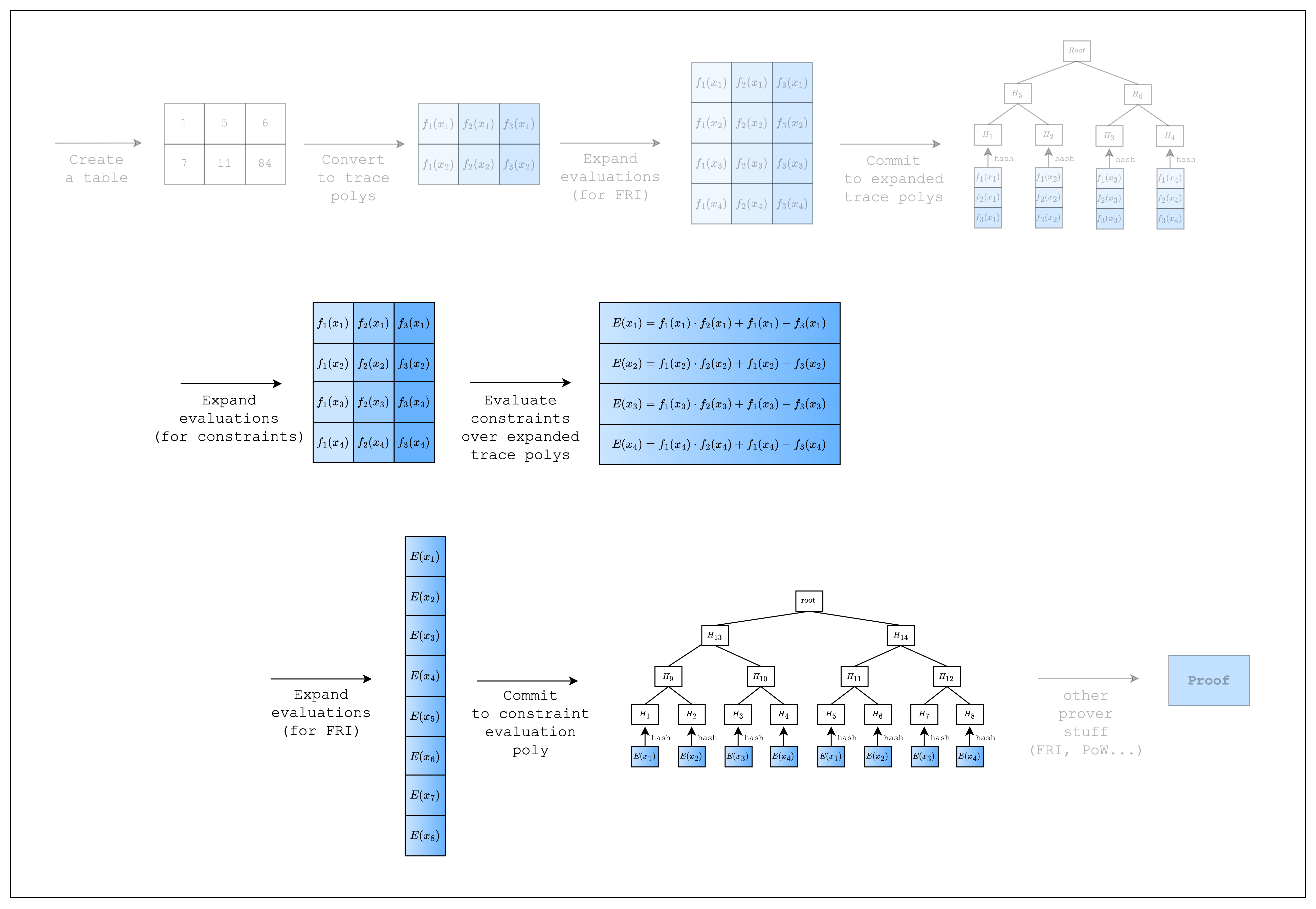

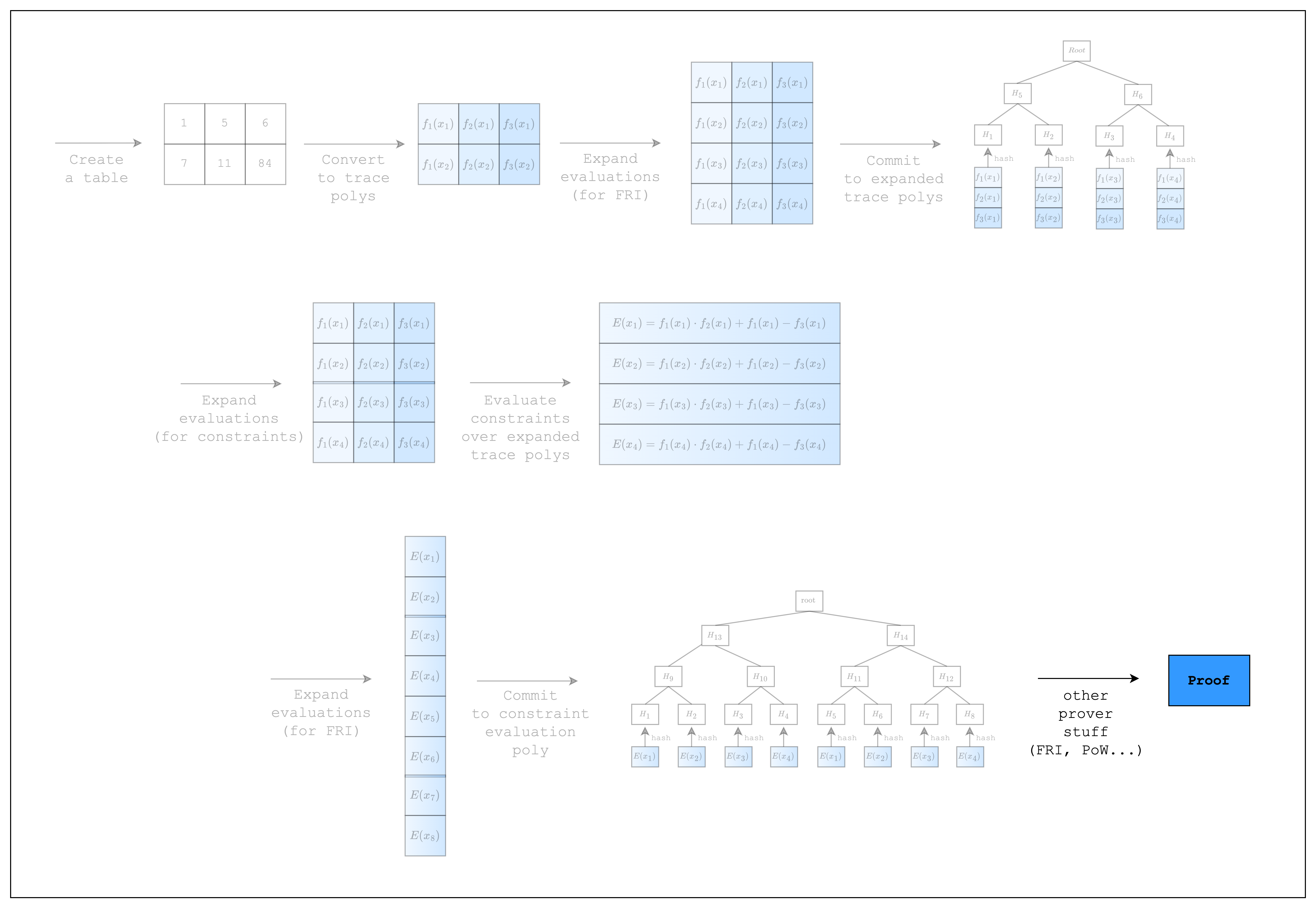

In this section, we will go through the process of writing a simple AIR from scratch. This requires some understanding of the proving lifecycle in Stwo, so we added a diagram showing a high-level overview of the whole process. As we go through each step, please note that the diagram may contain more steps than the code. This is because there are steps that are abstracted away by the Stwo implementation, but are necessary to understand the code that we write when creating an AIR.

Hello World

Let's first set up a Rust project with Stwo.

$ cargo new stwo-example

We need to specify the nightly Rust version to use Stwo.

$ echo -e "[toolchain]\nchannel = \"nightly-2025-01-02\"" > rust-toolchain.toml

Now let's edit the Cargo.toml file as follows:

[package]

name = "stwo-examples"

version = "0.1.0"

edition = "2021"

license = "MIT"

[dependencies]

stwo = { git = "https://github.com/starkware-libs/stwo.git", rev = "75a6b0ac9bcc7101d8658445dded51923ab2586f", features = ["prover"]}

stwo-constraint-framework = { git = "https://github.com/starkware-libs/stwo.git", rev = "75a6b0ac9bcc7101d8658445dded51923ab2586f", package = "stwo-constraint-framework", features = ["prover"] }

num-traits = "0.2.17"

itertools = "0.12.0"

rand = "0.8.5"We are all set!

Writing a Spreadsheet

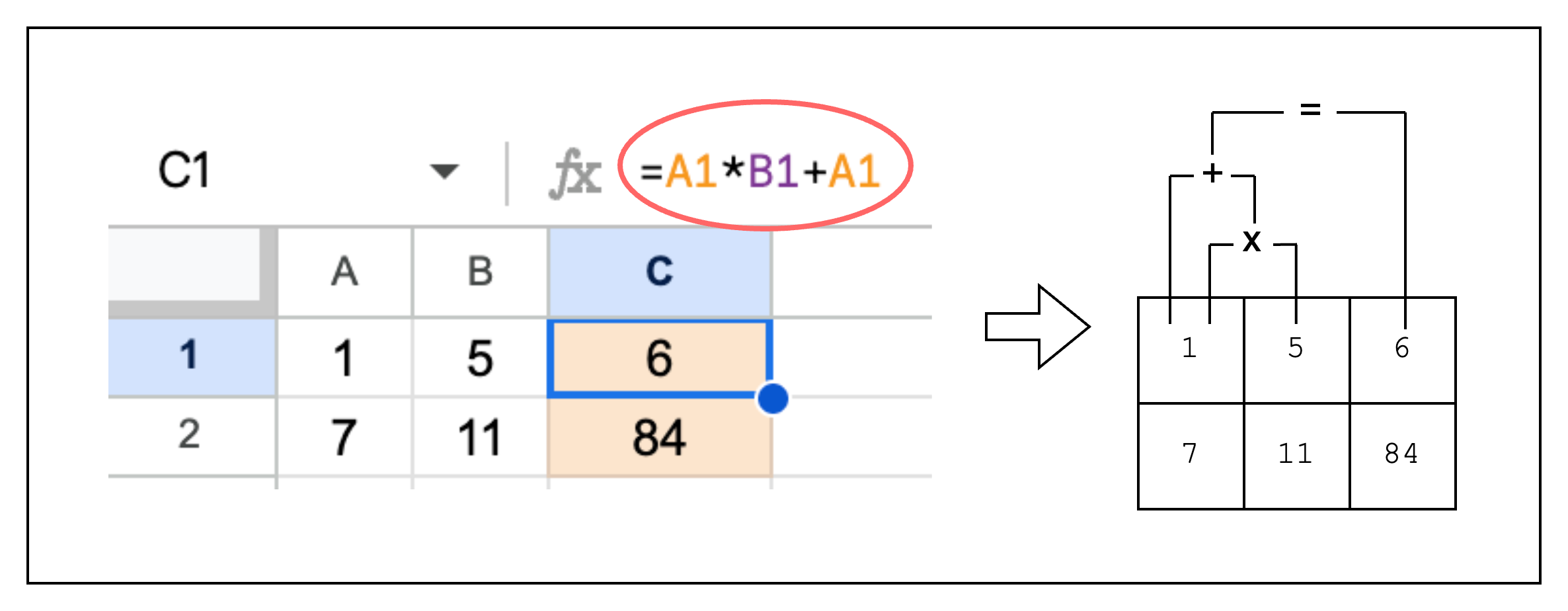

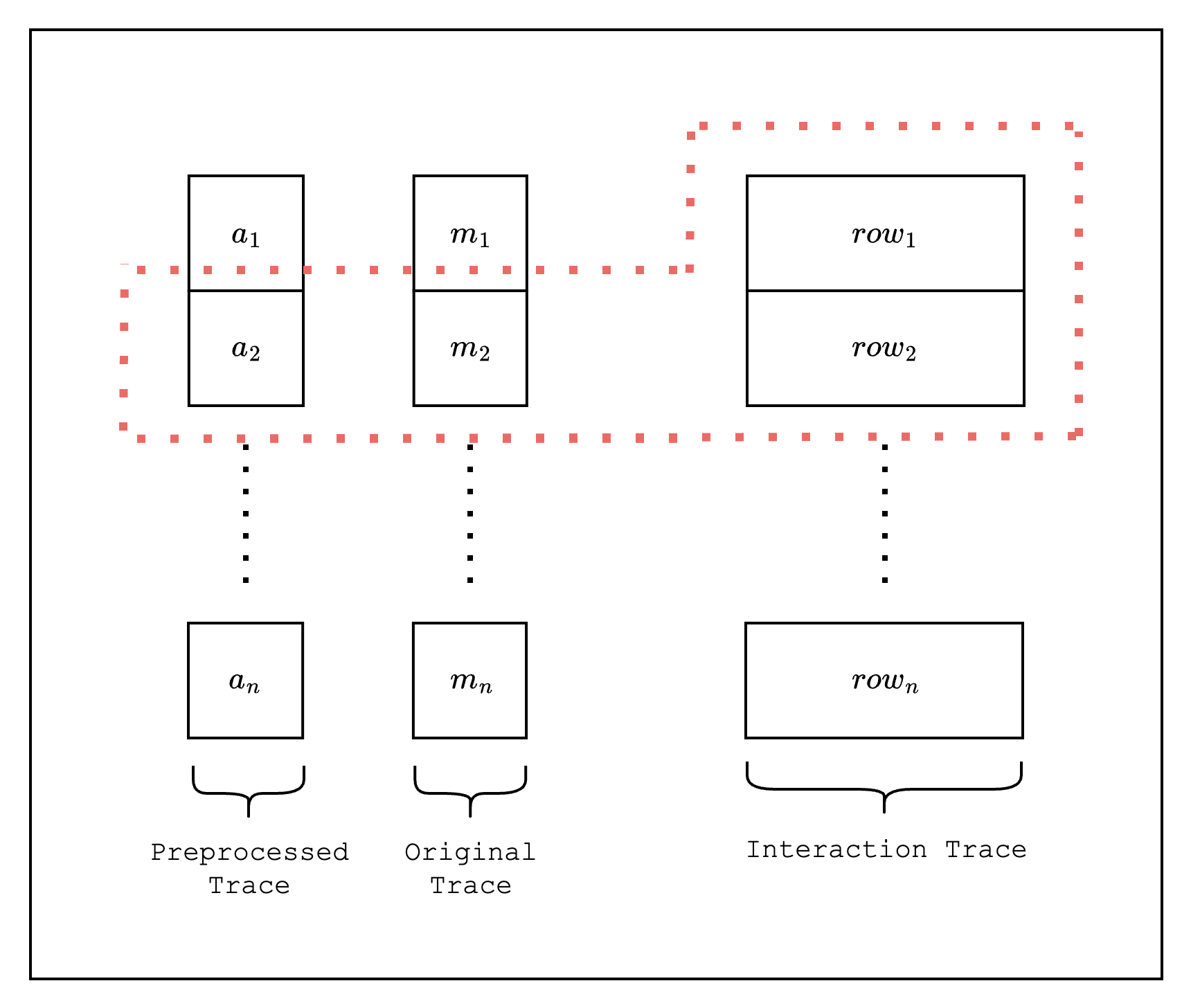

In order to write a proof, we first need to create a table of rows and columns. This is no different from writing integers to an Excel spreadsheet as we can see in Figure 2.

But there is a slight caveat to consider when creating the table. Stwo implements SIMD operations to speed up the prover in the CPU, but this requires providing the table cells in chunks of 16 rows. Simply put, this is because Stwo supports 16 lanes of 32-bit integers, which means that the same instruction can be run simultaneously for 16 different data.

Alas, for our table, we will need to create 14 dummy rows to make the total number of rows equal to 16, as shown in Figure 3. For the sake of simplicity, however, we will omit the dummy rows in the diagrams of the following sections.

Given all that, let's create this table using Stwo.

use stwo::prover::{

backend::{

simd::{column::BaseColumn, m31::N_LANES},

Column,

},

};

use stwo::core::fields::m31::M31;

fn main() {

let num_rows = N_LANES;

let mut col_1 = BaseColumn::zeros(num_rows as usize);

col_1.set(0, M31::from(1));

col_1.set(1, M31::from(7));

let mut col_2 = BaseColumn::zeros(num_rows as usize);

col_2.set(0, M31::from(5));

col_2.set(1, M31::from(11));

}As mentioned above, we instantiate the num_rows of our table as N_LANES=16 to accommodate SIMD operations. Then we create a BaseColumn of N_LANES=16 rows for each column and populate the first two rows with our values and the rest with dummy values.

Note that the values in the BaseColumn need to be of type M31, which refers to the Mersenne-31 prime field that Stwo uses. This means that the integers in the table must lie in the range .

Now that we have our table, let's move on!

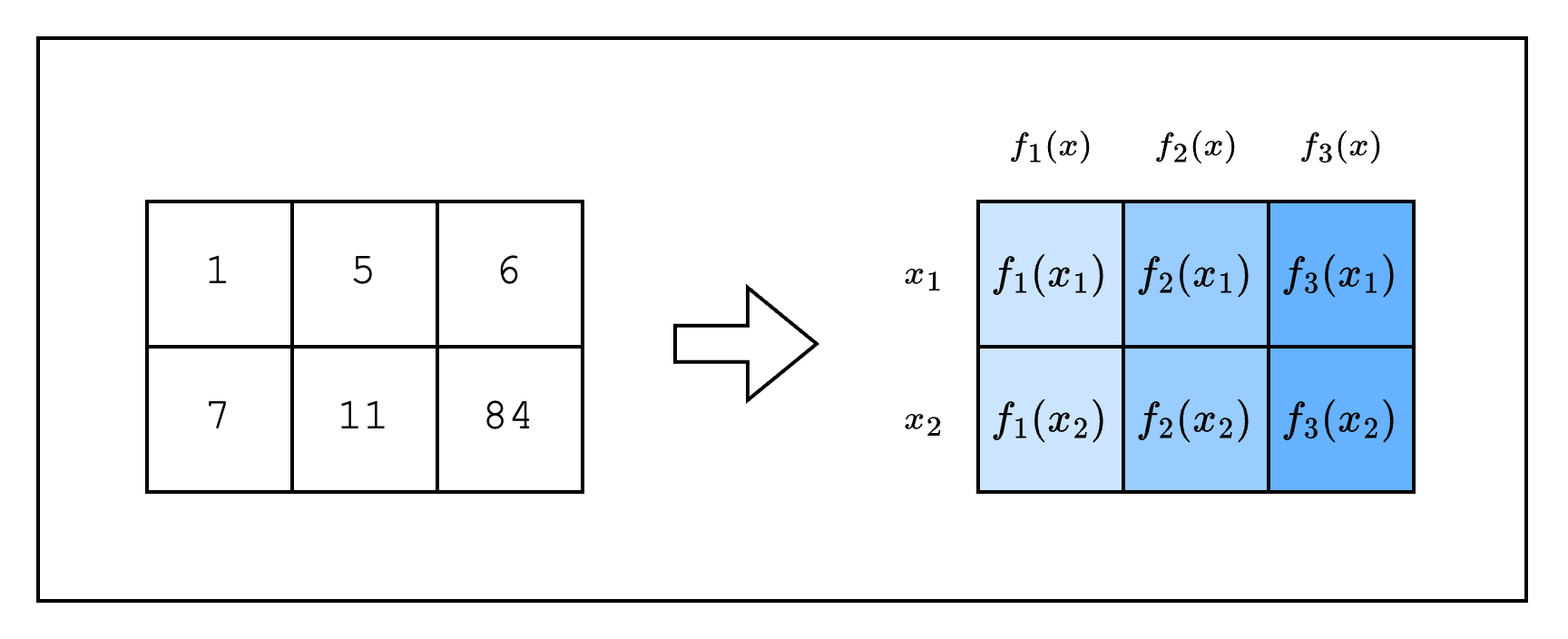

From Spreadsheet to Trace Polynomials

In the previous section, we created a table (aka spreadsheet). In this section, we will convert the table into something called trace polynomials.

In STARKs, the computation trace is represented as evaluations of a polynomial over some domain. Typically this domain is a coset of a multiplicative subgroup. But since the multiplicative subgroup of M31 is not smooth, Stwo works over the circle group which is the subgroup of degree-2 extension of M31 (as explained in the Mersenne Primes and Circle Group sections). Thus the domain in Stwo is formed of points on the circle curve. Note that when we interpolate a polynomial over the points on the circle curve, we get a bivariate trace polynomial .

We will explain why using a polynomial representation is useful in the next section, but for now, let's see how we can create trace polynomials for our code. Note that we are building upon the code from the previous section, so there's not much new code here.

fn main() {

// --snip--

// Convert table to trace polynomials

let domain = CanonicCoset::new(log_num_rows).circle_domain();

let _trace: ColumnVec<CircleEvaluation<SimdBackend, M31, BitReversedOrder>> =

vec![col_1, col_2]

.into_iter()

.map(|col| CircleEvaluation::new(domain, col))

.collect();

}Here, domain refers to the values used to interpolate the trace polynomials. For example, in Figure 2 are the domain values for our example. Note that when creating the domain, we set the log_num_rows to the log of the actual number of rows that are used in the table. In our example, we set it to 4 since Stwo requires that we use at least 16 rows. For a background on what CanonicCoset and .circle_domain() mean, you can refer to the Circle Group section.

Now that we have created 2 trace polynomials for our 2 columns, let's move on to the next section where we commit to those polynomials!

Committing to the Trace Polynomials

Now that we have created the trace polynomials, we need to commit to them.

As we can see in Figure 1, Stwo commits to the trace polynomials by first expanding the trace polynomials (i.e. adding more evaluations) and then committing to the expanded evaluations using a Merkle tree. The rate of expansion (commonly referred to as the blowup factor) is a parameter of the FRI protocol and for the purposes of this tutorial, we will use the default value.

const LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR: u32 = 1;

fn main() {

// --snip--

// Config for FRI and PoW

let config = PcsConfig::default();

// Precompute twiddles for evaluating and interpolating the trace

let twiddles = SimdBackend::precompute_twiddles(

CanonicCoset::new(

log_num_rows + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR + config.fri_config.log_blowup_factor,

)

.circle_domain()

.half_coset,

);

// Create the channel and commitment scheme

let channel = &mut Blake2sChannel::default();

let mut commitment_scheme =

CommitmentSchemeProver::<SimdBackend, Blake2sMerkleChannel>::new(config, &twiddles);

// Commit to the preprocessed trace

let mut tree_builder = commitment_scheme.tree_builder();

tree_builder.extend_evals(vec![]);

tree_builder.commit(channel);

// Commit to the size of the trace

channel.mix_u64(log_num_rows as u64);

// Commit to the original trace

let mut tree_builder = commitment_scheme.tree_builder();

tree_builder.extend_evals(trace);

tree_builder.commit(channel);

}We begin with some setup. First, we create a default PcsConfig instance, which sets the values for the FRI and PoW operations. Setting non-default values is related to the security of the proof, which is outside the scope of this tutorial.

Next, we precompute twiddles, which are factors multiplied during FFT for a particular domain. Notice that the log size of the domain is set to log_num_rows + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR + config.fri_config.log_blowup_factor, which is the max log size of the domain that is needed throughout the proving process. For committing to the trace polynomial, we only need to add config.fri_config.log_blowup_factor but as we will see in the next section, we also need to commit to a polynomial of a higher degree, which is the reason we also add LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR.

The final setup is creating a commitment scheme and a channel. The commitment scheme will be used to commit to the trace polynomials as Merkle trees, while the channel will be used to keep a running hash of all data in the proving process (i.e. transcript of the proof). This is part of the Fiat-Shamir transformation, which derives randomness safely in a non-interactive setting. Here, we use the Blake2sChannel and Blake2sMerkleChannel for the channel and commitment scheme, respectively, but we can also use the Poseidon252Channel and Poseidon252MerkleChannel pair.

Now that we have our setup, we can commit to the trace polynomials. But before we do so, we need to first commit to an empty vector called a preprocessed trace, which doesn't do anything but is required by Stwo. Then, we need to commit to the size of the trace, which is another vital part that the prover should not be able to cheat on. After doing so, we can finally commit to the original trace polynomials.

Now that we have committed to the trace polynomials, we can move on to how we can create constraints over the trace polynomials!

Evaluating Constraints Over Trace Polynomials

Proving Spreadsheet Functions

When we want to perform computations over the cells in a spreadsheet, we don't want to manually fill in the computed values. Instead, we leverage spreadsheet functions to autofill cells based on a given computation.

We can do the same thing with our table, except in addition to autofilling cells, we can also create a constraint that the result was computed correctly. Remember that the purpose of using a proof system is that the verifier can verify a computation was executed correctly without having to execute it themselves? Well, that's exactly why we need to create a constraint.

Now let's say we want to add a new column C to our spreadsheet that computes the product of the previous columns plus the first column. We can set C1 as A1 * B1 + A1 as in Figure 2. The corresponding constraint is expressed as C1 = A1 * B1 + A1. However, we use an alternate representation A1 * B1 + A1 - C1 = 0 because we can only enforce constraints stating that an expression should equal zero. Generalizing this constraint to the whole column, we get col1_row1 * col2_row1 + col1_row1 - col3_row1 = 0.

Identical Constraints Every Row

Obviously, as can be seen in Figure 2, our new constraint is satisfied for every row in the table. This means that we can substitute creating a constraint for each row with a single constraint over the columns, i.e. the trace polynomials.

Thus, col1_row1 * col2_row1 + col1_row1 - col3_row1 = 0 becomes:

The idea that all rows must have the same constraint may seem restrictive, compared to say a spreadsheet where we can define different functions for different rows. However, we will show in later sections how to handle such use-cases.

(Spoiler alert: it involves selectors and components)

Composition Polynomial

We will now give a name to the polynomial that expresses the constraint: a composition polynomial.

Basically, in order to prove that the constraints are satisfied, we need to show that the composition polynomial evaluates to 0 over the original domain (i.e. the domain of size the number of rows in the table).

But first, as can be seen in the upper part of Figure 1, we need to expand the evaluations of the trace polynomials by a factor of 2. This is because when you multiply two trace polynomials of degree n-1 (where n is the number of rows) to compute the constraint polynomial, the degree of the constraint polynomial will be the sum of the degrees of the trace polynomials, which is 2n-2. To adjust for this increase in degree, we double the number of evaluations.

Once we have the expanded evaluations, we can evaluate the composition polynomial . Since we need to do a FRI operation on the composition polynomial as well, we expand the evaluations again by a factor of 2 and commit to them as a merkle tree. This part corresponds to the bottom part of Figure 1.

Implementation

Let's see how this is implemented in the code.

struct TestEval {

log_size: u32,

}

impl FrameworkEval for TestEval {

fn log_size(&self) -> u32 {

self.log_size

}

fn max_constraint_log_degree_bound(&self) -> u32 {

self.log_size + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR

}

fn evaluate<E: EvalAtRow>(&self, mut eval: E) -> E {

let col_1 = eval.next_trace_mask();

let col_2 = eval.next_trace_mask();

let col_3 = eval.next_trace_mask();

eval.add_constraint(col_1.clone() * col_2.clone() + col_1.clone() - col_3.clone());

eval

}

}

fn main() {

// --snip--

let mut col_3 = BaseColumn::zeros(num_rows);

col_3.set(0, col_1.at(0) * col_2.at(0) + col_1.at(0));

col_3.set(1, col_1.at(1) * col_2.at(1) + col_1.at(1));

// Convert table to trace polynomials

let domain = CanonicCoset::new(log_num_rows).circle_domain();

let trace: ColumnVec<CircleEvaluation<SimdBackend, M31, BitReversedOrder>> =

vec![col_1, col_2, col_3]

.into_iter()

.map(|col| CircleEvaluation::new(domain, col))

.collect();

// --snip--

// Create a component

let _component = FrameworkComponent::<TestEval>::new(

&mut TraceLocationAllocator::default(),

TestEval {

log_size: log_num_rows,

},

QM31::zero(),

);

}First, we add a new column col_3 that contains the result of the computation: col_1 * col_2 + col_1. Note that all the columns are padded with 0 to a length of 16 via BaseColumn::zeros(num_rows) and we got lucky because this satisfies our constraint (i.e. 0 * 0 + 0 - 0 = 0), so we don't need to modify the constraint.

Then, to create a constraint over the trace polynomials, we first create a TestEval struct that implements the FrameworkEval trait. Then, we add our constraint logic in the FrameworkEval::evaluate function. Note that this function is called for every row in the table, so we only need to define the constraint once.

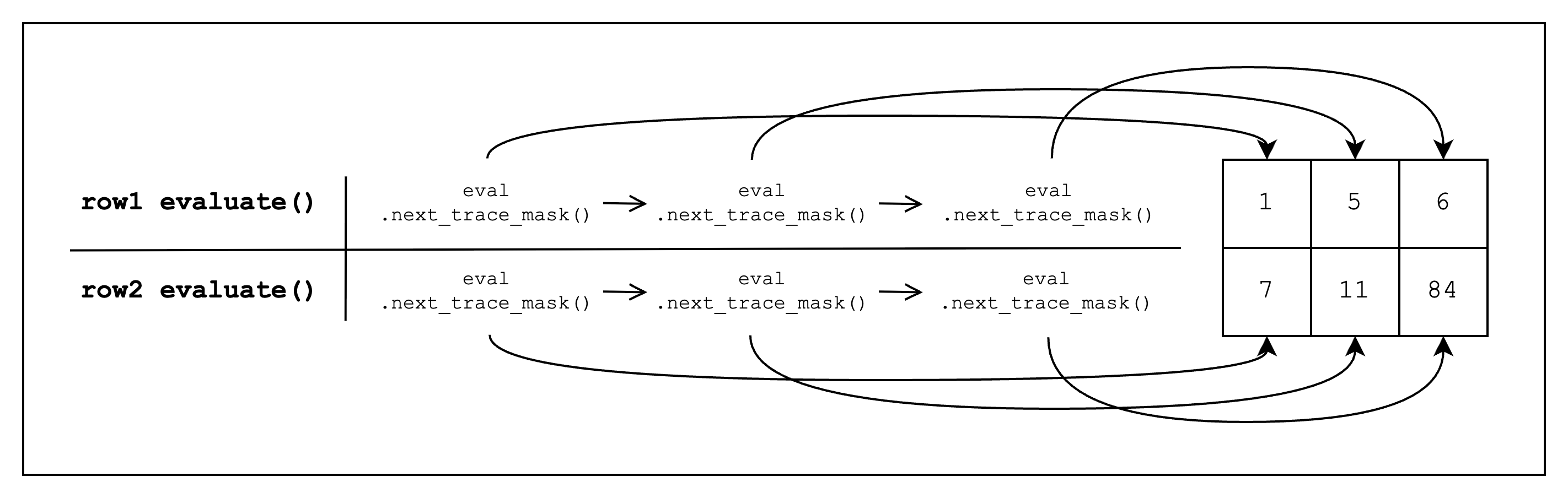

Inside FrameworkEval::evaluate, we call eval.next_trace_mask() consecutively three times, retrieving the cell values of all three columns (see Figure 3 below for a visual representation). Once we retrieve all three column values, we add a constraint of the form col_1 * col_2 + col_1 - col_3, which should equal 0. Note that FrameworkEval::evaluate will be called for every row in the table.

We also need to implement FrameworkEval::max_constraint_log_degree_bound(&self) for FrameworkEval. As mentioned in the Composition Polynomial section, we need to expand the trace polynomial evaluations because the degree of our composition polynomial is higher than that of the trace polynomial. Expanding it by LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR=1 is sufficient for our example as the total degree of the highest degree term is 2, so we return self.log_size + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR. For those who are interested in how to set this value in general, we leave a detailed note below.

What value to set for max_constraint_log_degree_bound(&self)?

self.log_size + max(1, ceil(log2(max_degree - 1))), where max_degree is the maximum total degree of all defined constraint polynomials. For example, the max_degree of constraint is 2, while that of is 4.

e.g.

- degree 1 - 3:

self.log_size + 1 - degree 4 - 5:

self.log_size + 2 - degree 6 - 9:

self.log_size + 3 - degree 10 - 17:

self.log_size + 4 - ...

Now that we know the degree of the composition polynomial, we can see why we need to set the log_size of the domain to log_num_rows + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR + config.fri_config.log_blowup_factor when precomputing twiddles in the following code:

// Precompute twiddles for evaluating and interpolating the trace

let twiddles = SimdBackend::precompute_twiddles(

CanonicCoset::new(

log_num_rows + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR + config.fri_config.log_blowup_factor,

)

.circle_domain()

.half_coset,

);To prove that the composition polynomial evaluates to 0 over the trace domain (since the composition polynomial is composed of constraints that evaluates to 0 over the trace domain), we first divide the composition polynomial by the vanishing polynomial, which is a polynomial that evaluates to 0 over the trace domain. If the composition polynomial is created correctly, this will result in a polynomial instead of a rational function, and we can perform FRI over this polynomial to prove this.

Thus, we need to commit to this new polynomial, which is called the quotient polynomial. We can calculate its degree by subtracting the degree of the vanishing polynomial from the degree of the composition polynomial. Since the trace is of size 1 << log_num_rows, the degree of the vanishing polynomial will be 1 << log_num_rows - 1, so the resulting degree will be 1 << (log_num_rows + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR) - (1 << log_num_rows - 1). However, since we can only commit to a power of two degree, we can just use the 1 << (log_num_rows + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR) value.

If we apply the FRI blowup as well, we finally end up with the following log domain size: log_num_rows + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR + config.fri_config.log_blowup_factor.

Using the new TestEval struct, we can create a new FrameworkComponent::<TestEval> component, which the prover will use to evaluate the constraint. For now, we can ignore the other parameters of the FrameworkComponent::<TestEval> constructor.

We now move on to the final section where we finally create and verify a proof.

Finally, we can break down what an Algebraic Intermediate Representation (AIR) means.

Algebraic means that we are using polynomials to represent the constraints.

Intermediate Representation means that this is a modified representation of our statement so that it can be proven.

So AIR is just another way of saying that we are representing statements to be proven as constraints over polynomials.

Proving and Verifying an AIR

We're finally ready for the last step: prove and verify an AIR!

Since the code is relatively short, let us present it first and then go over the details.

fn main() {

// --snip--

// Prove

let proof = prove(&[&component], channel, commitment_scheme).unwrap();

// Verify

let channel = &mut Blake2sChannel::default();

let commitment_scheme = &mut CommitmentSchemeVerifier::<Blake2sMerkleChannel>::new(config);

let sizes = component.trace_log_degree_bounds();

commitment_scheme.commit(proof.commitments[0], &sizes[0], channel);

channel.mix_u64(log_num_rows as u64);

commitment_scheme.commit(proof.commitments[1], &sizes[1], channel);

verify(&[&component], channel, commitment_scheme, proof).unwrap();

}Prove

As you can see, there is only a single line of code added to create the proof. The prove function performs the FRI and PoW operations under the hood, although, technically, the constraint-related steps in Figure 1 were not performed in the previous section and are only performed once prove is called.

Verify

In order to verify our proof, we need to check that the constraints are satisfied using the commitments from the proof. In order to do that, we need to set up a Blake2sChannel and CommitmentSchemeVerifier<Blake2sMerkleChannel>, along with the same PcsConfig that we used when creating the proof. Then, we need to recreate the Fiat-Shamir channel by passing the Merkle tree commitments and the log_num_rows to the CommitmentSchemeVerifier instance by calling commit (remember: the order is important!). Then, we can verify the proof using the verify function.

Try setting the dummy values in the table to 1 instead of 0. Does it fail? If so, can you see why?

Congratulations! We have come full circle. We now know how to create a table, convert it to trace polynomials, commit to them, create constraints over the trace polynomials, and prove and verify the constraints (i.e. an AIR). In the following sections, we will go over some more complicated AIRs to explain Stwo's other features.

Preprocessed Trace

This section and the following sections are intended for developers who have completed the First Breath of AIR section or are already familiar with the workflow of creating an AIR. If you have not gone through the previous section, we recommend doing so first as the following sections gloss over a lot of boilerplate code.

For those of you who have completed the First Breath of AIR tutorial, you should now be familiar with the concept of a trace as a table of integers that are filled in by the prover (we will now refer to this as the original trace).

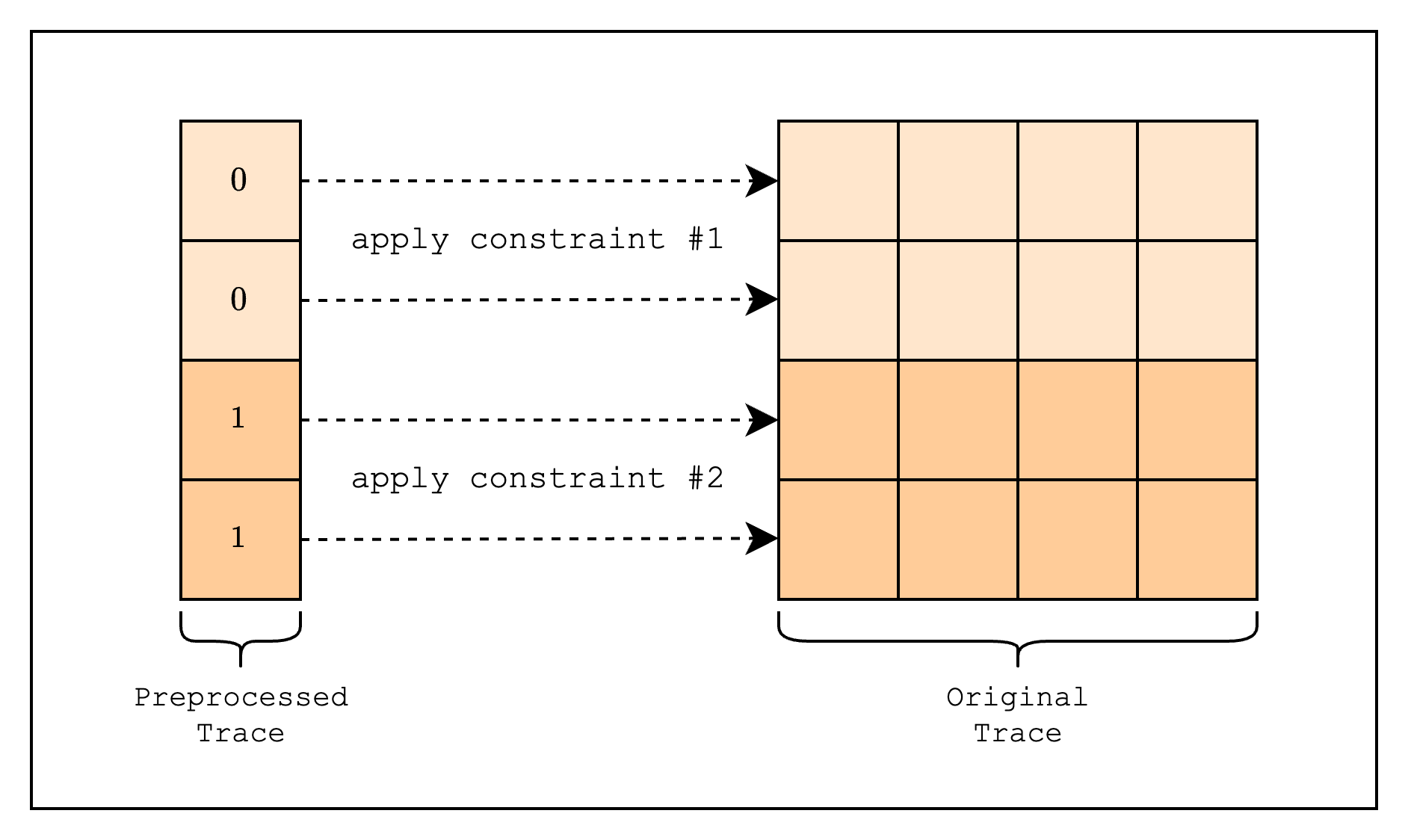

In addition to the original trace, Stwo also has a concept of a preprocessed trace, which is a table whose values are fixed and therefore cannot be arbitrarily chosen by the prover. In other words, these are columns whose values are known in advance of creating a proof and essentially agreed upon by both the prover and the verifier.

One of the use cases of the preprocessed trace is as a selector for different constraints. Remember that in an AIR, the same constraints are applied to every row of the trace? If we go back to the spreadsheet analogy, this means that we can't create a spreadsheet that runs different computations for different rows. To get around this, note that if we multiply a constraint with a "selector" that is zero, the constraint will be trivially satisfied. Building on this, we can create a selector column of 0s and 1s, and multiply the constraint with the selector column. For example, let's say we want to create a constraint that runs different computations for the first 2 rows and the next 2 rows. We can do this by creating a selector column that has value 0 for the first 2 rows and 1 for the next 2 rows and combining it with the constraints as follows:

Another use case is to use the preprocessed trace to express constant values used in constraints. For example, when creating a hash function in an AIR, we often need to use round constants, which the verifier needs to be able to verify or the resulting hash may be invalid. We can also "look up" the constant values as an optimization technique, which we will discuss in more detail in the next section.

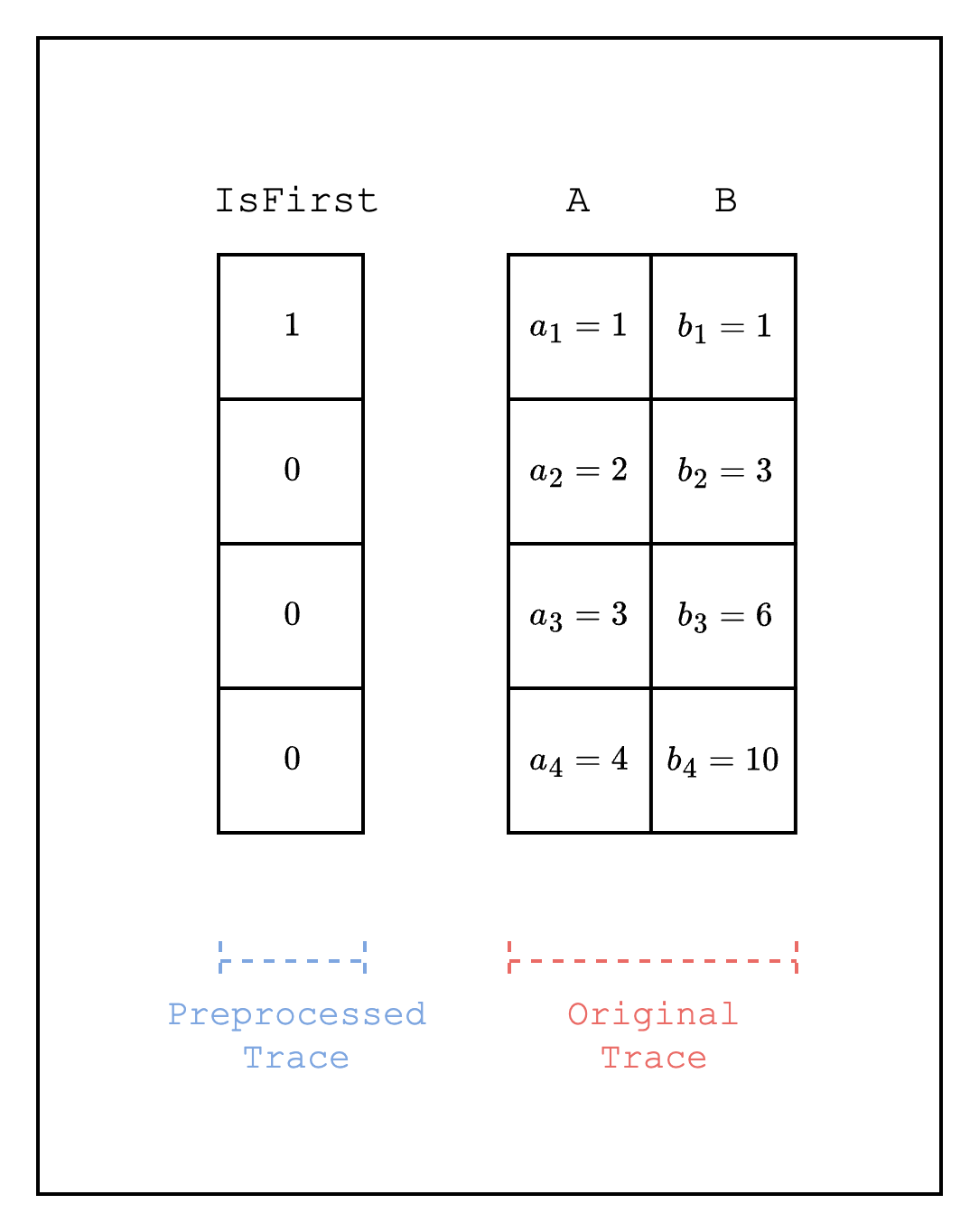

In this section, we will explore how to implement a preprocessed trace as a selector, and we will implement the simplest form: a single IsFirst column, where the value is 1 for the first row and 0 for all other rows.

Boilerplate code is omitted for brevity. Please refer to the full example code for the full implementation.

struct IsFirstColumn {

pub log_size: u32,

}

#[allow(dead_code)]

impl IsFirstColumn {

pub fn new(log_size: u32) -> Self {

Self { log_size }

}

pub fn gen_column(&self) -> CircleEvaluation<SimdBackend, M31, BitReversedOrder> {

let mut col = BaseColumn::zeros(1 << self.log_size);

col.set(0, M31::from(1));

CircleEvaluation::new(CanonicCoset::new(self.log_size).circle_domain(), col)

}

pub fn id(&self) -> PreProcessedColumnId {

PreProcessedColumnId {

id: format!("is_first_{}", self.log_size),

}

}

}First, we need to define an IsFirstColumn struct that will be used as a preprocessed trace. We will use the gen_column() function to generate a CircleEvaluation struct that is 1 for the first row and 0 for all other rows. The id() function is needed to identify this column when evaluating the constraints.

fn main() {

...

// Create and commit to the preprocessed trace

let is_first_column = IsFirstColumn::new(log_size);

let mut tree_builder = commitment_scheme.tree_builder();

tree_builder.extend_evals(vec![is_first_column.gen_column()]);

tree_builder.commit(channel);

// Commit to the size of the trace

channel.mix_u64(log_size as u64);

// Create and commit to the original trace

let trace = gen_trace(log_size);

let mut tree_builder = commitment_scheme.tree_builder();

tree_builder.extend_evals(trace);

tree_builder.commit(channel);

...

}Then, in our main function, we will create and commit to the preprocessed and original traces. For those of you who are curious about why we need to commit to the trace, please refer to the Committing to the Trace Polynomials section.

struct TestEval {

is_first_id: PreProcessedColumnId,

log_size: u32,

}

impl FrameworkEval for TestEval {

fn log_size(&self) -> u32 {

self.log_size

}

fn max_constraint_log_degree_bound(&self) -> u32 {

self.log_size + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR

}

fn evaluate<E: EvalAtRow>(&self, mut eval: E) -> E {

let is_first = eval.get_preprocessed_column(self.is_first_id.clone());

let col_1 = eval.next_trace_mask();

let col_2 = eval.next_trace_mask();

let col_3 = eval.next_trace_mask();

// If is_first is 1, then the constraint is col_1 * col_2 - col_3 = 0

// If is_first is 0, then the constraint is col_1 * col_2 + col_1 - col_3 = 0

eval.add_constraint(

is_first.clone() * (col_1.clone() * col_2.clone() - col_3.clone())

+ (E::F::from(M31::from(1)) - is_first.clone())

* (col_1.clone() * col_2.clone() + col_1.clone() - col_3.clone()),

);

eval

}

}Now that we have the traces, we need to create a struct that contains the logic for evaluating the constraints. As mentioned before, we need to use the is_first_id field to retrieve the row value of the IsFirstColumn struct. Then, we compose two constraints using the IsFirstColumn row value as a selector and adding them together.

If you're unfamiliar with how max_constraint_log_degree_bound(&self) should be implemented, please refer to this note.

fn main() {

...

// Create a component

let component = FrameworkComponent::<TestEval>::new(

&mut TraceLocationAllocator::default(),

TestEval {

is_first_id: is_first_column.id(),

log_size,

},

QM31::zero(),

);

// Prove

let proof = prove(&[&component], channel, commitment_scheme).unwrap();

// Verify

let channel = &mut Blake2sChannel::default();

let commitment_scheme = &mut CommitmentSchemeVerifier::<Blake2sMerkleChannel>::new(config);

let sizes = component.trace_log_degree_bounds();

commitment_scheme.commit(proof.commitments[0], &sizes[0], channel);

channel.mix_u64(log_size as u64);

commitment_scheme.commit(proof.commitments[1], &sizes[1], channel);

verify(&[&component], channel, commitment_scheme, proof).unwrap();

}Finally, we can create a FrameworkComponent using the TestEval struct and then prove and verify the component.

Static Lookups

In the previous section, we showed how to create a preprocessed trace. In this section, we will introduce the concept of an interaction trace, and use it with the preprocessed trace to implement static lookups.

Let's start with a brief introduction to lookups. A lookup is a way to connect values from one part of the table to another part of the table. A simple example is when we want to copy values across parts of the table. At first glance, this seems feasible using a constraint. For example, we can copy values to by creating a constraint that is equal to . The limitation with this approach, however, is that the same constraint needs to be satisfied over every row in the columns. In other words, we can only check that is an exact copy of :

But what if we want to check that is a copy of regardless of the order of the values? This can be done by comparing that the grand product of the random linear combinations of all values in is equal to the grand product of the random linear combinations of all values in :

where is a random value from the verifier.

By taking the logarithmic derivative of each side of the equation, we can rewrite it:

We can go further and allow each of the original values to be copied a different number of times. This is supported by modifying the check to the following:

Where represents the multiplicity, or the number of times appears in .

In Stwo, these fractions (which we will hereafter refer to as LogUp fractions) are stored in a special type of trace called an interaction trace. An interaction trace is used to contain values that involve interaction between the prover and the verifier. As mentioned above, a LogUp fraction requires a random value from the verifier, which is why it is stored in an interaction trace.

Range-check AIR

We will now walk through the implementation of a static lookup, which is a lookup where the values that are being looked up are static, i.e. part of the preprocessed trace. Specifically, we will implement a range-check AIR, which checks that a certain value is within a given range. This is especially useful for frameworks like Stwo that use finite fields because it allows checking for underflow and overflow.

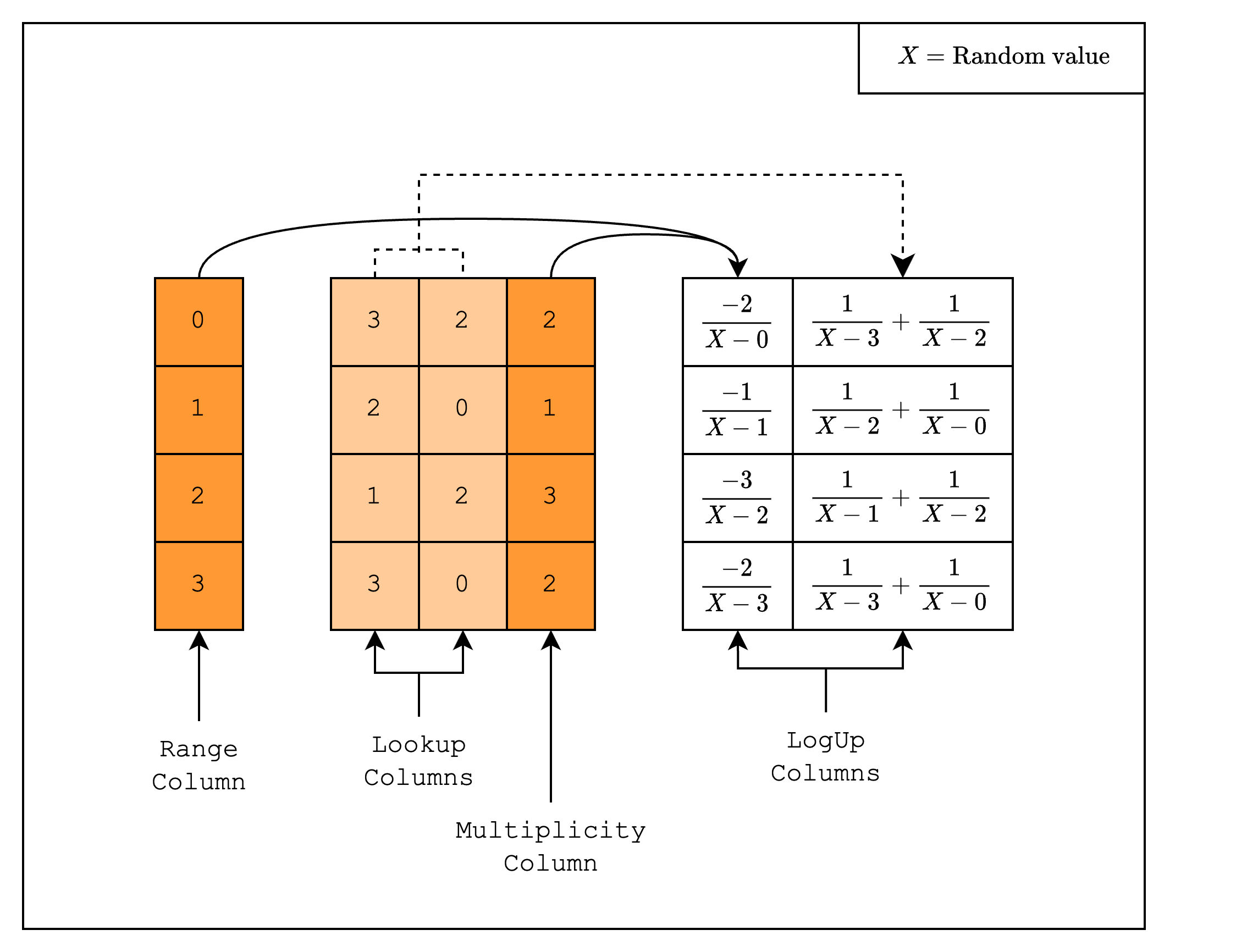

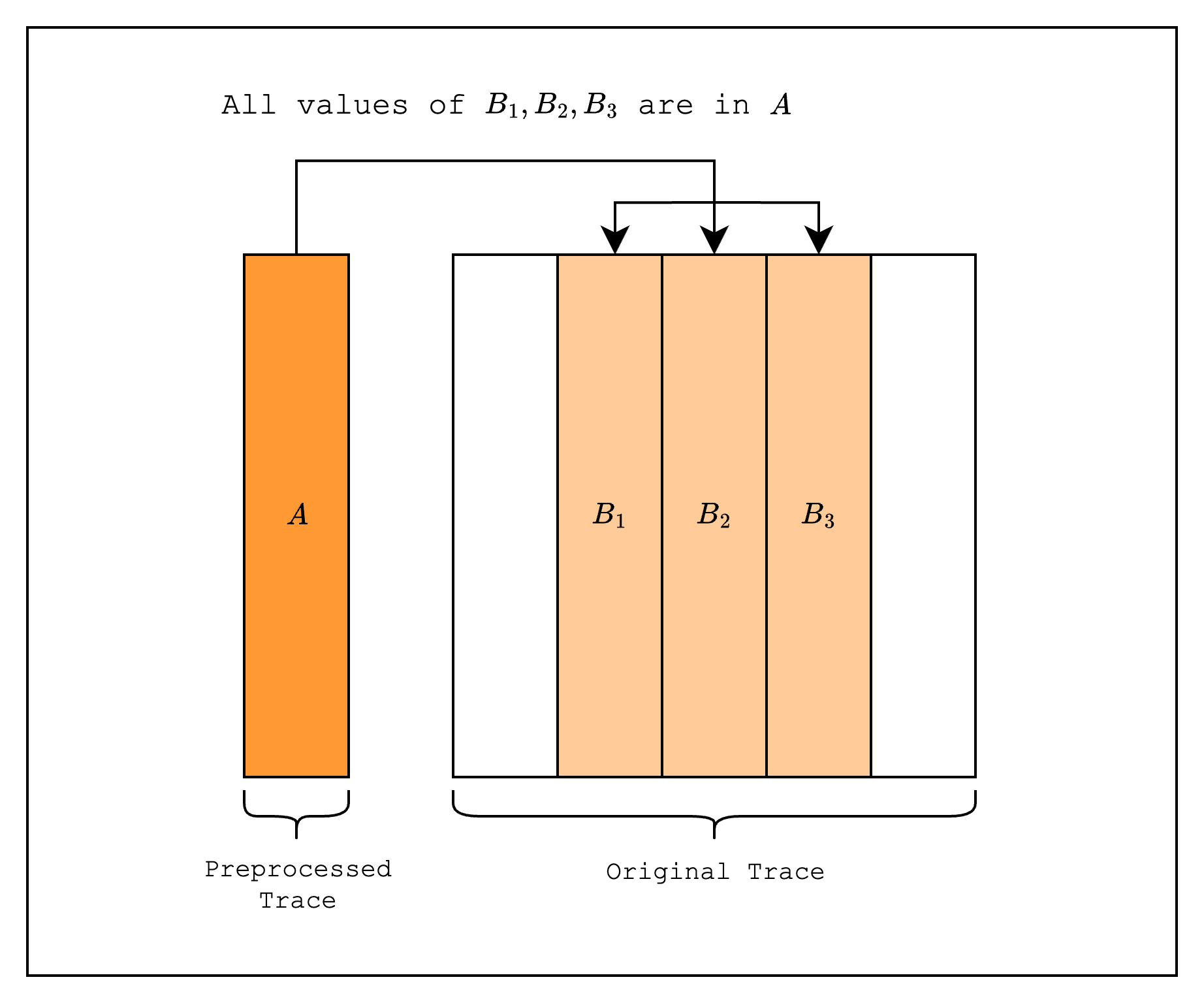

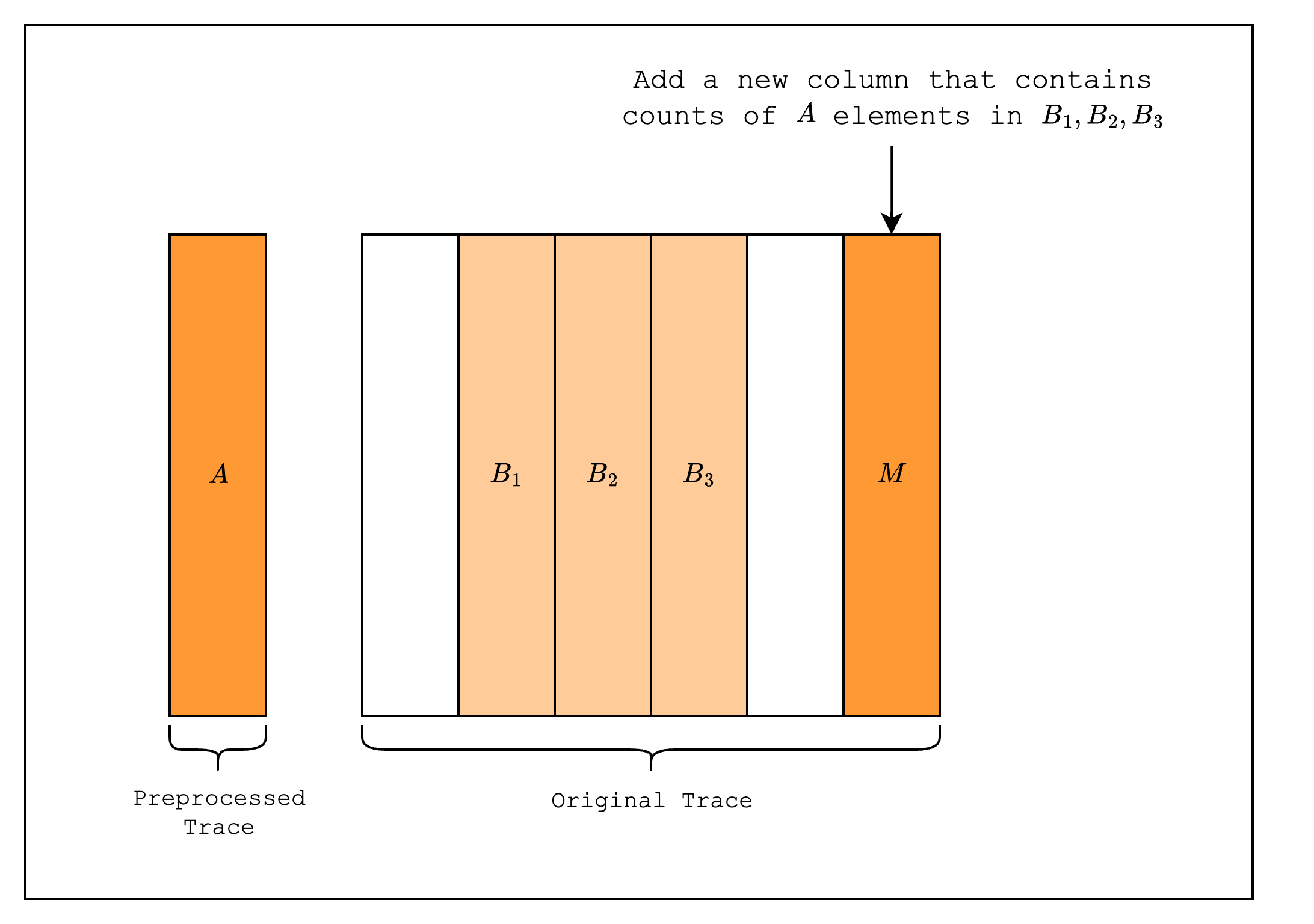

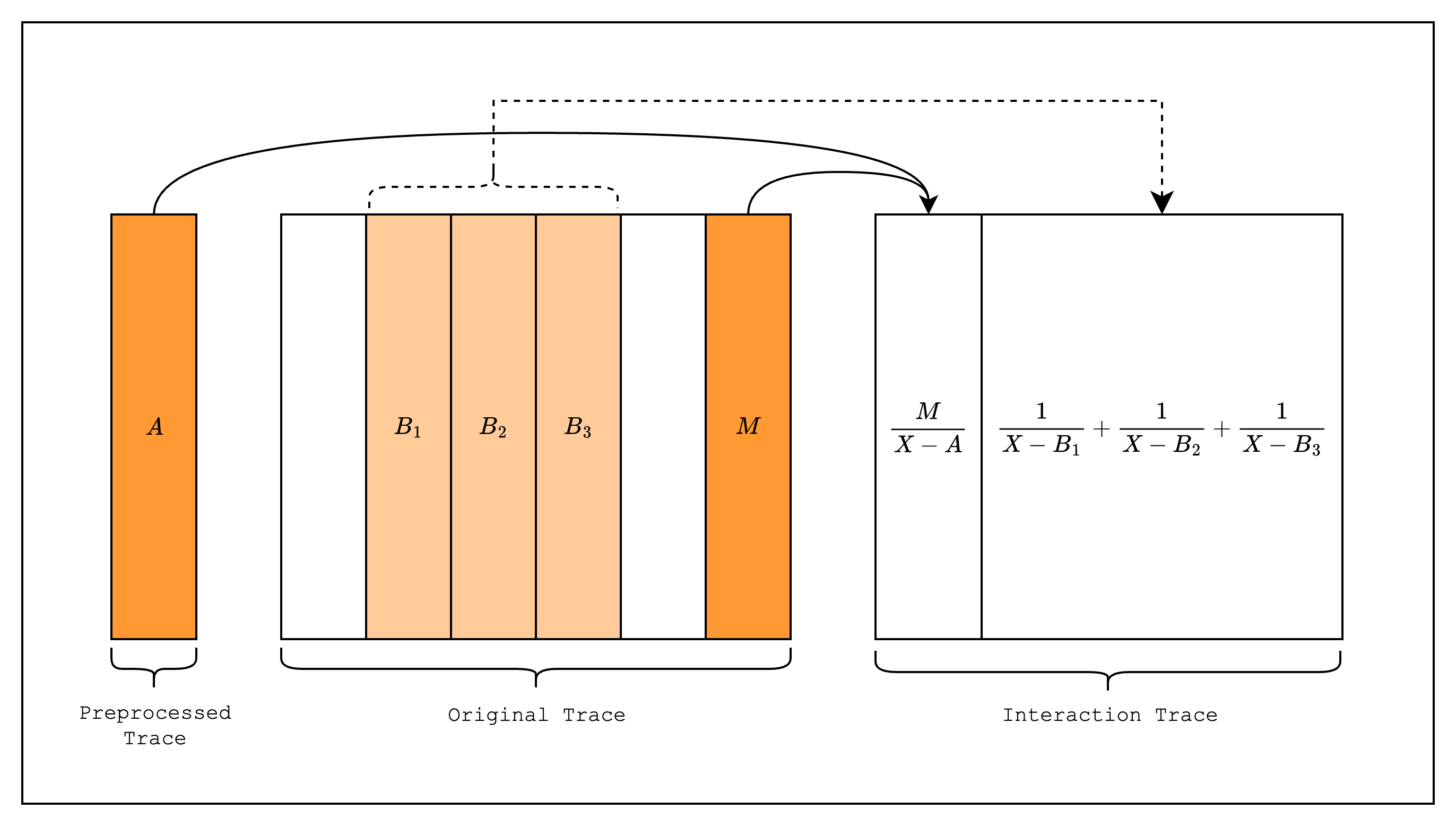

A range-check checks that all values in a column are within a certain range. For example, as in Figure 1, we can check that all values in the range-checked columns are between 0 and 3. We do this by first creating a multiplicity column that counts the number of times each value in the preprocessed trace appears in the range-checked columns.

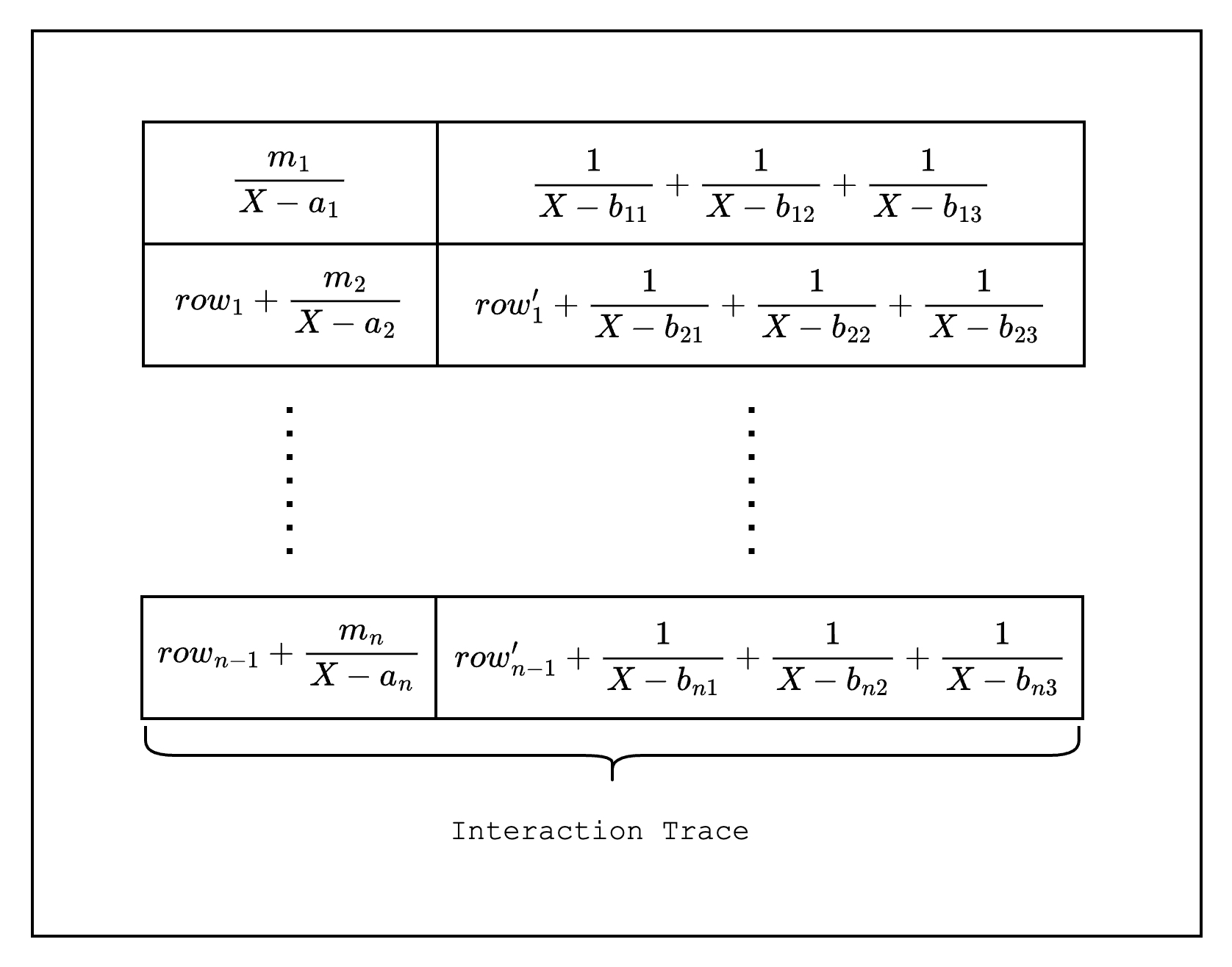

Then, we create two LogUp columns as part of the interaction trace. The first column contains in each row a fraction with numerator equal to the multiplicity and denominator equal to the random linear combination of the value in the range column. For example, for row 1, the fraction should be , where is a random value. The second column contains batches of fractions where the denominator of each fraction is the random linear combination of the value in the range-checked column. Note that the numerator of each fraction is always -1, i.e. we apply a negation, because we want the sum of the first column to be equal to the sum of the second column.

If we add all the fractions in the two columns together, we get 0. This means that the verifier will be convinced with high probability that the values in the range-checked columns are a subset of the values in the range column.

Implementation

Now let's move on to the implementation where we create a 4-bit range-check AIR. We do this by creating a preprocessed trace column with the integers , then using a lookup to force the values in the original trace columns to lie in the values of the preprocessed column.

struct RangeCheckColumn {

pub log_size: u32,

}

#[allow(dead_code)]

impl RangeCheckColumn {

pub fn new(log_size: u32) -> Self {

Self { log_size }

}

pub fn gen_column(&self) -> CircleEvaluation<SimdBackend, M31, BitReversedOrder> {

let col = BaseColumn::from_iter((0..(1 << self.log_size)).map(|i| M31::from(i)));

CircleEvaluation::new(CanonicCoset::new(self.log_size).circle_domain(), col)

}

pub fn id(&self) -> PreProcessedColumnId {

PreProcessedColumnId {

id: format!("range_check_{}_bits", self.log_size),

}

}

}First, we need to create the range-check column as a preprocessed column. This should look familiar to the code from the previous section.

fn gen_trace(log_size: u32) -> Vec<CircleEvaluation<SimdBackend, M31, BitReversedOrder>> {

// Create a table with random values

let mut rng = rand::thread_rng();

let lookup_col_1 =

BaseColumn::from_iter((0..(1 << log_size)).map(|_| M31::from(rng.gen_range(0..16))));

let lookup_col_2 =

BaseColumn::from_iter((0..(1 << log_size)).map(|_| M31::from(rng.gen_range(0..16))));

let mut multiplicity_col = BaseColumn::zeros(1 << log_size);

lookup_col_1

.as_slice()

.iter()

.chain(lookup_col_2.as_slice().iter())

.for_each(|value| {

let index = value.0 as usize;

multiplicity_col.set(index, multiplicity_col.at(index) + M31::from(1));

});

// Convert table to trace polynomials

let domain = CanonicCoset::new(log_size).circle_domain();

vec![

lookup_col_1.clone(),

lookup_col_2.clone(),

multiplicity_col.clone(),

]

.into_iter()

.map(|col| CircleEvaluation::new(domain, col))

.collect()

}Next, we create the original trace columns. The first two columns are random values in the range , and the third column contains the counts of the values in the range-check column.

relation!(SmallerThan16Elements, 1);

fn gen_logup_trace(

range_log_size: u32,

log_size: u32,

range_check_col: &BaseColumn,

lookup_col_1: &BaseColumn,

lookup_col_2: &BaseColumn,

multiplicity_col: &BaseColumn,

lookup_elements: &SmallerThan16Elements,

) -> (

Vec<CircleEvaluation<SimdBackend, M31, BitReversedOrder>>,

SecureField,

) {

let mut logup_gen = LogupTraceGenerator::new(range_log_size);

let mut col_gen = logup_gen.new_col();

for simd_row in 0..(1 << (range_log_size - LOG_N_LANES)) {

let numerator: PackedSecureField = PackedSecureField::from(multiplicity_col.data[simd_row]);

let denom: PackedSecureField = lookup_elements.combine(&[range_check_col.data[simd_row]]);

col_gen.write_frac(simd_row, -numerator, denom);

}

col_gen.finalize_col();

let mut col_gen = logup_gen.new_col();

for simd_row in 0..(1 << (log_size - LOG_N_LANES)) {

let lookup_col_1_val: PackedSecureField =

lookup_elements.combine(&[lookup_col_1.data[simd_row]]);

let lookup_col_2_val: PackedSecureField =

lookup_elements.combine(&[lookup_col_2.data[simd_row]]);

// 1 / denom1 + 1 / denom2 = (denom1 + denom2) / (denom1 * denom2)

let numerator = lookup_col_1_val + lookup_col_2_val;

let denom = lookup_col_1_val * lookup_col_2_val;

col_gen.write_frac(simd_row, numerator, denom);

}

col_gen.finalize_col();

logup_gen.finalize_last()

}

fn main() {

...

// Draw random elements to use when creating the random linear combination of lookup values in the LogUp columns

let lookup_elements = SmallerThan16Elements::draw(channel);

// Create and commit to the LogUp columns

let (logup_cols, claimed_sum) = gen_logup_trace(

range_log_size,

log_num_rows,

&range_check_col,

&trace[0],

&trace[1],

&trace[2],

&lookup_elements,

);

...

}Now we need to create the LogUp columns.

First, note that we are creating a SmallerThan16Elements instance using the macro relation!. This macro creates an API for performing random linear combinations. Under the hood, it creates two random values that can create a random linear combination of an arbitrary number of elements. In our case, we only need to combine one value (value in ), which is why we pass in 1 to the macro.

Inside gen_logup_trace, we create a LogupTraceGenerator instance. This is a helper class that allows us to create LogUp columns. Every time we create a new column, we need to call new_col() on the LogupTraceGenerator instance.

You may notice that we are iterating over BaseColumn in chunks of 16, or 1 << LOG_N_LANES values. This is because we are using the SimdBackend, which runs 16 lanes simultaneously, so we need to preserve this structure. The Packed in PackedSecureField means that it packs 16 values into a single value.

You may also notice that we are using a SecureField instead of just the Field. This is because the random value we created by SmallerThan16Elements lies in the degree-4 extension field . This is necessary for the security of the protocol and interested readers can refer to the Mersenne Primes section for more details.

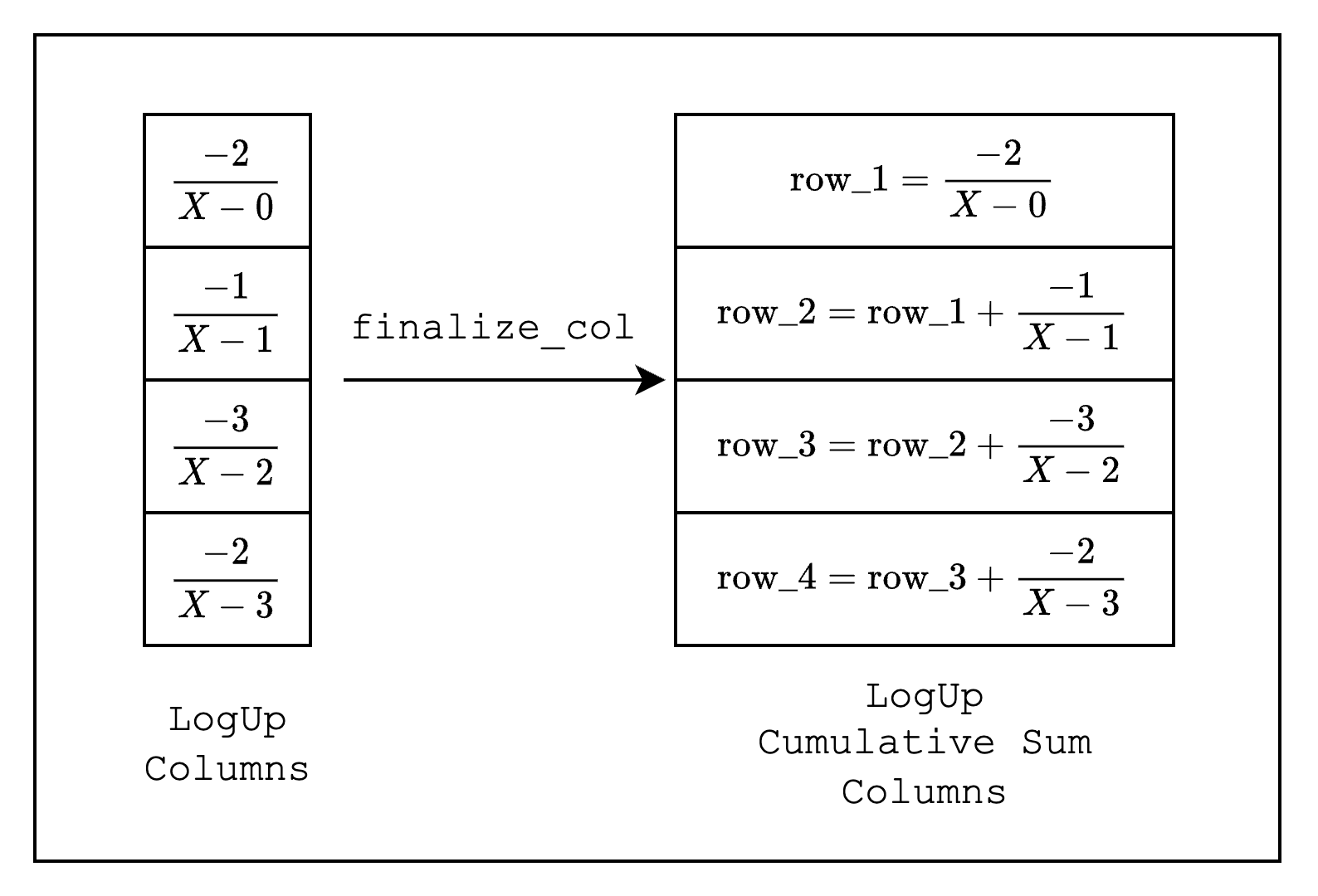

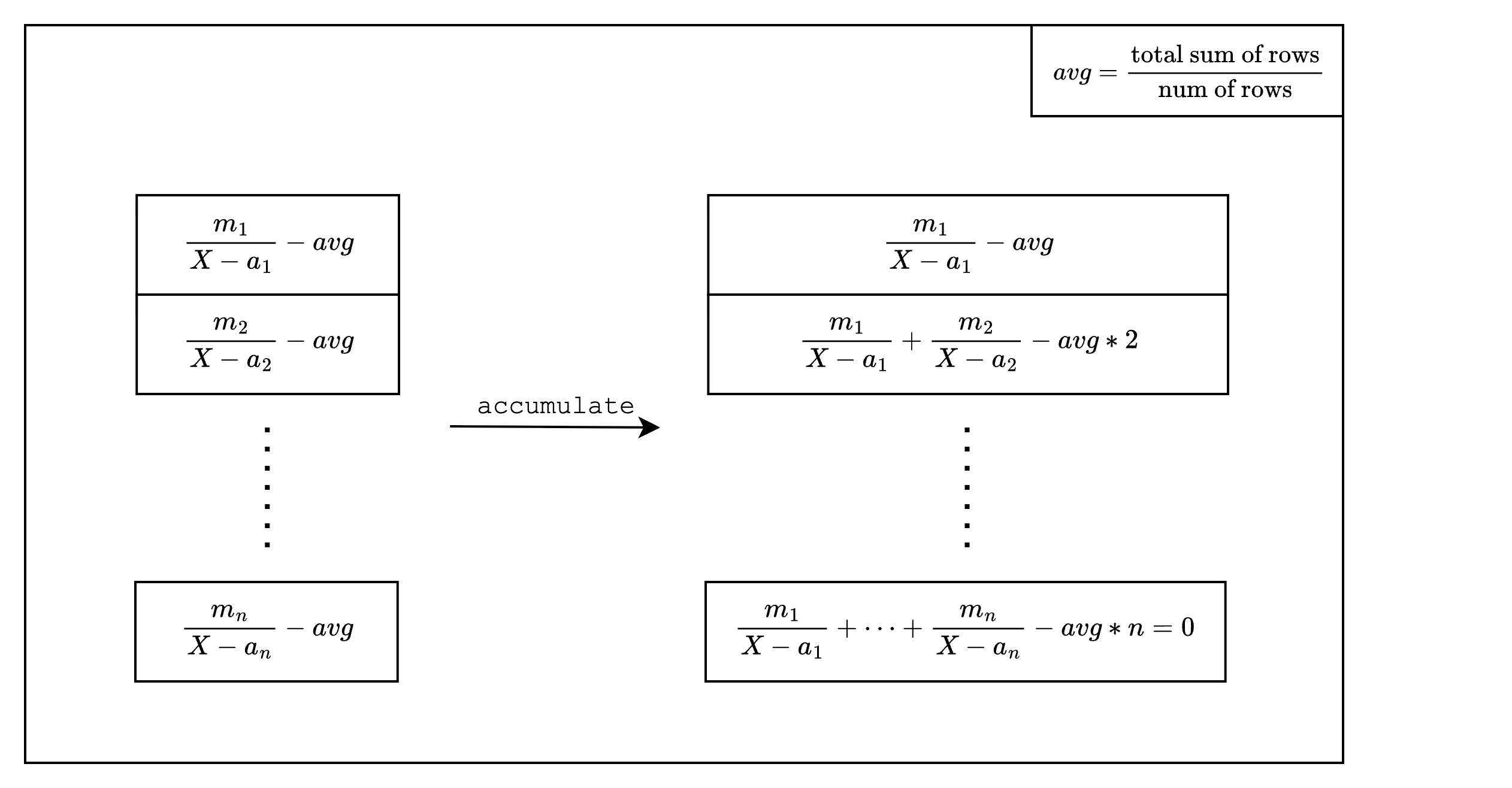

Once we set the fractions for each simd_row, we need to call finalize_col() to finalize the column. This process modifies the LogUp columns from individual fractions to cumulative sums of the fractions as shown in Figure 2.

Finally, we need to call finalize_last() on the LogupTraceGenerator instance to finalize the LogUp columns, which will return the LogUp columns as well as the sum of the fractions in the LogUp columns.

struct TestEval {

range_check_id: PreProcessedColumnId,

log_size: u32,

lookup_elements: SmallerThan16Elements,

}

impl FrameworkEval for TestEval {

fn log_size(&self) -> u32 {

self.log_size

}

fn max_constraint_log_degree_bound(&self) -> u32 {

self.log_size + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR

}

fn evaluate<E: EvalAtRow>(&self, mut eval: E) -> E {

let range_check_col = eval.get_preprocessed_column(self.range_check_id.clone());

let lookup_col_1 = eval.next_trace_mask();

let lookup_col_2 = eval.next_trace_mask();

let multiplicity_col = eval.next_trace_mask();

eval.add_to_relation(RelationEntry::new(

&self.lookup_elements,

-E::EF::from(multiplicity_col),

&[range_check_col],

));

eval.add_to_relation(RelationEntry::new(

&self.lookup_elements,

E::EF::one(),

&[lookup_col_1],

));

eval.add_to_relation(RelationEntry::new(

&self.lookup_elements,

E::EF::one(),

&[lookup_col_2],

));

eval.finalize_logup_batched(&vec![0, 1, 1]);

eval

}

}The last piece of the puzzle is to create the constraints. We use the same TestEval struct as in the previous sections, but the evaluate function will look slightly different. Instead of calling add_constraint on the EvalAtRow instance, we will call add_to_relation, which recreates the fractions that we added in the LogUp columns using values in the range-check, lookup, and multiplicity columns.

Once we add the fractions as constraints, we call the finalize_logup_batched function, which indicates how we want to batch the fractions. In our case, we added 3 fractions but want to create batches where the last two fractions are batched together, so we pass in &vec![0, 1, 1].

// Verify

assert_eq!(claimed_sum, SecureField::zero());

let channel = &mut Blake2sChannel::default();

let commitment_scheme = &mut CommitmentSchemeVerifier::<Blake2sMerkleChannel>::new(config);

let sizes = component.trace_log_degree_bounds();

commitment_scheme.commit(proof.commitments[0], &sizes[0], channel);

channel.mix_u64((log_num_rows) as u64);

commitment_scheme.commit(proof.commitments[1], &sizes[1], channel);

commitment_scheme.commit(proof.commitments[2], &sizes[2], channel);

verify(&[&component], channel, commitment_scheme, proof).unwrap();When we verify the proof, as promised, we check that the claimed_sum, which is the sum of the fractions in the LogUp columns, is 0.

And that's it! We have successfully created a static lookup for a range-check.

How many fractions can we batch together?

This depends on how we set the max_constraint_log_degree_bound function, as discussed in this note. More specifically, we can batch up to exactly the blowup factor.

e.g.

self.log_size + 1-> 2 fractionsself.log_size + 2-> 4 fractionsself.log_size + 3-> 8 fractionsself.log_size + 4-> 16 fractions- ...

Note that unlike what Figure 1 shows, the size of the range column and the range-checked columns do not have to be the same. As we will learn in the Components section, we can create separate components for the range-check and the range-checked columns to support such cases.

Dynamic Lookups

In the last section, we implemented a static lookup. A dynamic lookup is the same as a static lookup except that the values that are being looked up are not known before the proving process (i.e. they are not preprocessed columns but trace columns).

In this section, we will implement one of the simplest dynamic lookups: a permutation check.

A permutation check simply checks that two sets of values have the same elements, but not necessarily in the same order. For example, the values and are a permutation of each other, but and are not.

If you went through the previous section, you should have a good intuition for how to implement this. First, create two original trace columns that each contain a random permutation of the same set of values. Then, create a LogUp column where the first original trace column is added as a fraction with multiplicity and the second original trace column is added as a fraction with multiplicity . Then, check that the claimed_sum, or the sum of the fractions in the two LogUp columns, is .

Let's move on to the implementation.

fn gen_trace(log_size: u32) -> Vec<CircleEvaluation<SimdBackend, M31, BitReversedOrder>> {

let mut rng = rand::thread_rng();

let values = (0..(1 << log_size)).map(|i| i).collect::<Vec<_>>();

// Create a random permutation of the values

let mut random_values = values.clone();

random_values.shuffle(&mut rng);

let random_col_1 = BaseColumn::from_iter(random_values.iter().map(|v| M31::from(*v)));

// Create another random permutation of the values

let mut random_values = random_values.clone();

random_values.shuffle(&mut rng);

let random_col_2 = BaseColumn::from_iter(random_values.iter().map(|v| M31::from(*v)));

// Convert table to trace polynomials

let domain = CanonicCoset::new(log_size).circle_domain();

vec![random_col_1, random_col_2]

.into_iter()

.map(|col| CircleEvaluation::new(domain, col))

.collect()

}

fn gen_logup_trace(

log_size: u32,

random_col_1: &BaseColumn,

random_col_2: &BaseColumn,

lookup_elements: &LookupElements,

) -> (

Vec<CircleEvaluation<SimdBackend, M31, BitReversedOrder>>,

SecureField,

) {

let mut logup_gen = LogupTraceGenerator::new(log_size);

let mut col_gen = logup_gen.new_col();

for row in 0..(1 << (log_size - LOG_N_LANES)) {

// 1 / random - 1 / ordered = (ordered - random) / (random * ordered)

let random_val: PackedSecureField = lookup_elements.combine(&[random_col_1.data[row]]);

let ordered_val: PackedSecureField = lookup_elements.combine(&[random_col_2.data[row]]);

col_gen.write_frac(row, ordered_val - random_val, random_val * ordered_val);

}

col_gen.finalize_col();

logup_gen.finalize_last()

}

fn main() {

...

// Create and commit to the trace columns

let trace = gen_trace(log_size);

let mut tree_builder = commitment_scheme.tree_builder();

tree_builder.extend_evals(trace.clone());

tree_builder.commit(channel);

// Draw random elements to use when creating the random linear combination of lookup values in the LogUp columns

let lookup_elements = LookupElements::draw(channel);

// Create and commit to the LogUp columns

let (logup_cols, claimed_sum) =

gen_logup_trace(log_size, &trace[0], &trace[1], &lookup_elements);

let mut tree_builder = commitment_scheme.tree_builder();

tree_builder.extend_evals(logup_cols);

tree_builder.commit(channel);

...

}Looking at the code above, we can see that it looks very similar to the implementation in the previous section. Instead of creating a preprocessed column, we create two columns where the first column is a random permutation of values [0, 1 << log_size) and the second column contains the values in order. Note that this is equivalent to "looking up" all values in the first trace column once. And since all the values are looked up exactly once, we do not need a separate multiplicity column.

Then, we create a LogUp column that contains the values .

struct TestEval {

log_size: u32,

lookup_elements: LookupElements,

}

impl FrameworkEval for TestEval {

fn log_size(&self) -> u32 {

self.log_size

}

fn max_constraint_log_degree_bound(&self) -> u32 {

self.log_size + LOG_CONSTRAINT_EVAL_BLOWUP_FACTOR

}

fn evaluate<E: EvalAtRow>(&self, mut eval: E) -> E {

let random_col = eval.next_trace_mask();

let ordered_col = eval.next_trace_mask();

eval.add_to_relation(RelationEntry::new(

&self.lookup_elements,

E::EF::one(),

&[random_col],

));

eval.add_to_relation(RelationEntry::new(

&self.lookup_elements,

-E::EF::one(),

&[ordered_col],

));

eval.finalize_logup_in_pairs();

eval

}

}The TestEval struct is also very similar to the one in the previous section. The only difference is that we call add_to_relation twice and add them together by calling finalize_logup_in_pairs() on the TestEval instance. This is equivalent to calling the finalize_logup_batched function with &vec![0, 0].

Local Row Constraints

Until now, we have only considered constraints that apply over values in a single row. But what if we want to express constraints over multiple adjacent rows? For example, we may want to ensure that the difference between the values in two adjacent rows is always the same.

Turns out we can implement this as an AIR constraint, as long as the same constraints are applied to all rows. We will build upon the example in the previous section, where we created two columns and proved that they are permutations of each other by asserting that the second column looks up all values in the first column exactly once.

Here, we will create two columns and prove that not only are they permutations of each other, but also that the second column is a sorted version of the first column. Since the sorted column will contain in order the values , this is equivalent to asserting that the difference between every current row and the previous row is .

We will implement this in three iterations, fixing a different issue in each iteration.

First Try

fn evaluate<E: EvalAtRow>(&self, mut eval: E) -> E {

let unsorted_col = eval.next_trace_mask();

let [sorted_col_prev_row, sorted_col_curr_row] =

eval.next_interaction_mask(ORIGINAL_TRACE_IDX, [-1, 0]);

// New constraint

eval.add_constraint(

E::F::one() - (sorted_col_curr_row.clone() - sorted_col_prev_row.clone()),

);

eval.add_to_relation(RelationEntry::new(

&self.lookup_elements,

E::EF::one(),

&[unsorted_col],

));

eval.add_to_relation(RelationEntry::new(

&self.lookup_elements,

-E::EF::one(),

&[sorted_col_curr_row],

));

eval.finalize_logup_in_pairs();

eval

}The logic for creating the trace and LogUp columns is basically the same as in the previous section (except that one of the columns is now sorted), so we omit them for brevity.

Another change is in the evaluate function, where we call eval.next_interaction_mask(ORIGINAL_TRACE_IDX, [-1, 0]) instead of eval.next_trace_mask(). The function next_trace_mask() is a wrapper for next_interaction_mask(ORIGINAL_TRACE_IDX, [0]), where the first parameter specifies which part of the trace to retrieve values from (see this figure for an example of the different parts of a trace). Since we want to retrieve values from the original trace, we set the value of the first parameter to ORIGINAL_TRACE_IDX. Next, the second parameter indicates the row offset of the value we want to retrieve. Since we want to retrieve both the previous and current row values for the sorted column, we set the value of the second parameter to [-1, 0].

Once we have these values, we can now assert that the difference between the current and previous row is always one with the constraint: E::F::one() - (sorted_col_curr_row.clone() - sorted_col_prev_row.clone()).

But this will fail with a ConstraintsNotSatisfied error, can you see why? (You can try running it yourself here)

Second Try

The issue was that when calling evaluate on the first row of our trace, the previous row value wraps around to the last row because there are no negative indices.

This means that in our example, we are expecting the 0 - 15 = 1 constraint to hold, which is clearly not true.

To fix this, we can use the IsFirstColumn preprocessed column that we created in the Preprocessed Trace section. So we will copy over the same code for creating the preprocessed column and modify our new constraint as follows:

let is_first_col = eval.get_preprocessed_column(self.is_first_id.clone());

eval.add_constraint(

(E::F::one() - is_first_col.clone())

* (E::F::one() - (sorted_col_curr_row.clone() - sorted_col_prev_row.clone())),

);Now, we have a constraint that is disabled for the first row, which is exactly what we want.

Still, however, this will fail with the same ConstraintsNotSatisfied error. (You can run it here)

Third Try

So when we were creating CircleEvaluation instances from our BaseColumn instances, the order of the elements that we were creating it with was actually not the order that Stwo understands it to be. Instead, it assumes that the values are in the bit-reversed, circle domain order. It's not important to understand what this order is, specifically, but this does mean that when Stwo tries to find the -1 offset when calling evaluate, it will find the previous value assuming that it's in a different order. This means that when we create a CircleEvaluation instance, we need to convert it to a bit-reversed circle domain order.

Thus, every time we create a CircleEvaluation instance, we need to convert the order of the values in the BaseColumn beforehand.

impl IsFirstColumn {

...

pub fn gen_column(&self) -> CircleEvaluation<SimdBackend, M31, BitReversedOrder> {

let mut col = BaseColumn::zeros(1 << self.log_size);

col.set(0, M31::from(1));

//////////////////////////////////////////////////////////////

// Convert the columns to bit-reversed circle domain order

bit_reverse_coset_to_circle_domain_order(col.as_mut_slice());

//////////////////////////////////////////////////////////////

CircleEvaluation::new(CanonicCoset::new(self.log_size).circle_domain(), col)

}

...

}

fn gen_trace(log_size: u32) -> Vec<CircleEvaluation<SimdBackend, M31, BitReversedOrder>> {

// Create a table with random values

let mut rng = rand::thread_rng();

let sorted_values = (0..(1 << log_size)).map(|i| i).collect::<Vec<_>>();

let mut unsorted_values = sorted_values.clone();

unsorted_values.shuffle(&mut rng);

let mut unsorted_col = BaseColumn::from_iter(unsorted_values.iter().map(|v| M31::from(*v)));

let mut sorted_col = BaseColumn::from_iter(sorted_values.iter().map(|v| M31::from(*v)));

// Convert table to trace polynomials

let domain = CanonicCoset::new(log_size).circle_domain();

////////////////////////////////////////////////////////////////////

// Convert the columns to bit-reversed circle domain order

bit_reverse_coset_to_circle_domain_order(unsorted_col.as_mut_slice());

bit_reverse_coset_to_circle_domain_order(sorted_col.as_mut_slice());

////////////////////////////////////////////////////////////////////

vec![unsorted_col, sorted_col]

.into_iter()

.map(|col| CircleEvaluation::new(domain, col))

.collect()

}Voilà, we have successfully implemented the constraint. You can run it here.

Things to consider when implementing constraints over multiple rows:

- Change the order of elements in

BaseColumnin-place viabit_reverse_coset_to_circle_domain_orderbefore creating aCircleEvaluationinstance. This is required because Stwo assumes that the values are in the bit-reversed, circle domain order. - For the first row, the 'previous' row is the last row of the trace, so you may need to disable the constraint for the first row. This is typically done by using a preprocessed column.

Components

So now that we know how to create a self-contained AIR, the inevitable question arises: How do we make this modular?

Fortunately, Stwo provides an abstraction called components that allows us to create independent AIRs and compose them together. In other proving frontends, this is also commonly referred to as a chip, but the idea is the same.

One of the most common use cases of components is to separate frequently used functions (e.g. a hash function) from the main component into a separate component and reuse it, avoiding trace column bloat. Even if the function is not frequently used, it can be useful to separate it into a component to avoid the degree of the constraints becoming too high. This second point is possible because when we create a new component and connect it to the old component, we do it by using lookups, which means that the constraints of the new component are not added to the degree of the old component.

Hash Function Example

To illustrate how to use components, we will create two components where the main component calls a hash function component. For simplicity, instead of an actual hash function, the second component will compute from an input . This component will have, in total, three columns: [input, intermediate, output], which will correspond to the values . Our main component, on the other hand, will have two columns, [input, output], which corresponds to the values .

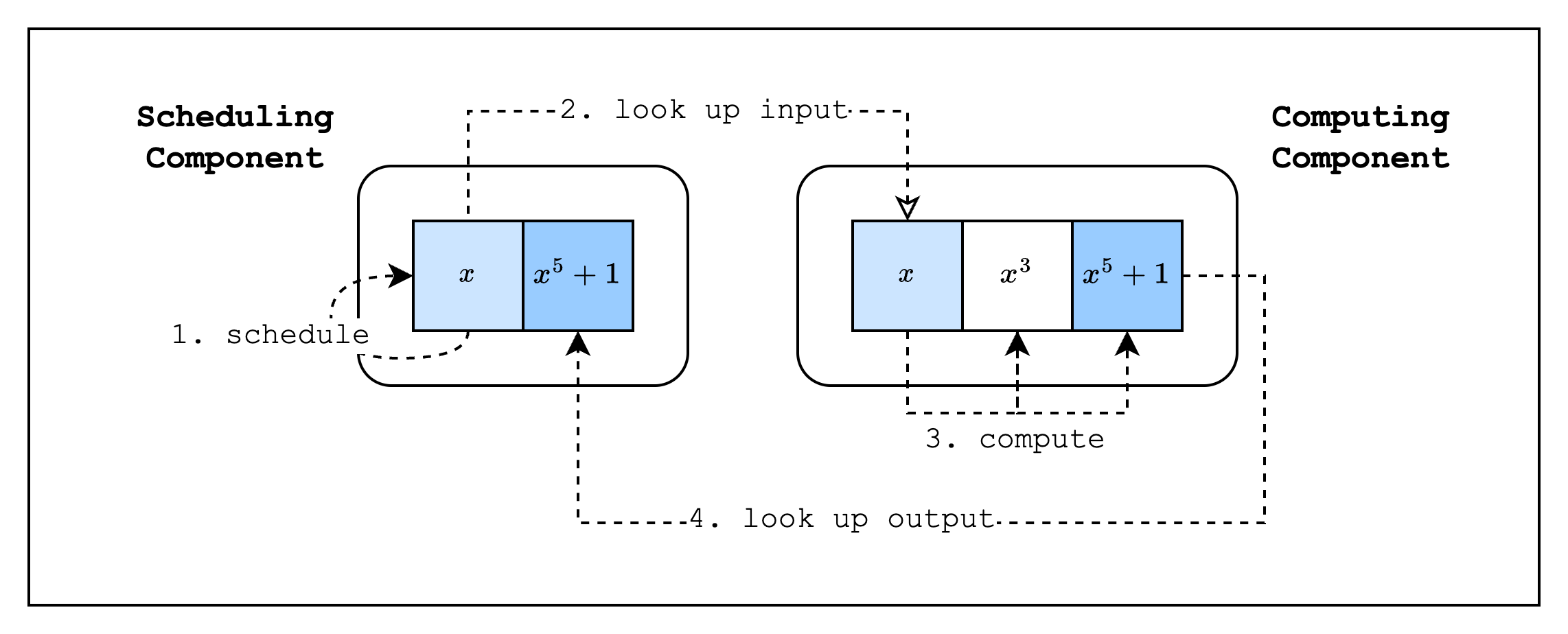

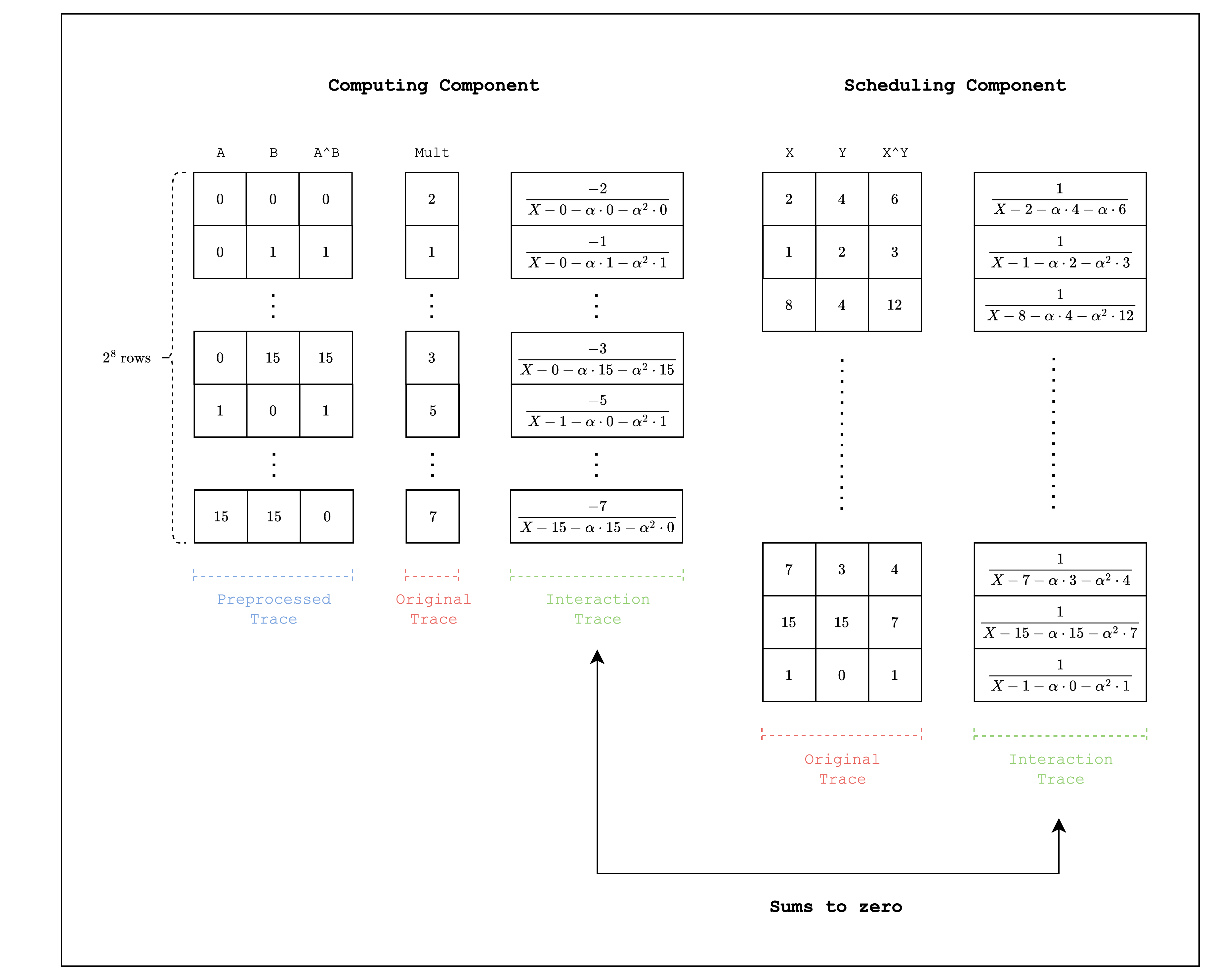

We'll refer to the main component as the scheduling component and the hash function component as the computing component, since the main component is essentially scheduling the hash function component to run its function with a given input and the hash function component computes on the provided input. As can be seen in Figure 1, the input and output of each component are connected by lookups.

Design

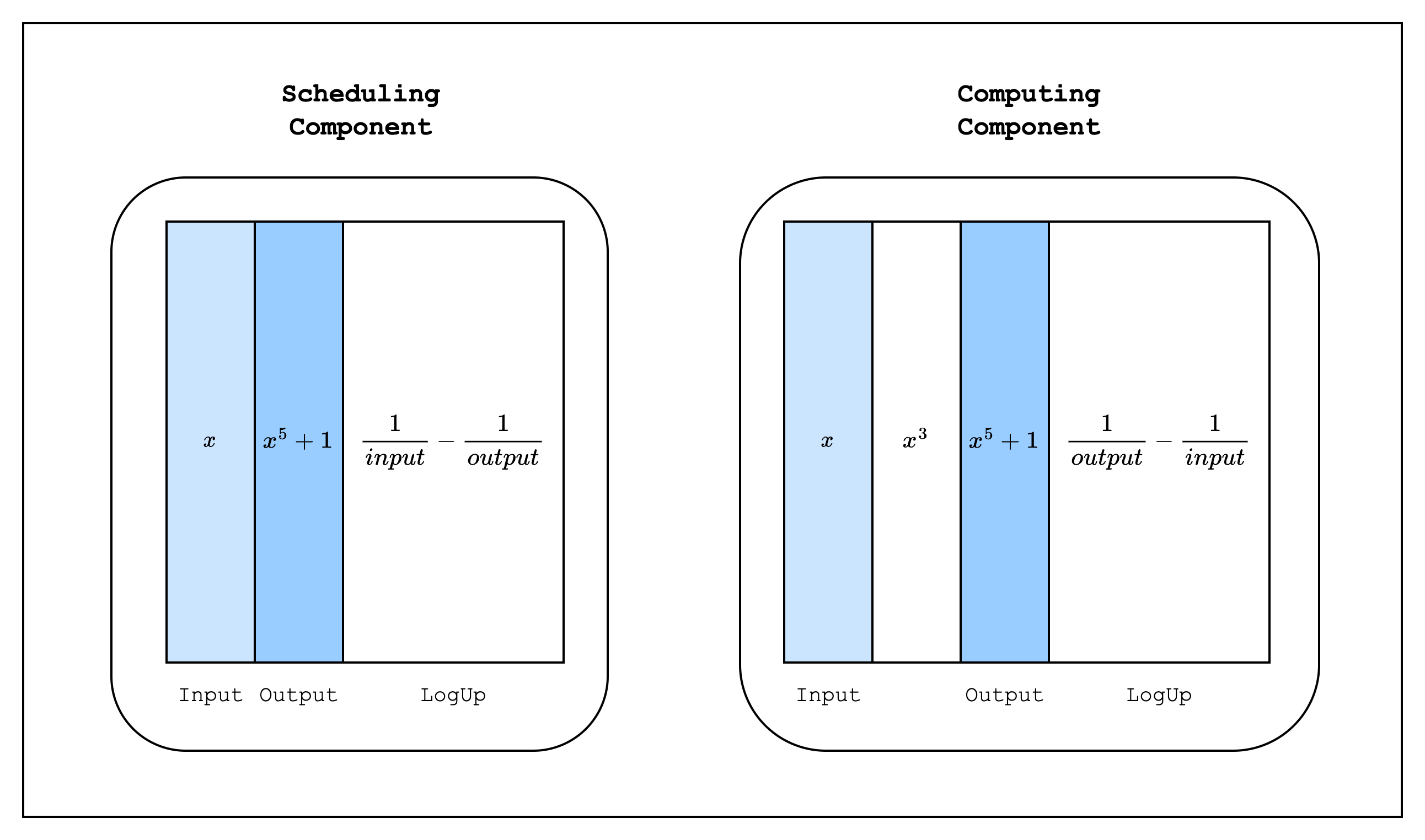

When we implement this in Stwo, the traces of each component will look like Figure 2 above. Each component has its own original and LogUp traces, and the inputs and outputs of each component are connected by lookups. Since the scheduling component sets the LogUp value as a positive multiplicity and the computing component sets the same value as a negative multiplicity, the verifier can simply check that the sum of the two LogUp columns is zero. Note that we combine the input and output randomly as

to form a single lookup. This is because we want to ensure that each input is paired with the correct output. If we add the input and output as separate lookups as

A malicious prover can switch the output with a different row and still come up with a valid proof. For example, the following scheduling component

| Input | Output |

|---|---|

| x | y^5 + 1 |

| y | x^5 + 1 |

And the following computing component

| Input | Intermediate | Output |

|---|---|---|

| x | x^3 + 1 | x^5 + 1 |

| y | y^3 + 1 | y^5 + 1 |

would be valid.

Implementation

Let's move on to the implementation.

fn main() {

// --snip--

// Create trace columns

let scheduling_trace = gen_scheduling_trace(log_size);

let computing_trace = gen_computing_trace(log_size, &scheduling_trace[0], &scheduling_trace[1]);

// Statement 0

let statement0 = ComponentsStatement0 { log_size };

statement0.mix_into(channel);

// Commit to the trace columns

let mut tree_builder = commitment_scheme.tree_builder();

tree_builder.extend_evals([scheduling_trace.clone(), computing_trace.clone()].concat());

tree_builder.commit(channel);

// Draw random elements to use when creating the random linear combination of lookup values in the LogUp columns

let lookup_elements = ComputationLookupElements::draw(channel);

// Create LogUp columns

let (scheduling_logup_cols, scheduling_claimed_sum) = gen_scheduling_logup_trace(

log_size,

&scheduling_trace[0],

&scheduling_trace[1],

&lookup_elements,

);

let (computing_logup_cols, computing_claimed_sum) = gen_computing_logup_trace(

log_size,

&computing_trace[0],

&computing_trace[2],

&lookup_elements,

);

// Statement 1

let statement1 = ComponentsStatement1 {

scheduling_claimed_sum,

computing_claimed_sum,

};

statement1.mix_into(channel);

// Commit to the LogUp columns

let mut tree_builder = commitment_scheme.tree_builder();

tree_builder.extend_evals([scheduling_logup_cols, computing_logup_cols].concat());

tree_builder.commit(channel);

let components = Components::new(&statement0, &lookup_elements, &statement1);

let stark_proof = prove(&components.component_provers(), channel, commitment_scheme).unwrap();

let proof = ComponentsProof {

statement0,

statement1,

stark_proof,

};

// --snip--

}The code above for proving the components should look pretty familiar by now. Since we need to do everything twice as many times, we create structs like ComponentsStatement0, ComponentsStatement1, Components, and ComponentsProof, but the main logic is the same.

Let's take a closer look at how the LogUp columns are generated.

fn gen_scheduling_logup_trace(

log_size: u32,

scheduling_col_1: &CircleEvaluation<SimdBackend, M31, BitReversedOrder>,

scheduling_col_2: &CircleEvaluation<SimdBackend, M31, BitReversedOrder>,

lookup_elements: &ComputationLookupElements,

) -> (

Vec<CircleEvaluation<SimdBackend, M31, BitReversedOrder>>,

SecureField,

) {

// --snip--

let scheduling_input_output: PackedSecureField =

lookup_elements.combine(&[scheduling_col_1.data[row], scheduling_col_2.data[row]]);

col_gen.write_frac(row, PackedSecureField::one(), scheduling_input_output);

// --snip--

fn gen_computing_logup_trace(

log_size: u32,

computing_col_1: &CircleEvaluation<SimdBackend, M31, BitReversedOrder>,

computing_col_3: &CircleEvaluation<SimdBackend, M31, BitReversedOrder>,

lookup_elements: &ComputationLookupElements,

) -> (

Vec<CircleEvaluation<SimdBackend, M31, BitReversedOrder>>,

SecureField,

) {

// --snip--

let computing_input_output: PackedSecureField =

lookup_elements.combine(&[computing_col_1.data[row], computing_col_3.data[row]]);

col_gen.write_frac(row, -PackedSecureField::one(), computing_input_output);

// --snip--

}As you can see, the LogUp values of the input and output columns of both the scheduling and computing components are batched together, but in the scheduling component, the output LogUp value is subtracted from the input LogUp value, while in the computing component, the input LogUp value is subtracted from the output LogUp value. This means that when the LogUp sums from both components are added together, they should cancel out to zero.

Next, let's check how the constraints are created.

impl FrameworkEval for SchedulingEval {

// --snip--

fn evaluate<E: EvalAtRow>(&self, mut eval: E) -> E {

let input_col = eval.next_trace_mask();

let output_col = eval.next_trace_mask();

eval.add_to_relation(RelationEntry::new(

&self.lookup_elements,

E::EF::one(),

&[input_col, output_col],

));

eval.finalize_logup();

eval

}

}

impl FrameworkEval for ComputingEval {

// --snip--

fn evaluate<E: EvalAtRow>(&self, mut eval: E) -> E {

let input_col = eval.next_trace_mask();

let intermediate_col = eval.next_trace_mask();

let output_col = eval.next_trace_mask();

eval.add_constraint(

intermediate_col.clone() - input_col.clone() * input_col.clone() * input_col.clone(),

);

eval.add_constraint(

output_col.clone()

- intermediate_col.clone() * input_col.clone() * input_col.clone()

- E::F::one(),

);

eval.add_to_relation(RelationEntry::new(

&self.lookup_elements,

-E::EF::one(),

&[input_col, output_col],

));

eval.finalize_logup();

eval

}

}As you can see, we define the LogUp constraints for each component, and we also add two constraints that make sure the computations and are correct.

fn main() {

// --snip--

// Verify claimed sums

assert_eq!(

scheduling_claimed_sum + computing_claimed_sum,

SecureField::zero()

);

// Unpack proof

let statement0 = proof.statement0;

let statement1 = proof.statement1;

let stark_proof = proof.stark_proof;

// Create channel and commitment scheme

let channel = &mut Blake2sChannel::default();

let commitment_scheme = &mut CommitmentSchemeVerifier::<Blake2sMerkleChannel>::new(config);

let log_sizes = statement0.log_sizes();

// Preprocessed columns.

commitment_scheme.commit(stark_proof.commitments[0], &log_sizes[0], channel);

// Commit to statement 0

statement0.mix_into(channel);

// Trace columns.

commitment_scheme.commit(stark_proof.commitments[1], &log_sizes[1], channel);

// Draw lookup element.

let lookup_elements = ComputationLookupElements::draw(channel);

// Commit to statement 1

statement1.mix_into(channel);

// Interaction columns.

commitment_scheme.commit(stark_proof.commitments[2], &log_sizes[2], channel);

// Create components

let components = Components::new(&statement0, &lookup_elements, &statement1);

verify(

&components.components(),

channel,

commitment_scheme,

stark_proof,

)

.unwrap();Finally, we verify the components!

Additional Examples

Here, we introduce some additional AIRs that may help in designing more complex AIRs.

Selectors

A selector is a column of 0s and 1s that selectively enables or disables a constraint. One example of a selector is the IsFirst column which has a value of 1 only on the first row. This can be used when constraints are defined over both the current and previous rows but we need to make an exception for the first row.

For example, as seen in Figure 1, when we want to track the cumulative sum of a column, i.e. , the previous row of the first row points to the last row, creating an incorrect constraint . Thus, we need to disable the constraint for the first row and enable a separate constraint . This can be achieved by using a selector column that has a value of 1 on the first row and 0 on the other rows and multiplying the constraint by the selector column:

where refers to the previous value of in the multiplicative subgroup of the finite field.

IsZero

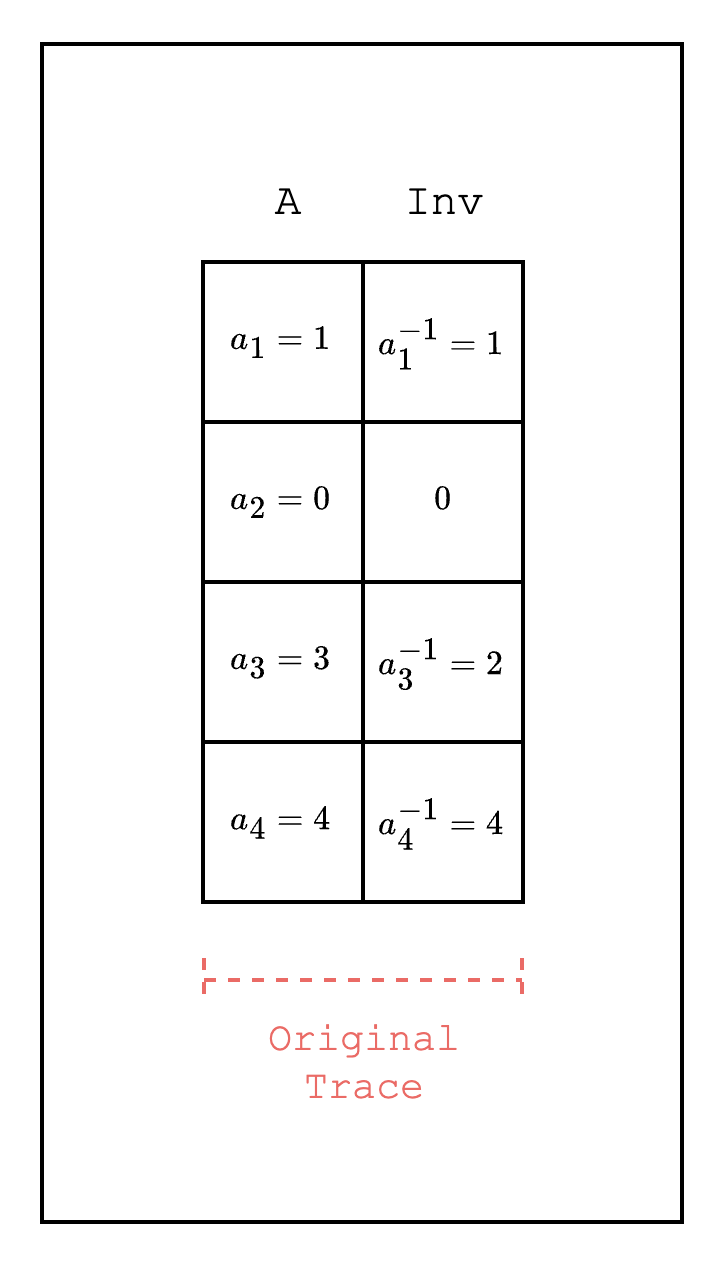

Checking that a certain field element is zero is a common use case when writing AIRs. To do this efficiently, we can use the property of finite fields that a non-zero field element always has a multiplicative inverse.

For example, in Figure 2, we want to check whether a field element in is zero. We create a new column that contains the multiplicative inverse of each field element . We then use the multiplication of the two columns and check whether the result is 0 or 1. Note that if the existing column has a zero element, we can insert any value in the new column since the multiplication will always be zero.

This way, we can create a constraint that uses the IsZero condition as part of the constraint, e.g. , which checks if is 0 and if is not 0.

Public Inputs

When writing AIRs, we may want to expose some values in the trace to the verifier to check in the open. For example, when running an AIR for a Cairo program, we may want to check that the program that was executed is the correct one.

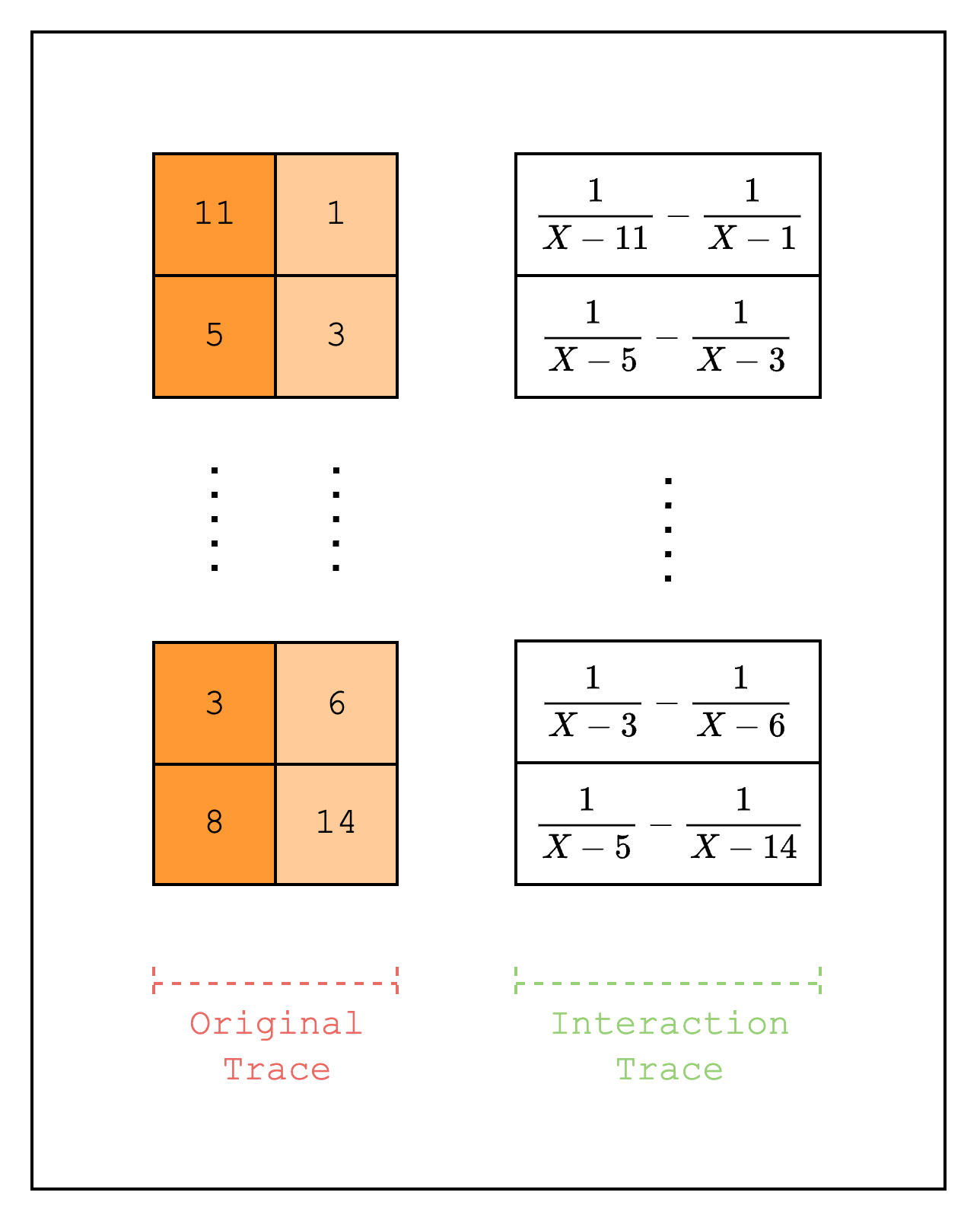

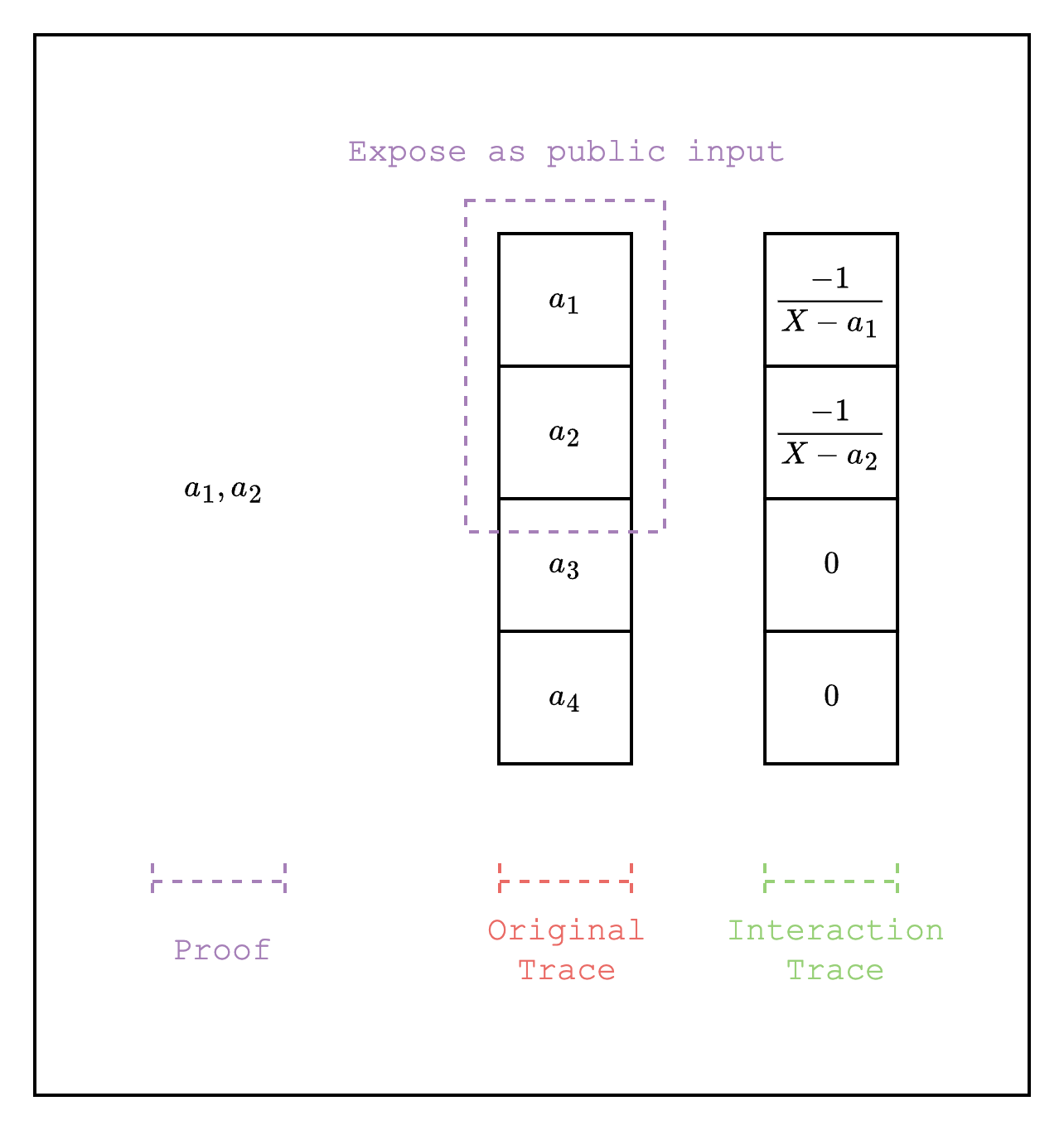

In Stwo, we can achieve this by adding the public input portion of the trace as a LogUp column as negative multiplicity. As shown in Figure 3, the public inputs are added as LogUp values with negative multiplicity and . The public inputs are given to the verifier as part of the proof and the verifier can directly compute the LogUp values with positive multiplicity and and add it to the LogUp sum and check that the total sum is 0.

One important thing to note is that the public inputs must be added to the Fiat-Shamir channel before drawing random elements for the interaction trace. We refer the reader to this example implementation for reference.

XOR

We can also handle XOR operations as part of the AIR. First, as we did in the Components section, we create a computing component and a scheduling component. Then, we connect the two components using lookups: the computing component sets the LogUp value as a negative multiplicity and the scheduling component sets the same value as a positive multiplicity.

For example, Figure 4 shows the XOR operation for 4-bit integers. To accommodate the entire combination of inputs, the size of the trace for the computing component is rows. Note that the size of the trace for the scheduling component does not have to be the same as the computing component.

Note that for larger integers, we may need to decompose into smaller limbs to avoid creating large tables. Also note that the M31 field does not fully support XOR operations for 31-bit integers since we cannot use , although this is not feasible as it would require a table of size of around .

Cairo AIR

The following sections cover how Cairo is expressed as an AIR and proved using Stwo. The explanation is based on this commit of the Stwo-Cairo repository.

Overview of Cairo

This is an informal overview of Cairo. For a more formal explanation, please refer to the original Cairo paper.

Let's start by understanding how Cairo works. Essentially, Cairo is a Turing-complete CPU architecture specifically designed to enable efficient proofs of execution using STARKs. In particular, Cairo uses a read-only memory model instead of the more common read-write memory model and does not use any general-purpose registers.

Non-Deterministic Read-Only Memory

A read-only memory model is one where each address in memory can have only a single value throughout the program's execution. This contrasts with the more common read-write memory model, where an address can have multiple values at different points during execution.

The memory is also non-deterministic: the prover provides the values of the memory cells as witness values, and they do not need further constraints beyond ensuring that each address has a single value throughout the program's execution.

Registers

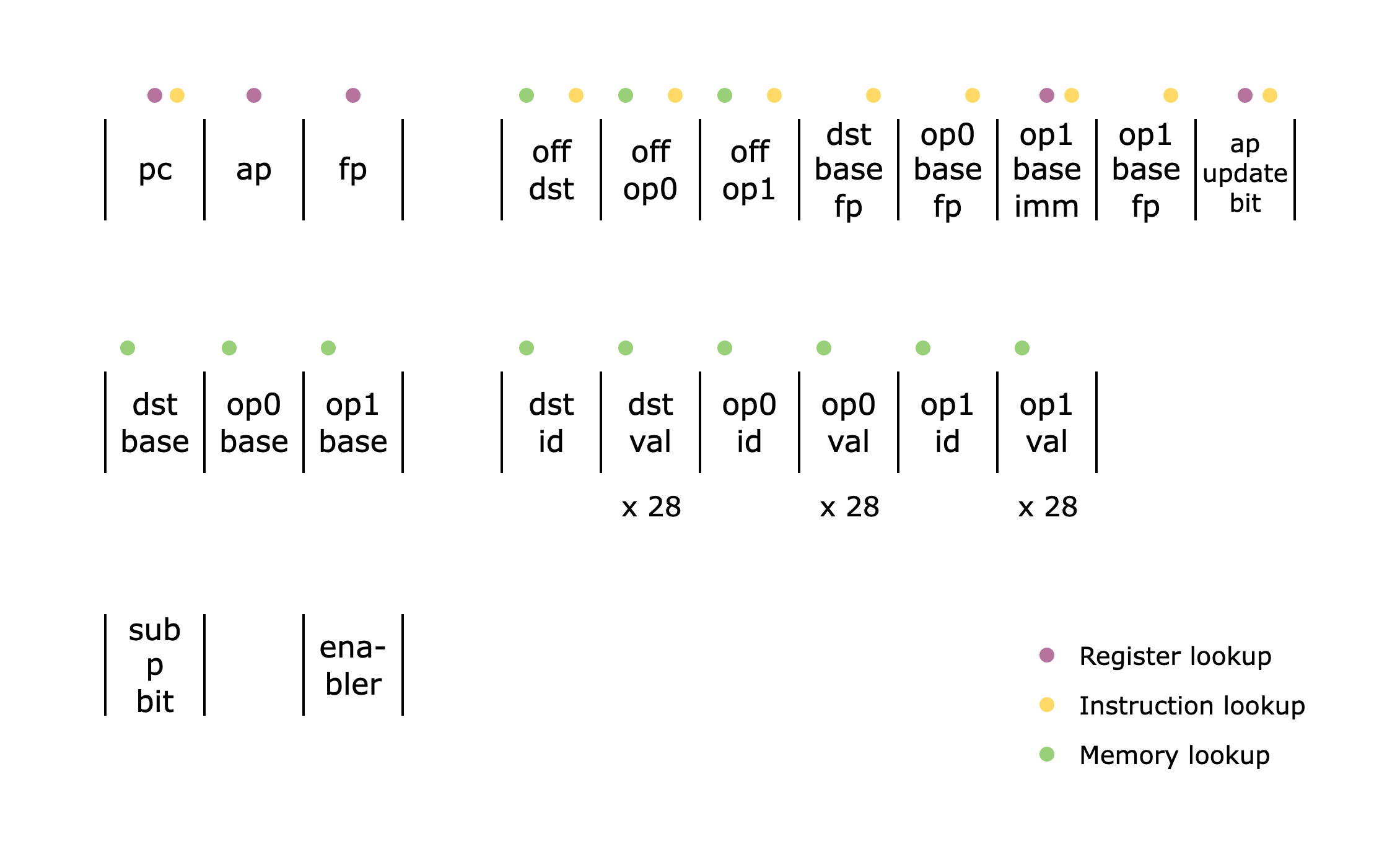

In physical CPUs, accessing memory is expensive compared to accessing registers due to physical proximity. This is why instructions typically operate over registers rather than directly over memory cells. In Cairo, accessing memory and registers incur the same cost, so Cairo instructions operate directly over memory cells. Thus, the three registers used by Cairo do not store instructions or operand values like in physical CPUs, but rather pointers to the memory cells where the instructions and operands are stored:

pcis the program counter, which points to the current Cairo instructionapis the allocation pointer, which points to the current available memory addressfpis the frame pointer, which points to the current frame in the "call stack"

Cairo Instructions

Let's now see what a Cairo instruction looks like.

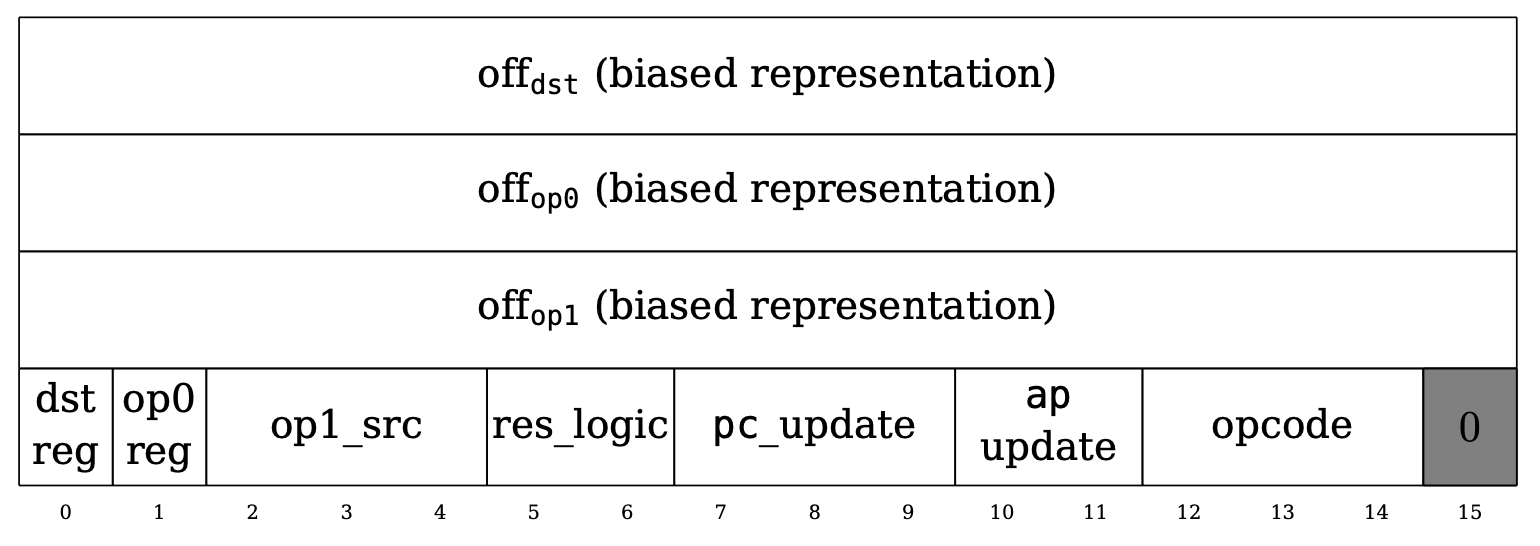

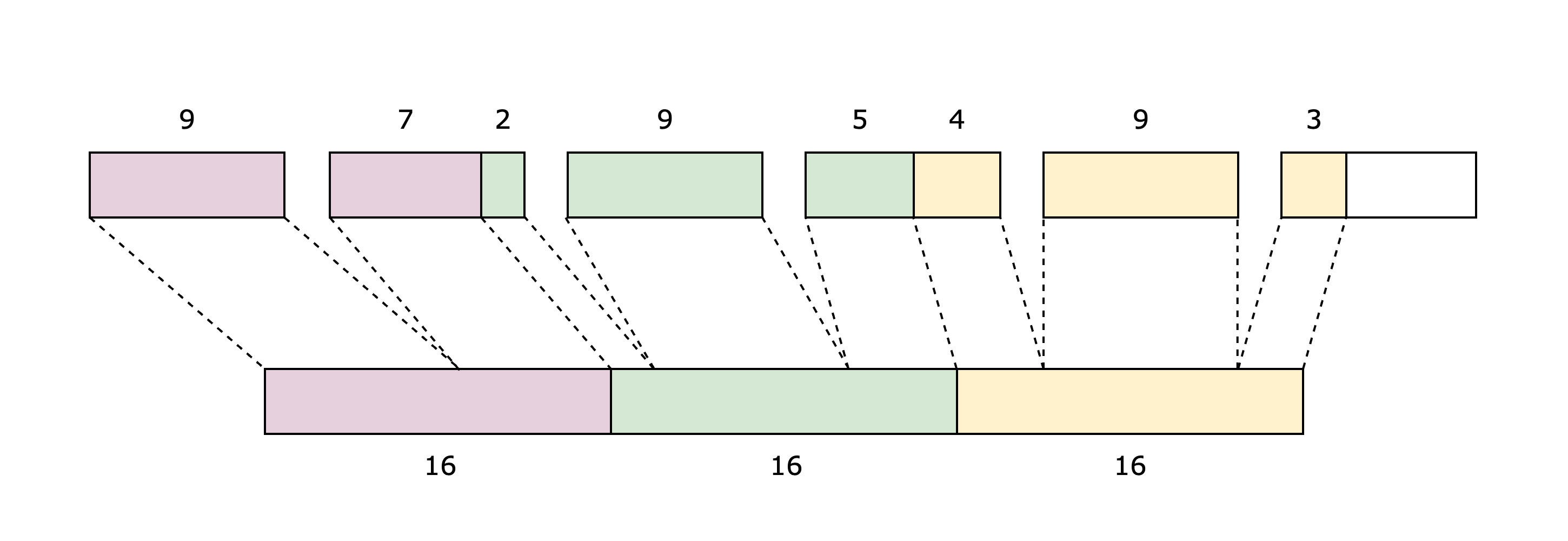

As the figure above from the Cairo paper shows, an instruction is 64 bits, where the first three 16-bit integers are signed offsets to the operands dst, op0, and op1.

The next 15 bits are flags. The dst_reg and op0_reg 1-bit flags indicate whether to use the ap or the fp register as the base for the dst and op0 operands. The op1_src flag supports a wider range of base values for the op1 operand: op0, pc, fp, and ap. The res_logic flag indicates how to compute the res operand: op1, op0 + op1, or op0 * op1. The pc_update and ap_update flags show how to update the pc and ap registers after computing the operands. The opcode flag indicates whether this instruction belongs to a predefined opcode (e.g., CALL, RET, ASSERT_EQ) and also defines how the ap and fp registers should be updated.

For a more detailed explanation of the flags, please refer to Section 4.5 of the Cairo paper.

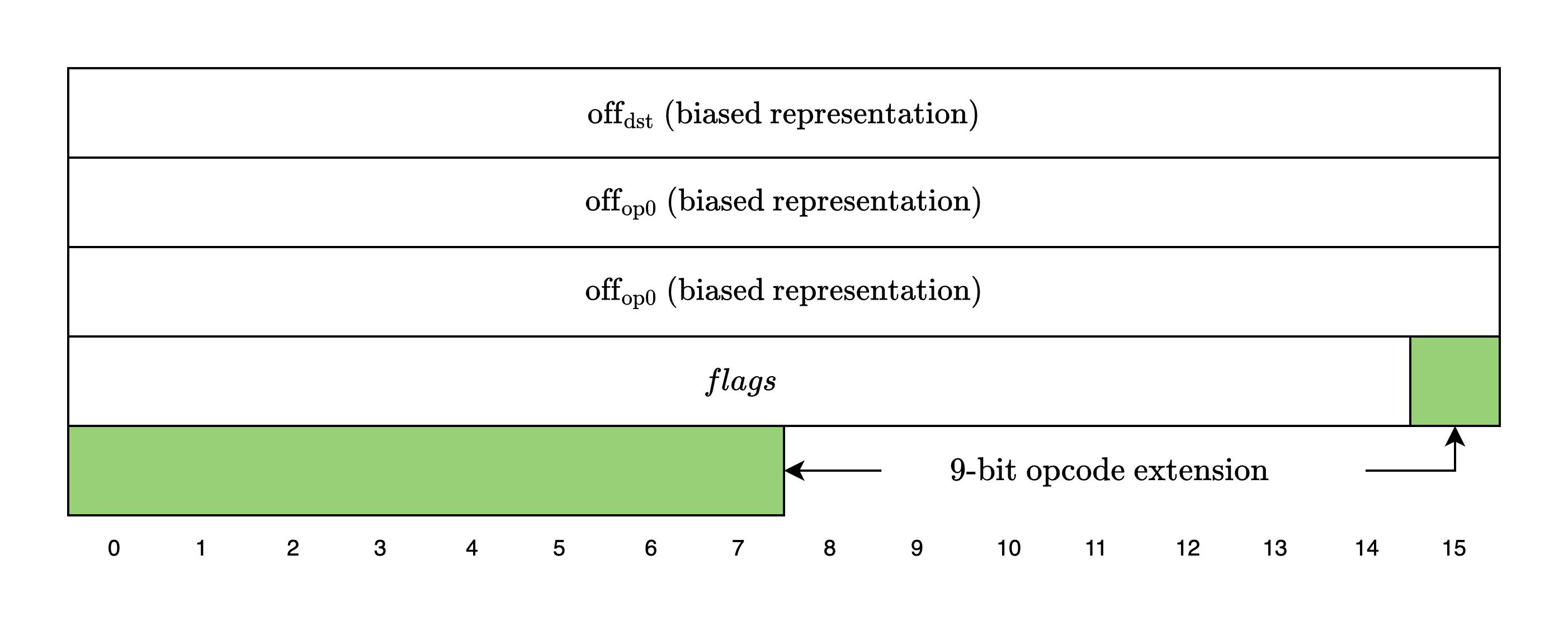

Finally, the last bit is fixed to 0, but as we will see in the next section, this design has been modified in the current version of Cairo to support opcode extensions.

Opcodes and Opcode Extensions

In Cairo, an opcode refers to what the instruction should do. Cairo defines a set of common CPU operations as specific opcodes (e.g., ADD, MUL, JUMP, CALL), and the current version of Cairo also defines a new set of opcodes used to improve the performance of heavy computations such as Blake2s hashing and QM31 addition and multiplication.

Since the 64-bit instruction structure is not flexible enough to support this extended set of opcodes, Cairo extends the instruction size to 72 bits and uses the last 9 bits as the opcode extension value.

As of this commit, the following opcode extension values are supported:

0: Stone (original opcodes)1: Blake2: BlakeFinalize3: QM31Operation

Even if an instruction does not belong to any predefined set of opcodes, it is considered a valid opcode as long as it adheres to the state-transition function defined in Section 4.5 of the Cairo paper. In Stwo Cairo, this is referred to as a generic opcode.

Basic Building Blocks

This section covers the basic building blocks used to build the Cairo AIR.

Felt252 to M31

Cairo works over the prime field , while Stwo works over the prime field . Thus, in order to represent the execution of Cairo with Stwo, we need to decompose the 252-bit integers into 31-bit integers. The Cairo AIR chooses to use the 9-bit decomposition, so a single 252-bit integer will result in 28 9-bit limbs.

Range checks

Range-checks are very commonly used in the Cairo AIR. They are used to ensure that the witness values are within a certain range, most commonly within a certain bit length. For example, in the Felt252 to M31 section, we saw that a 252-bit integer is decomposed into 28 9-bit limbs, so we need to verify that each limb is in the range .

This is done by using a preprocessed column that contains the entire range of possible values for the bit length. For example, for a 9-bit range check, the column will contain the values from 0 to . We also have another column that contains the number of times the range-check was invoked for each valid value and we use lookups to check that each range-check is valid. For a more practical example, please refer to the Static Lookups section.

Main Components

Now that we have a basic understanding of Cairo and the building blocks that are used to build the Cairo AIR, let's take a look at the main components of the Cairo AIR.

For readers who are unfamiliar with the concepts of components and lookups, we suggest going over the Components section of the book.

Fetch, Decode, Execute

Cairo follows the common CPU architecture of fetching, decoding, and executing an instruction in a single CPU step. Below is a high-level diagram of what a single CPU step looks like in Cairo.

Since we need to prove the correctness of all CPU steps, the Cairo AIR writes the results of fetching, decoding, and executing an instruction at every CPU step into a trace and proves that the constraints over the trace are satisfied—i.e., consistent with the semantics of Cairo.

Let's keep this in mind while we go over the main components of the Cairo AIR.

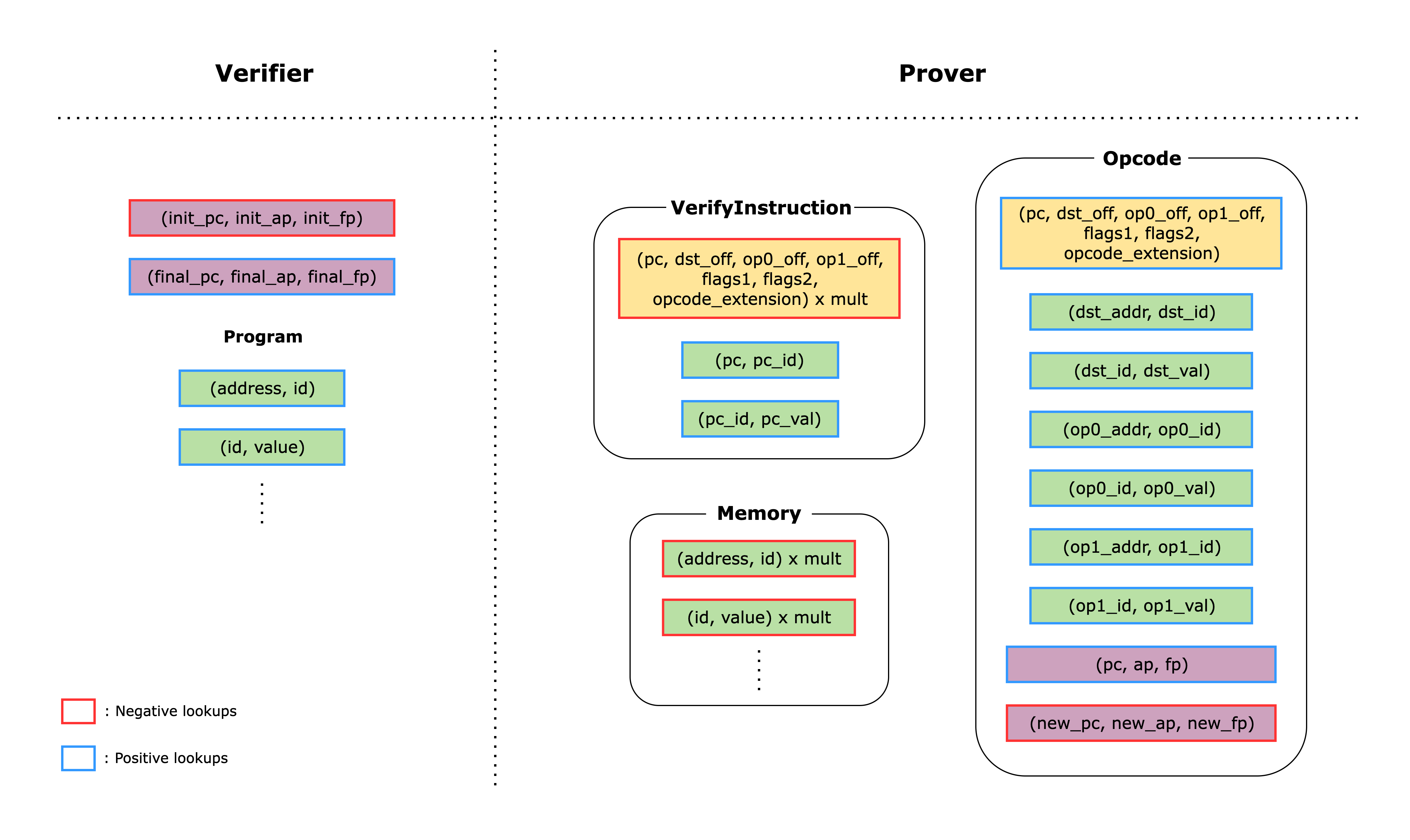

1. Memory Component

The first component we need is a Memory component, which implements the non-deterministic read-only memory model of Cairo.

In Cairo AIR, instead of mapping the memory address to a value directly, we first map the address to an id and then map the id to a value. This is done to classify the memory values into two groups: Small and Big, where Small values are 72-bit integers and Big values are 252-bit integers. As many memory values do not exceed the Small size, this allows us to save cost on unnecessary padding.

As a result, the Memory component is actually 2 components: MemoryAddressToId and MemoryIdToValue.

The constraints for the MemoryAddressToId and MemoryIdToValue components are as follows:

- An

addressmust appear once and only once in theMemoryAddressToIdcomponent. - An

idmust appear once and only once in theMemoryIdToValuecomponent. - Each

(address, id, value)tuple must be unique.

The first constraint is implemented by using a preprocessed column that contains the sequence of numbers [0, MAX_ADDRESS) and using this as the address values (in the actual code, the memory address starts at 1, so we need to add 1 to the sequence column).

The second constraint is guaranteed because the address value is always unique.

A short explainer on how the id value is computed: